You have seen one or more variations on:

This Is How Much It ‘Costs’ To Get An Ambassadorship: Guccifer 2.0 Leaks DNC ‘Pay-To-Play’ Donor List

DNC Leak Exposes Pay to Play Politics, How the Clinton’s REALLY Feel About Obama

CORRUPTION! Obama caught up in Pay for Play Scandal, sold every job within his power to sell.

You may be wondering why CNN, the New York Times and the Washington Post aren’t all over this story.

While selling public offices surprises some authors, whose names I omitted out of courtesy to their families, selling offices is a regularized activity in the United States.

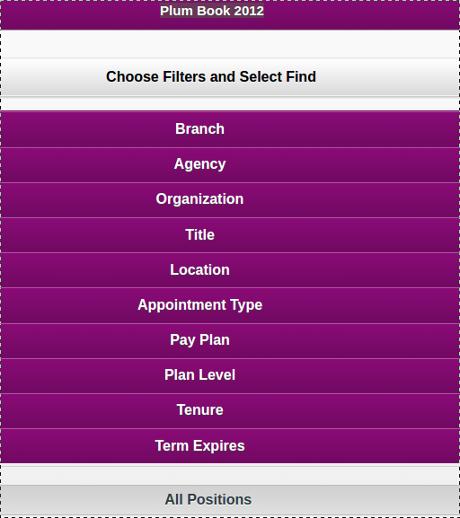

So regularized that immediately following each presidential election, the Government Printing Office publishes United States Government Policy and Supporting Positions (the “Plum Book,” most recently the 2012 edition), which lists the 9,000-odd positions that are subject to presidential appointment.

From the description of the 2012 edition:

Every four years, just after the Presidential election, “United States Government Policy and Supporting Positions” is published. It is commonly known as the “Plum Book” and is alternately published between the House and Senate.

The Plum Book is a listing of over 9,000 civil service leadership and support positions (filled and vacant) in the Legislative and Executive branches of the Federal Government that may be subject to noncompetitive appointments, or in other words by direct appointment.

These “plum” positions include agency heads and their immediate subordinates, policy executives and advisors, and aides who report to these officials. Many positions have duties which support Administration policies and programs. The people holding these positions usually have a close and confidential relationship with the agency head or other key officials.

Even though the 2012 “plum” book is currently on sale for $19.00 (usual price is $38.00), given that a new one will appear later this year, consider using the free online version at: Plum Book 2012.

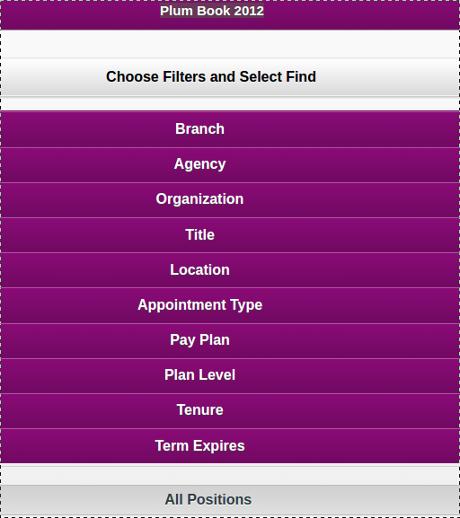

The online interface is nothing to brag about. You have to select filters and then select “find” to obtain further information on positions. Very poor UI.

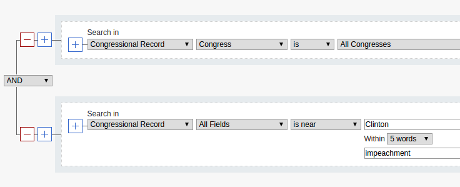

However, if under title you select “Chief of Mission, Monaco” and then select “find,” the resulting screen looks something like this:

To your far right there is a small arrow that, if selected, takes you to the details:

If you were teaching a high school civics class, the question would be:

How much did Charles Rivkin have to donate to obtain the position of Chief of Mission, Monaco?

FYI, the CIA World FactBook gives this brief description for Monaco:

Monaco, bordering France on the Mediterranean coast, is a popular resort, attracting tourists to its casino and pleasant climate. The principality also is a banking center and has successfully sought to diversify into services and small, high-value-added, nonpolluting industries.

Unlike the unhappy writers that started this post, you would point the class to: Transaction Query By Individual Contributor at the Federal Election Commission site.

Enter the name “Rivkin, Charles” and select “Get Listing.”

Rivkin’s contributions are broken into categories and helpfully summed to assist you in finding the total.

Contributions to All Other Political Committees Except Joint Fundraising Committees – $72399.00

Joint Fundraising Contributions – $22300.00

Recipient of Joint Fundraiser Contributions – $36052.00

Caution: There is an anomalous Rivkin in that last category, contributing $40 to Donald Trump. For present discussions, I would subtract that from the raw sum of $130,751, leaving a grand total of:

$130,711 to be the Chief of Mission, Monaco.

Realize that this was not a lump sum payment but a steady stream of contributions starting in the year 2000.
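If you want the class to check the arithmetic rather than trust it, a minimal sketch along these lines would do. The category amounts are the ones reported above; the variable names are my own, and nothing here queries the FEC site.

```python
from decimal import Decimal

# Category subtotals for "Rivkin, Charles" as listed above
# (dictionary keys are shortened labels, chosen for illustration).
rivkin_subtotals = {
    "All other political committees": Decimal("72399.00"),
    "Joint fundraising contributions": Decimal("22300.00"),
    "Recipient of joint fundraiser contributions": Decimal("36052.00"),
}

# The $40 contribution to Donald Trump from the anomalous Rivkin.
anomalous = Decimal("40.00")

# Decimal avoids floating-point rounding surprises with dollar amounts.
raw_total = sum(rivkin_subtotals.values(), Decimal("0"))
adjusted_total = raw_total - anomalous

print(f"Raw sum of categories: ${raw_total:,.2f}")
print(f"After removing the anomaly: ${adjusted_total:,.2f}")
```

The adjusted figure is the one to compare against the total quoted for the Monaco post.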

Using the Transaction Query By Individual Contributor resource, you can correct stories that claim:

Jane Hartley paid DNC $605,000 and then was nominated by Obama to serve concurrently as the U.S. Ambassador to the French Republic and the Principality of Monaco.

(from: This Is How Much It ‘Costs’ To Get An Ambassadorship: Guccifer 2.0 Leaks DNC ‘Pay-To-Play’ Donor List)

If you run the FEC search you will find:

Contributions to Super PACs, Hybrid PACs and Historical Soft Money Party Accounts – $5000.00

Contributions to All Other Political Committees Except Joint Fundraising Committees – $516609.71

Joint Fundraising Contributions – $116000.00

Grand total: $637,609.71.

So, $637,609.71, not $605,000.00, and paid as a series of contributions starting in 1997, not as one lump sum.
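The same kind of check works for correcting a published figure. This is only a sketch: the subtotals are the three listed above for Hartley, the claimed amount is the one from the quoted story, and the function name `compare_to_claim` is hypothetical.

```python
from decimal import Decimal

def compare_to_claim(subtotals, claimed):
    """Return (total, difference), where difference = total - claimed."""
    total = sum(subtotals, Decimal("0"))
    return total, total - claimed

# FEC category subtotals for Hartley, as listed above.
hartley_subtotals = [
    Decimal("5000.00"),    # Super PACs, Hybrid PACs, historical soft money
    Decimal("516609.71"),  # all other political committees
    Decimal("116000.00"),  # joint fundraising contributions
]

# The figure claimed in the story being corrected.
claimed = Decimal("605000.00")

total, difference = compare_to_claim(hartley_subtotals, claimed)
print(f"FEC total: ${total:,.2f}")
print(f"Story is off by: ${difference:,.2f}")
```

A positive difference means the story understated the public record.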

You don’t have to search discarded hard drives to get pay-to-play appointment pricing. It’s all a matter of public record.

PS: I’m not sure how accurate or complete Nominations & Appointments (White House) may be, but it’s an easier starting place for current appointees than the online Plum Book.

PPS: Estimated pricing for “Plum” book positions could be made more transparent. Not a freebie. Let me know if you are interested.