From the webpage:

Welcome to JournalismCourses.org, an online training platform of the Knight Center for Journalism in the Americas at the University of Texas at Austin.

Since 2003, our online courses have trained more than 50,000 people from 160 countries. Initially, the program was focused on online classes for small groups of journalists, mainly from Latin America and the Caribbean, but eventually the Knight Center began offering Massive Open Online Courses (MOOCs). It became the world's first MOOC program specializing in journalism training, though it still offers courses for small groups as well. The MOOCs are free, but participants are asked to pay a small fee for a certificate of completion. Other courses are paid, but we keep the fees as low as possible in an effort to make the courses available to as many people as possible.

Our courses cover a variety of topics including investigative reporting, ethics, digital journalism techniques, election reporting, coverage of armed conflicts, computer-assisted reporting, and many others. Our MOOCs and courses for smaller groups last from four to six weeks. They are conducted completely online and taught by some of the most respected, experienced journalists and journalism trainers in the world. The courses take full advantage of multimedia. They feature video lectures, discussion forums, audio slideshows, self-paced quizzes, and other collaborative learning technologies. Our expert instructors provide a quality learning experience for journalists seeking to improve their skills, and citizens looking to become more engaged in journalism and democracy.

The courses offered on the JournalismCourses.org platform are asynchronous, so participants can log in on the days and times that are most convenient for them. Each course, however, is open only for a specific period of time, and access to it is restricted to registered students.

The Knight Center has offered online courses in English, Spanish and Portuguese. Please check this site often, as we will soon announce more online courses.

For more information about the Knight Center’s Distance Learning program, please click here.

For more information about the Knight Center’s MOOCs and how they work, see our Frequently Asked Questions (FAQ).

…

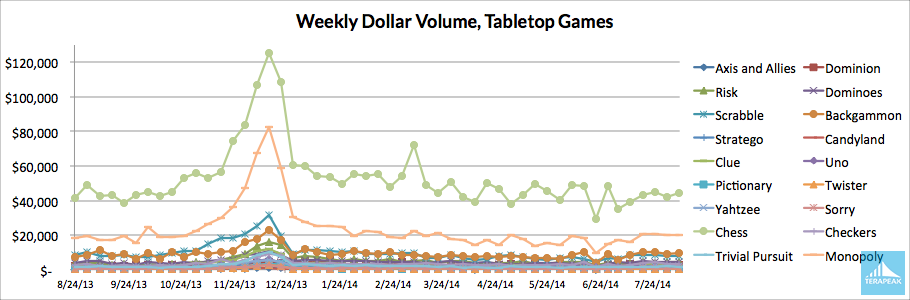

JournalismCourses.org sponsors the Introduction to Infographics and Data Visualization MOOC I just posted about, but I thought it deserved more than a passing mention in that post.

More courses are on the way!

Speaking of more courses, do yourself a favor and visit the Knight Center’s Distance Learning program. You won’t be disappointed, I promise.