Can you spot the “American bias” both in this story and the reporting by NPR?

U.S. Operations Killed Two Hostages Held By Al-Qaida, Including An American by Krishnadev Calamur:

President Obama offered his “grief and condolences” to the families of the American and Italian aid workers killed in a U.S. counterterrorism operation in January. Both men were held hostage by al-Qaida.

“I take full responsibility for a U.S. government counterterrorism operation that killed two innocent hostages held by al-Qaida,” Obama said.

The president said both Warren Weinstein, an American held by the group since 2011, and Giovanni Lo Porto, an Italian national held since 2012, were “devoted to improving the lives of the Pakistani people.”

Earlier Thursday, the White House in a statement announced the two deaths, along with the killings of two American al-Qaida members.

“Analysis of all available information has led the Intelligence Community to judge with high confidence that the operation accidentally killed both hostages,” the White House statement said. “The operation targeted an al-Qa’ida-associated compound, where we had no reason to believe either hostage was present, located in the border region of Afghanistan and Pakistan. No words can fully express our regret over this terrible tragedy.”

…

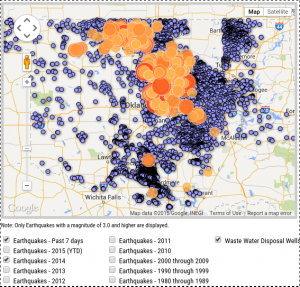

Exact numbers of casualties from American drone strikes are hard to come by but current estimates suggest that more people have died from drone attacks than in 9/11. A large number of those people were not the intended targets but civilians, including hundreds of children. A Bureau of Investigative Journalism report has spreadsheets you can download to find the specifics about drone strikes in particular countries.

Let’s pause to hear the Obama Administration’s “grief and condolences” over the deaths of civilians and children in each of those strikes:

That’s right, the Obama Administration has trouble admitting any civilians or children have died as a result of its drone war. Perhaps trying to avoid criminal responsibility for their actions. But it certainly has not expressed any “grief and condolences” over those deaths.

Jeff Bachman, of American University, estimates that between twenty-eight (28) and thirty-five (35) civilians die for every one (1) person killed on the Obama “kill” list in Pakistan alone. Drone Strikes: Are They Obama’s Enhanced Interrogation Techniques?

You will notice that NPR reporting does not contrast Obama’s “grief and condolences” for the deaths of two hostages (one of who was American) with his lack of any remorse over the deaths of civilians and children in other drone attacks.

Obama’s lack of remorse over the deaths of innocents in other drone attacks, reportedly isn’t unusual for war criminals. War criminals see their crimes as justified by the pursuit of a goal worth more than innocent human lives. Or in this case, more valuable than non-American innocent lives.

”

”