Some tools for lifting the patent data treasure by Michele Peruzzi and Georg Zachmann.

From the post:

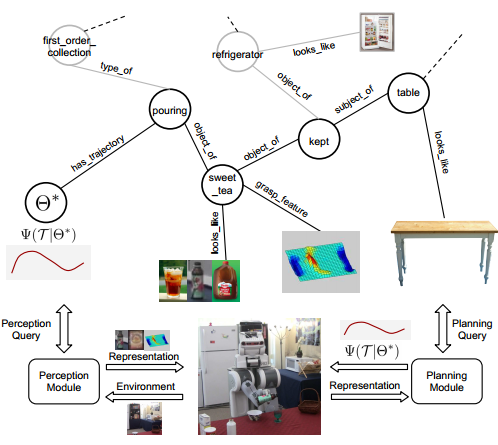

…Our work can be summarized as follows:

- We provide an algorithm that allows researchers to find the duplicates inside Patstat in an efficient way

- We provide an algorithm to connect Patstat to other kinds of information (CITL, Amadeus)

- We publish the results of our work in the form of source code and data for Patstat Oct. 2011.

More technically, we used or developed probabilistic supervised machine-learning algorithms that minimize the need for manual checks on the data, while keeping performance at a reasonably high level.

The post has links for source code and data for these three papers:

A flexible, scaleable approach to the international patent “name game” by Mark Huberty, Amma Serwaah, and Georg Zachmann

In this paper, we address the problem of having duplicated patent applicants’ names in the data. We use an algorithm that efficiently de-duplicates the data, needs minimal manual input and works well even on consumer-grade computers. Comparisons between entries are not limited to their names, and thus this algorithm is an improvement over earlier ones that required extensive manual work or overly cautious clean-up of the names.
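To make the de-duplication idea concrete, here is a minimal sketch. It is not the authors' code: the records are invented, and a fixed rule (same country plus a fuzzy name match above an assumed threshold of 0.6) stands in for the learned, probabilistic comparison the paper describes. Pairs judged to be the same entity are grouped with a small union-find.

```python
from difflib import SequenceMatcher
from itertools import combinations

# Invented applicant records, for illustration only.
applicants = [
    {"id": 0, "name": "Acme Corp", "country": "US"},
    {"id": 1, "name": "ACME Corporation", "country": "US"},
    {"id": 2, "name": "Acme Corp", "country": "FR"},  # same name, other country
    {"id": 3, "name": "Zenith Labs", "country": "US"},
]

def same_entity(a, b, threshold=0.6):
    """Compare on more than the name: country must agree as well.
    The threshold is an assumption, not a value from the paper."""
    name_sim = SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()
    return a["country"] == b["country"] and name_sim >= threshold

def deduplicate(records):
    """Group records into clusters of presumed duplicates (union-find)."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path compression
            x = parent[x]
        return x

    # All-pairs comparison is quadratic; fine for a toy list only.
    for a, b in combinations(records, 2):
        if same_entity(a, b):
            parent[find(b["id"])] = find(a["id"])

    clusters = {}
    for r in records:
        clusters.setdefault(find(r["id"]), []).append(r["id"])
    return sorted(clusters.values())

clusters = deduplicate(applicants)
print(clusters)
```

On anything the size of PATSTAT you would not compare every pair; restricting comparisons to records that share some cheap key is the usual way to keep this tractable.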

A scaleable approach to emissions-innovation record linkage by Mark Huberty, Amma Serwaah, and Georg Zachmann

PATSTAT has patent applications as its focus. This means it lacks important information on the applicants and/or the inventors. In order to have more information on the applicants, we link PATSTAT to the CITL database. This way the patenting behaviour can be linked to climate policy. Because of the structure of the data, we can adapt the deduplication algorithm to use it as a matching tool, retaining all of its advantages.

Remerge: regression-based record linkage with an application to PATSTAT by Michele Peruzzi, Georg Zachmann, Reinhilde Veugelers

We further extend the information content in PATSTAT by linking it to Amadeus, a large database of companies that includes financial information. Patent microdata is now linked to financial performance data of companies. This algorithm compares records using multiple variables, learning their relative weights by asking the user to find the correct links in a small subset of the data. Since it is not limited to comparisons among names, it is an improvement over earlier efforts and is not overly dependent on the name-cleaning procedure in use. It is also relatively easy to adapt the algorithm to other databases, since it uses the familiar concept of regression analysis.
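The "learn the weights from a small hand-labelled subset" idea can be sketched in a few lines. This is an illustration under loud assumptions, not the Remerge implementation: the records are invented, the three comparison variables (name token overlap, same country, same city) are my choice, and plain logistic regression fitted by stochastic gradient ascent stands in for whatever regression the paper actually uses.

```python
import math

def jaccard(a, b):
    """Token overlap between two names (0.0 to 1.0)."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if (ta | tb) else 0.0

def features(a, b):
    """Compare two (name, country, city) records on several variables.
    The leading 1.0 is the intercept term."""
    return [1.0, jaccard(a[0], b[0]), float(a[1] == b[1]), float(a[2] == b[2])]

def predict(w, x):
    """Estimated probability that the two records are the same entity."""
    return 1.0 / (1.0 + math.exp(-sum(wi * xi for wi, xi in zip(w, x))))

def fit(rows, labels, epochs=1000, lr=0.5):
    """Logistic regression by stochastic gradient ascent (assumed settings)."""
    w = [0.0] * len(rows[0])
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            err = y - predict(w, x)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
    return w

# Invented pairs that a user has marked as link (1) or no link (0).
LABELLED = [
    (("Nordwind Energie AG", "DE", "Hamburg"), ("Nordwind Energie Aktiengesellschaft", "DE", "Hamburg"), 1),
    (("Alpen Robotics GmbH", "AT", "Graz"), ("Robotics Alpen GmbH", "AT", "Graz"), 1),
    (("Delta Polymers NV", "NL", "Eindhoven"), ("Koninklijke Delta Polymers NV", "NL", "Amsterdam"), 1),
    (("Borealis Optics", "FI", "Espoo"), ("Borealis Optics Oyj", "FI", "Espoo"), 1),
    (("Nordwind Energie AG", "DE", "Hamburg"), ("Hafen Logistik", "DE", "Hamburg"), 0),
    (("Alpen Robotics GmbH", "AT", "Graz"), ("Alpine Bakery Ltd", "GB", "Leeds"), 0),
    (("Borealis Optics", "FI", "Espoo"), ("Kivi Software Oyj", "FI", "Espoo"), 0),
    (("Delta Polymers NV", "NL", "Eindhoven"), ("Delta Airlines Catering", "US", "Atlanta"), 0),
]

weights = fit([features(a, b) for a, b, _ in LABELLED], [y for _, _, y in LABELLED])

def best_link(query, candidates, w, threshold=0.5):
    """Return the most probable candidate, or None if none clears the threshold."""
    score, best = max((predict(w, features(query, c)), c) for c in candidates)
    return best if score >= threshold else None

query = ("Sol Turbine AG", "DE", "Kiel")
candidates = [("Kieler Fischmarkt", "DE", "Kiel"),
              ("Sol Turbine Aktiengesellschaft", "DE", "Kiel")]
print(best_link(query, candidates, weights))
```

Note that the two candidates share country and city with the query, so only the learned weight on the name variable separates them. That is the sense in which the relative weights, rather than a name-cleaning procedure, carry the decision.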

Record linkage is a form of merging that originated in epidemiology in the late 1940s. To “link” (read: merge) records across different formats, records were transposed into a uniform format and “linking” characteristics were chosen to gather matching records together. It is a very powerful technique that has been in continuous use and development ever since.

One major difference from topic maps is that record linkage has undisclosed subjects, that is, the subjects that make up the common format and the association of the original data sets with that format. I assume in many cases the mapping is documented, but it doesn’t appear as part of the final work product, thereby rendering the merging process opaque and inaccessible to future researchers. All you can say is “…this is the data set that emerged from the record linkage.”

That is sufficient for some purposes, but if you want to reduce the 80% of your time that is spent munging data that has been munged before, it is better to have the mapping documented and to use disclosed subjects with identifying properties.
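As a rough illustration of what "disclosed" could look like in practice, here is a small sketch in which the mapping to the common format and the identifying properties are written down as data and shipped with the merged result. Everything in it is hypothetical: the source names, the column names, and the output layout are mine, not PATSTAT's or any topic map standard's.

```python
import json

# Hypothetical column names for two sources. The mapping to the common
# format is declared as data rather than buried in merge code.
FIELD_MAP = {
    "source_a": {"person_name": "applicant_name", "ctry": "country"},
    "source_b": {"company": "applicant_name", "iso2": "country"},
}
LINK_ON = ["applicant_name", "country"]  # the identifying properties

def to_common(record, source):
    """Transpose one source record into the common format."""
    return {common: str(record[col]).strip().lower()
            for col, common in FIELD_MAP[source].items()}

def link(rec_a, rec_b):
    """Merge two records if they agree on every identifying property."""
    a, b = to_common(rec_a, "source_a"), to_common(rec_b, "source_b")
    if any(a[k] != b[k] for k in LINK_ON):
        return None
    # Keep the mapping with the result so the merge can be audited or reused.
    return {"record": a, "linked_on": LINK_ON, "field_map": FIELD_MAP}

result = link({"person_name": "Acme Corp ", "ctry": "US"},
              {"company": "ACME CORP", "iso2": "us"})
print(json.dumps(result, indent=2))
```

The next person to pick up the merged file can then see which columns were treated as the same subject and on what basis records were joined, instead of reverse-engineering it.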

Having said all of that, these are tools you can use now on patents and/or extend to other data sets. The disambiguation problems addressed for patents are the same ones you have encountered with names for other entities.

If a topic map underlies your analysis, you will spend less time on the next analysis of the same information. Think of it as reducing your intellectual overhead on subsequent data sets.

Income – Less overhead = Greater revenue for you. 😉

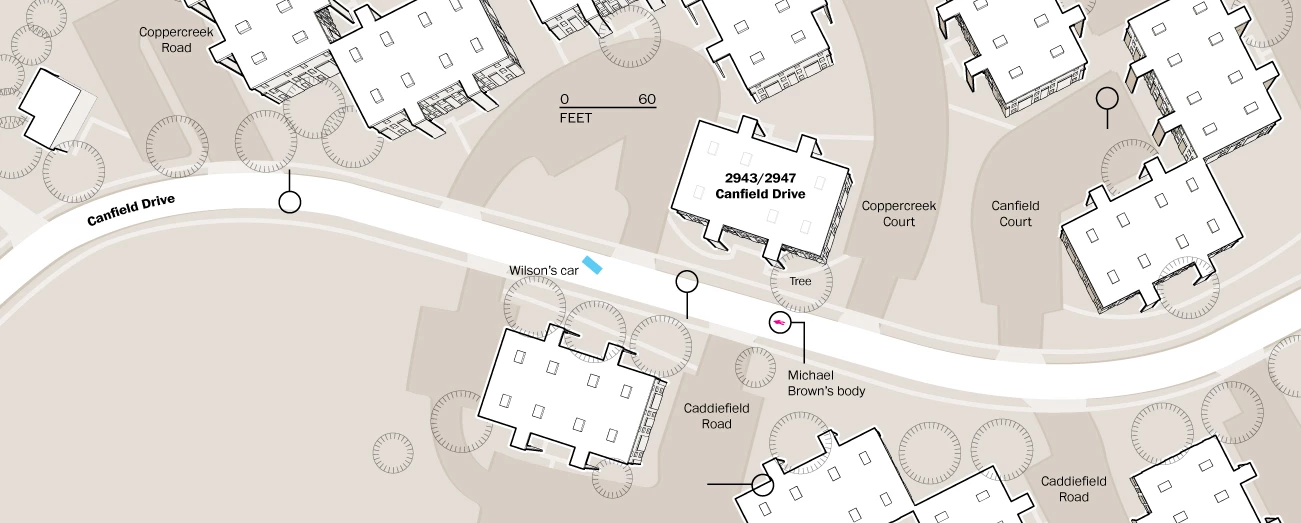

PS: Don’t be confused: you are looking for the EPO Worldwide Patent Statistical Database (PATSTAT). Naturally there is also a US organization, http://www.patstats.org/, that covers just patent litigation statistics.

PPS: Sam Hunting, the source of so many interesting resources, pointed me to this post.
