If you answered “Yes,” this post won’t interest you.

If you answered “No,” read on:

Senator Mark Udall faces the question: “Would You Protect Nazi Torturers And Their Superiors?” as reported by Mike Masnick in:

Mark Udall’s Open To Releasing CIA Torture Report Himself If Agreement Isn’t Reached Over Redactions.

Mike writes in part:

As we were worried might happen, Senator Mark Udall lost his re-election campaign in Colorado, meaning that one of the few Senators who vocally pushed back against the surveillance state is about to leave the Senate. However, Trevor Timm pointed out that, now that there was effectively “nothing to lose,” Udall could go out with a bang and release the Senate Intelligence Committee’s CIA torture report. The release of some of that report (a redacted version of the 400+ page “executive summary” — the full report is well over 6,000 pages) has been in limbo for months since the Senate Intelligence Committee agreed to declassify it months ago. The CIA and the White House have been dragging out the process hoping to redact some of the most relevant info — perhaps hoping that a new, Republican-controlled Senate would just bury the report.

…

Mike details why Senator Udall’s recent reelection defeat makes release of the report, either in full or in summary, a distinct possibility.

In addition to Mike’s report, here is some additional information you may find useful:

Contact Information for Senator Udall

http://www.markudall.senate.gov/

Senator Mark Udall

Hart Office Building Suite SH-730

Washington, D.C. 20510

P: 202-224-5941

F: 202-224-6471

An informed electorate is essential to the existence of self-governance.

No less a figure than Thomas Jefferson spoke about the star chamber proceedings we now take for granted saying:

An enlightened citizenry is indispensable for the proper functioning of a republic. Self-government is not possible unless the citizens are educated sufficiently to enable them to exercise oversight. It is therefore imperative that the nation see to it that a suitable education be provided for all its citizens. It should be noted, that when Jefferson speaks of “science,” he is often referring to knowledge or learning in general.

“I know no safe depositary of the ultimate powers of the society but the people themselves; and if we think them not enlightened enough to exercise their control with a wholesome discretion, the remedy is not to take it from them, but to inform their discretion by education. This is the true corrective of abuses of constitutional power.” –Thomas Jefferson to William C. Jarvis, 1820. ME 15:278

“Every government degenerates when trusted to the rulers of the people alone. The people themselves, therefore, are its only safe depositories. And to render even them safe, their minds must be improved to a certain degree.” –Thomas Jefferson: Notes on Virginia Q.XIV, 1782. ME 2:207

“The most effectual means of preventing [the perversion of power into tyranny are] to illuminate, as far as practicable, the minds of the people at large, and more especially to give them knowledge of those facts which history exhibits, that possessed thereby of the experience of other ages and countries, they may be enabled to know ambition under all its shapes, and prompt to exert their natural powers to defeat its purposes.” –Thomas Jefferson: Diffusion of Knowledge Bill, 1779. FE 2:221, Papers 2:526

Jefferson didn’t have to contend with Middle East terrorists, only the English terrorizing the countryside. Since more Americans died in British prison camps than in the Revolution proper, I would say they were as bad as terrorists. (See: Prisoners of war in the American Revolutionary War.)

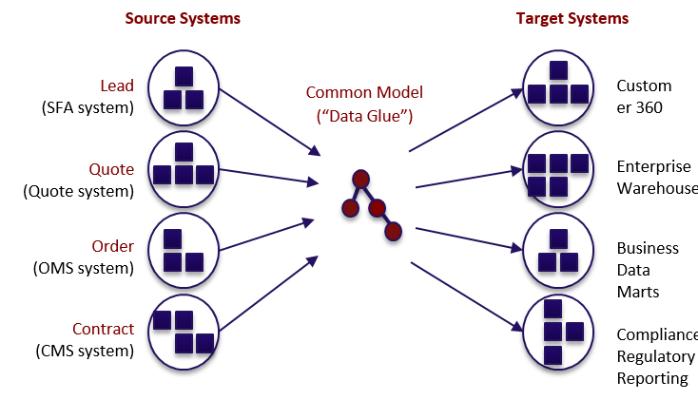

Noise about the CIA torture program post 9/11 is plentiful. But the electorate, that would be voters in the United States, lacks facts about the CIA torture program, its oversight (or lack thereof) and those responsible for torture, from top to bottom. There isn’t enough information to “connect the dots,” a common phrase in the intelligence community.

Connecting those dots is what could bring the accountability and transparency necessary to prevent torture from returning as an instrument of US policy.

Thirty retired generals are urging President Obama to declassify the Senate Intelligence Committee’s report on CIA torture, arguing that without accountability and transparency the practice could be resumed. (Even Generals in US Military Oppose CIA Torture)

Hiding the guilty will lead potential future torturers to expect that they too will get a free pass on torture.

Voters are responsible for turning out those who authorized the use of torture and for seeing that their subordinates are held accountable for their crimes. To do so, voters must have the information contained in the full CIA torture report.

Release of the Full CIA Torture Report: No Doom and Gloom

Senator Udall should ignore speculation that release of the full CIA torture report will “doom the nation.”

Poppycock!

There have been similar claims in the past and none of them, not one, has ever proven to be true. Here are some of the ones that I remember personally:

Others that I should add to this list?

Is Saying “Nazi” Inflammatory?

Is using the term “Nazi” inflammatory in this context? The only difference between CIA and Nazi torture is the government that ordered or tolerated the torture, unless you know of some other classification of torture. The United States military apparently doesn’t and I am willing to take their word for it.

Some will say the torturers were “serving the American people.” The same could be and was said by many a death camp guard for the Nazis. Wrapping yourself in a flag, any flag, does not put criminal activity beyond the reach of the law. It didn’t at Nuremberg and it should not work here.

Conclusion

A functioning democracy requires an informed electorate. Not elected officials, not a star chamber group, but an informed electorate. To date the American people lack details about illegal torture carried out by a government agency, the CIA. To exercise their rights and responsibilities as an informed electorate, American voters must have full access to the full CIA torture report.

Release of anything less than the full CIA torture report protects torture participants and their superiors. I have no interest in protecting those who engage in illegal activities nor their superiors. As an American citizen, do you?

Experience with prior “sensitive” reports indicates that despite the wailing and gnashing of teeth, the United States will not fall when the guilty are exposed, prosecuted and led off to jail. This case is no different.

As many retired US generals point out, transparency and accountability are the only ways to keep illegal torture from returning as an instrument of United States policy.

Is there any reason to wait until American torturers are in their nineties, suffering from dementia and living in New Jersey to hold them accountable for their crimes?

I don’t think so either.

PS: When Senator Udall releases the full CIA torture report in the Congressional Record (not to the New York Times or Wikileaks, both of which censor information for reasons best known to themselves), I hereby volunteer to assist in the extraction of names, dates, places and the association of those items with other, public data, both in topic map form as well as in other formats.

How about you?

PPS: On the relationship between Nazis and the CIA, see: Nazis Were Given ‘Safe Haven’ in U.S., Report Says. The special report that informed that article: The Office of Special Investigations: Striving for Accountability in the Aftermath of the Holocaust. (A leaked document)

When you compare Aryanism to American Exceptionalism, the similarities between the CIA and the Nazi regime are quite pronounced. How could any act that protects the fatherland/homeland be a crime?