First steps towards semantic descriptions of electronic laboratory notebook records by Simon J Coles, Jeremy G Frey, Colin L Bird, Richard J Whitby and Aileen E Day.

Abstract:

In order to exploit the vast body of currently inaccessible chemical information held in Electronic Laboratory Notebooks (ELNs) it is necessary not only to make it available but also to develop protocols for discovery, access and ultimately automatic processing. An aim of the Dial-a-Molecule Grand Challenge Network is to be able to draw on the body of accumulated chemical knowledge in order to predict or optimize the outcome of reactions. Accordingly the Network drew up a working group comprising informaticians, software developers and stakeholders from industry and academia to develop protocols and mechanisms to access and process ELN records. The work presented here constitutes the first stage of this process by proposing a tiered metadata system of knowledge, information and processing where each in turn addresses a) discovery, indexing and citation b) context and access to additional information and c) content access and manipulation. A compact set of metadata terms, called the elnItemManifest, has been derived and caters for the knowledge layer of this model. The elnItemManifest has been encoded as an XML schema and some use cases are presented to demonstrate the potential of this approach.

And the current state of electronic laboratory notebooks:

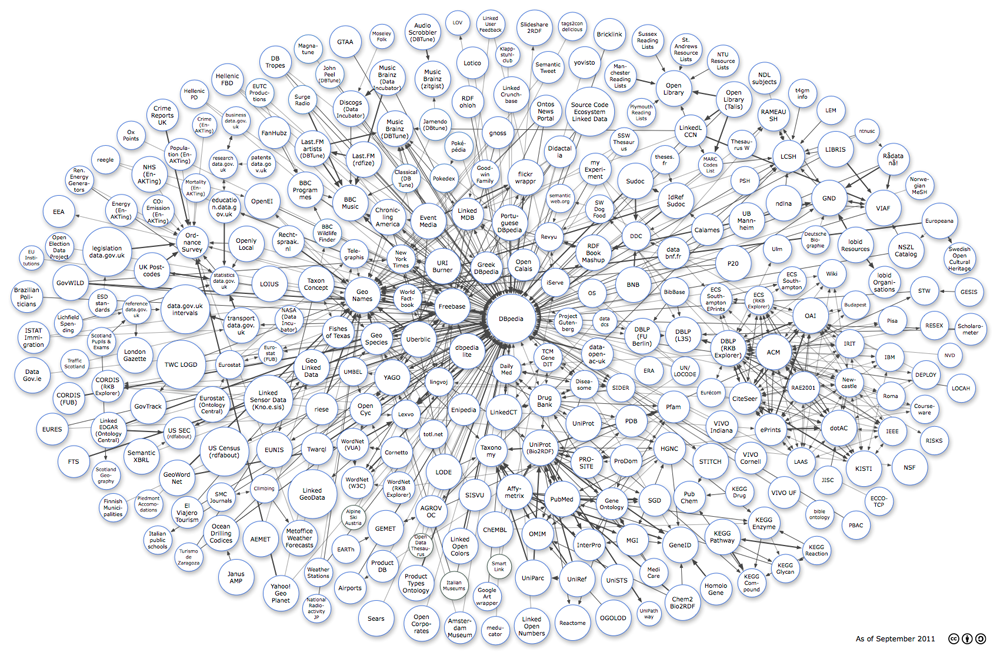

It has been acknowledged at the highest level [15] that “research data are heterogeneous, often classified and cited with disparate schema, and housed in distributed and autonomous databases and repositories. Standards for descriptive and structural metadata will help establish a common framework for understanding data and data structures to address the heterogeneity of datasets.” This is equally the case with the data held in ELNs. (citing: 15. US National Science Board report, Digital Research Data Sharing and Management, Dec 2011 Appendix F Standards and interoperability enable data-intensive science. http://www.nsf.gov/nsb/publications/2011/nsb1124.pdf, accessed 10/07/2013.)

It is trivially true that: “…a common framework for understanding data and data structures …[would] address the heterogeneity of datasets.”

Yes, yes, a common framework for data and data structures would solve the heterogeneity issues with datasets.

What is surprising is that no one had that idea up until now. 😉

I won’t recite the history of failed attempts at common frameworks for data and data structures here. To the extent that communities do adopt common practices or standards, those do help. Unfortunately there have never been any universal ones.

Or should I say there have never been any proposals for universal frameworks that succeeded in becoming universal? That’s more accurate. We have not lacked for proposals for universal frameworks.

That isn’t to say this is a bad proposal. But it will be only one of many proposals for the integration of electronic laboratory notebook records, which leaves the task of integrating those systems for integration still to be done.

BTW, if you are interested in further details, see the article and the XML schema at: http://www.dial-a-molecule.org/wp/blog/2013/08/elnitemmanifest-a-metadata-schema-for-accessing-and-processing-eln-records/.