‘Shocking Celebrity Nip Slips’: Secrets I Learned Writing Clickbait Journalism by Kate Lloyd.

I’m sat at a desk in a glossy London publishing house. On the floors around me, writers are working on tough investigations and hard news. I, meanwhile, am updating a feature called “Shocking celebrity nip-slips: boobs on the loose.” My computer screen is packed with images of tanned reality star flesh as I write captions in the voice of a strip club announcer: “Snooki’s nunga-nungas just popped out to say hello!” I type. “Whoops! Looks like Kim Kardashian forgot to wear a bra today!”

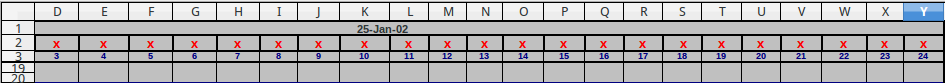

Back in 2013, I worked for a women’s celebrity news website. I stumbled into the industry at a time when online editors were panicking: Their sites were funded by advertisers who demanded that as many people as possible viewed stories. This meant writing things readers loved and shared, but also resorting to shadier tactics. With views dwindling, publications like mine often turned to the gospel of search engine optimisation, also known as SEO, for guidance.

…

Like making a deal with a highly-optimized devil, relying heavily on SEO to push readers to websites has a high moral price for publishers. When it comes to female pop stars and actors, people are often more likely to search for the celebrity’s name with the words “naked,” “boobs,” “butt,” “weight,” and “bikini” than with the names of their albums or movies. Since 2008, “Miley Cyrus naked” has been consistently Googled more than “Miley Cyrus music,” “Miley Cyrus album,” “Miley Cyrus show,” and “Miley Cyrus Instagram.” Plus, “Emma Watson naked” has been Googled more than “Emma Watson movie” since she was 15. In fact, “Emma Watson feet” gets more search traffic than “Emma Watson style,” which might explain why one women’s site has a fashion feature called “Emma Watson is an excellent foot fetish candidate.”

…

If you don’t know what other people are searching for, try these two Google Trends resources:

Hacking the Google Trends API (2014)

PyTrends – Pseudo API for Google Trends (Updated six days ago)

Depending on your sensibilities, you could collect content on celebrities into a topic map. When searches for a celebrity spike, you can release links to the new material and save readers the time of locating older content.
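As a rough illustration of the "when their searches spike" step, here is a minimal sketch in plain Python. It assumes you already have a list of weekly search-interest numbers for one name (for example, exported from Google Trends); the function name `find_spikes`, the window size, the threshold, and the sample numbers are all hypothetical choices for illustration, not anything taken from the resources above.

```python
from statistics import mean, stdev


def find_spikes(interest, window=4, threshold=3.0):
    """Return the indexes where a value rises well above its recent history.

    A value counts as a spike when it exceeds the mean of the previous
    `window` values by more than `threshold` standard deviations.
    """
    spikes = []
    for i in range(window, len(interest)):
        history = interest[i - window:i]
        baseline = mean(history)
        # Use a floor of 1.0 so a perfectly flat history (stdev 0)
        # does not turn every tiny wobble into a spike.
        spread = max(stdev(history), 1.0)
        if interest[i] > baseline + threshold * spread:
            spikes.append(i)
    return spikes


# Made-up weekly interest values for a single search term.
weekly_interest = [20, 22, 19, 21, 20, 23, 85, 40, 24, 22]
print(find_spikes(weekly_interest))
```

A trailing window keeps the baseline local, so a name that is always heavily searched does not register as a permanent spike. Whatever indexes come back could then be used to decide when to surface the topic map's links for that name.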

That might even be a viable business model.

Thoughts?