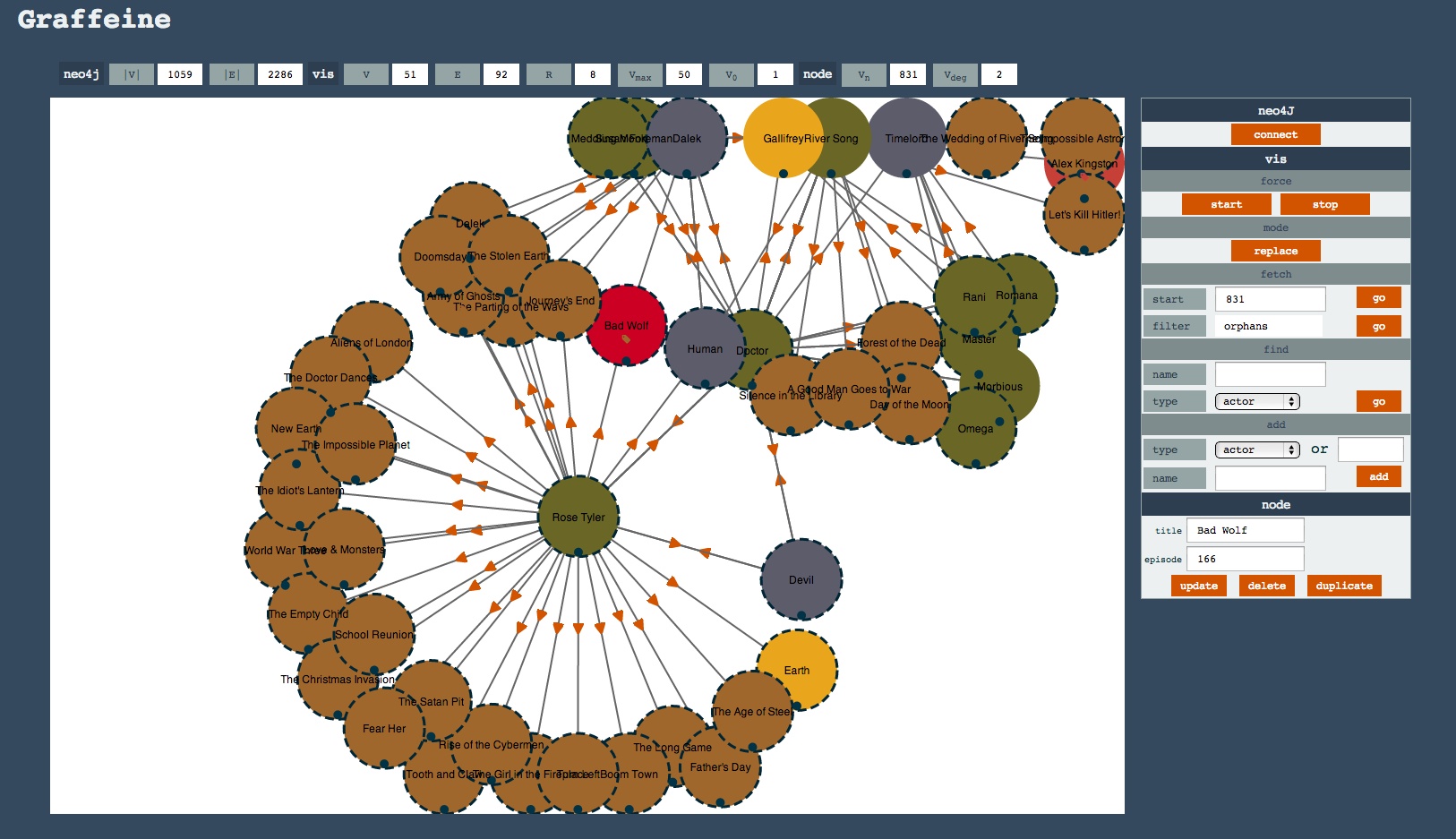

Querying Graphs with Neo4j by Michael Hunger.

Download the refcard by the usual process: log in to DZone, etc.

When you open the PDF file in a viewer, do be careful. (Page references are to the DZone cheatsheet.)

Cover: The entire cover is a download link. Touch it at all and you will be taken to a download page for Neo4j.

Page 1 covers “What is a Graph Database?” and “What is Neo4j?,” just in case you have been forced by home invaders to download a refcard for a technology you know nothing about.

Page 2 pitches the Neo4j server and then Getting Started with Neo4j, perhaps to annoy the NSA with repetitive content.

The DZone cheatsheet replicates the cheatsheet at: http://neo4j.com/docs/2.0/cypher-refcard/, with the following changes:

Page 3

WITH

Re-written. Old version:

MATCH (user)-[:FRIEND]-(friend) WHERE user.name = {name} WITH user, count(friend) AS friends WHERE friends > 10 RETURN user

The WITH syntax is similar to RETURN. It separates query parts explicitly, allowing you to declare which identifiers to carry over to the next part.

MATCH (user)-[:FRIEND]-(friend) WITH user, count(friend) AS friends ORDER BY friends DESC SKIP 1 LIMIT 3 RETURN user

You can also use ORDER BY, SKIP, LIMIT with WITH.

New version:

MATCH (user)-[:KNOWS]-(friend) WHERE user.name = {name} WITH user, count(*) AS friends WHERE friends > 10 RETURN user

WITH chains query parts. It allows you to specify which projection of your data is available after WITH.

You can also use ORDER BY, SKIP, LIMIT and aggregation with WITH. You might have to alias expressions to give them a name.

I leave it to your judgement which version was the clearer.
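For anyone who wants to see the aliasing remark from the new text in practice, here is a minimal sketch. The :Person label and the name property are assumptions for illustration only; neither version of the cheatsheet specifies a data model.

```cypher
// Assumed data model (hypothetical): (:Person {name})-[:KNOWS]-(:Person)
MATCH (user:Person)-[:KNOWS]-(friend)
// count(*) is an expression, so it needs an alias to be carried past WITH
WITH user, count(*) AS friends
// ORDER BY and LIMIT apply to the rows that WITH passes along
ORDER BY friends DESC
LIMIT 3
RETURN user.name, friends
```

Only user and friends are visible after the WITH; friend is no longer in scope.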

Page 4

MERGE – inserts: typo “{name: {value3}} )” on last line of final example under MERGE.

SET – inserts: “SET n += {map} Add and update properties, while keeping existing ones.”

INDEX – inserts: “MATCH (n:Person) WHERE n.name IN {values} An index can be automatically used for the IN collection checks.”
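The SET entry above is easier to appreciate with an example. This is a sketch only: the node and its properties are hypothetical, and it uses a literal map where the cheatsheet uses the {map} parameter.

```cypher
// Hypothetical node: (:Person {name: 'Ann', age: 30})
MATCH (n:Person {name: 'Ann'})
// += updates age and adds city; name is left untouched
SET n += {age: 31, city: 'Oslo'}
RETURN n
```

By contrast, SET n = {map} replaces all existing properties on the node with those in the map.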

Page 5

PATTERNS

– changes: “(n)-[*1..5]->(m) Variable length paths.” to “(n)-[*1..5]->(m) Variable length paths can span 1 to 5 hops.”

– changes: “(n)-[*]->(m) Any depth. See the performance tips.” to “(n)-[*]->(m) Variable length path of any depth. See performance tips.”

– changes: “shortestPath((n1:Person)-[*..6]-(n2:Person)) Find a single shortest path.” to “shortestPath((n1)-[*..6]-(n2))”

COLLECTIONS

– changes: “range({first_num},{last_num},{step}) AS coll Range creates a collection of numbers (step is optional), other functions returning collections are: labels, nodes, relationships, rels, filter, extract.” to “range({from},{to},{step}) AS coll Range creates a collection of numbers (step is optional).” [Loss of information from the earlier version.]

– inserts: “UNWIND {names} AS name MATCH (n:Person {name:name}) RETURN avg(n.age) With UNWIND, you can transform any collection back into individual rows. The example matches all names from a list of names.”
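A small sketch of the UNWIND entry, with a literal list standing in for the {names} parameter. The :Person label and the age property come from the cheatsheet example; the names themselves are made up.

```cypher
// Literal list used in place of the {names} parameter
UNWIND ['Ann', 'Bob', 'Cid'] AS name
// Each element is now its own row, so it can drive a MATCH
MATCH (n:Person {name: name})
RETURN name, n.age
```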

MAPS

– inserts: “range({start},{end},{step}) AS coll Range creates a collection of numbers (step is optional).”

Page 6

PREDICATES

– changes: “NOT (n)-[:KNOWS]->(m) Exclude matches to (n)-[:KNOWS]->(m) from the result.” to “NOT (n)-[:KNOWS]->(m) Make sure the pattern has at least one match.” [Older version more precise?]

– replaces: mixed case, true/TRUE with TRUE
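On the NOT entry above: a negated pattern predicate keeps a row only when the pattern has no match, which is what the older wording describes. A minimal sketch, assuming hypothetical :Person nodes linked by :KNOWS relationships:

```cypher
// Hypothetical data model: (:Person {name}) nodes linked by :KNOWS
MATCH (n:Person), (m:Person)
// Keep only the pairs where n has no outgoing KNOWS relationship to m
WHERE n <> m AND NOT (n)-[:KNOWS]->(m)
RETURN n.name, m.name
```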

FUNCTIONS

– inserts: “toInt({expr}) Converts the given input in an integer if possible; otherwise it returns NULL.”

– inserts: “toFloat({expr}) Converts the given input in a floating point number if possible; otherwise it returns NULL.”
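A quick way to try the two conversion functions, using literal strings of my own choosing rather than anything from the cheatsheet:

```cypher
// Strings that parse are converted; a string that does not parse yields NULL
RETURN toInt('42') AS i, toFloat('3.14') AS f, toInt('forty-two') AS bad
```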

PATH FUNCTIONS

– changes: “MATCH path = (begin) -[*]-> (end) FOREACH (n IN rels(path) | SET n.marked = TRUE) Execute a mutating operation for each relationship of a path.” to “MATCH path = (begin) -[*]-> (end) FOREACH (n IN rels(path) | SET n.marked = TRUE) Execute an update operation for each relationship of a path.”

COLLECTION FUNCTIONS

– changes: “FOREACH (value IN coll | CREATE (:Person {name:value})) Execute a mutating operation for each element in a collection.” to “FOREACH (value IN coll | CREATE (:Person {name:value})) Execute an update operation for each element in a collection.”

MATHEMATICAL FUNCTIONS

– changes: “degrees({expr}), radians({expr}), pi() Converts radians into degrees, use radians for the reverse. pi for π.” to “degrees({expr}), radians({expr}), pi() to Converts radians into degrees, use radians for the reverse.” Loses “pi for π.”

– changes: “log10({expr}), log({expr}), exp({expr}), e() Logarithm base 10, natural logarithm, e to the power of the parameter. Value of e.” to “log10({expr}), log({expr}), exp({expr}), e() Logarithm base 10, natural logarithm, e to the power of the parameter.” Loses “Value of e.”

Page 7

STRING FUNCTIONS

– inserts: “split({string}, {delim}) Split a string into a collection of strings.”
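For illustration, split with a literal string and delimiter (both are my own placeholders for the {string} and {delim} parameters):

```cypher
// Splits on each comma and returns a collection of three strings
RETURN split('a,b,c', ',') AS parts
```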

AGGREGATION

– changes: “collect(n.property) Collection from the values, ignores NULL.” to “collect(n.property) Value collection, ignores NULL.”

START

– removes: “START n=node(*) Start from all nodes.”

– removes: “START n=node({ids}) Start from one or more nodes specified by id.”

– removes: “START n=node({id1}), m=node({id2}) Multiple starting points.”

– removes: “START n=node:nodeIndexName(key={value}) Query the index with an exact query. Use node_auto_index for the automatic index.”

– inserts: “START n = node:indexName(key={value}) n=node:nodeIndexName(key={value}) n=node:nodeIndexName(key={value}) Query the index with an exact query. Use node_auto_index for the old automatic index.”

– inserts: ‘START n = node:indexName({query}) Query the index by passing the query string directly, can be used with lucene or spatial syntax. E.g.: “name:Jo*” or “withinDistance:[60,15,100]”’

I may have missed some changes because, as you know, the “cheatsheets” for Cypher have no particular order for the entries. Alphabetical order suggests itself for future editions, sans the marketing materials.

Changes to a query language should appear where a user would expect to find the command in question. For example, the notice “CREATE a={property:'value'} has been removed” should appear where expected on the cheatsheet, noting the change. Users should not have to hunt high and low for “CREATE a={property:'value'}” on a cheatsheet.

I have passed over incorrect use of the definite article and other problems without comment.

Despite the shortcomings of the DZone refcard, I suggest that you upgrade to it.