Evaluating standard terminologies for encoding allergy information by Foster R Goss, Li Zhou, Joseph M Plasek, Carol Broverman, George Robinson, Blackford Middleton, Roberto A Rocha. (J Am Med Inform Assoc doi:10.1136/amiajnl-2012-000816)

Abstract:

Objective Allergy documentation and exchange are vital to ensuring patient safety. This study aims to analyze and compare various existing standard terminologies for representing allergy information.

Methods Five terminologies were identified, including the Systemized Nomenclature of Medical Clinical Terms (SNOMED CT), National Drug File–Reference Terminology (NDF-RT), Medication Dictionary for Regulatory Activities (MedDRA), Unique Ingredient Identifier (UNII), and RxNorm. A qualitative analysis was conducted to compare desirable characteristics of each terminology, including content coverage, concept orientation, formal definitions, multiple granularities, vocabulary structure, subset capability, and maintainability. A quantitative analysis was also performed to compare the content coverage of each terminology for (1) common food, drug, and environmental allergens and (2) descriptive concepts for common drug allergies, adverse reactions (AR), and no known allergies.

Results Our qualitative results show that SNOMED CT fulfilled the greatest number of desirable characteristics, followed by NDF-RT, RxNorm, UNII, and MedDRA. Our quantitative results demonstrate that RxNorm had the highest concept coverage for representing drug allergens, followed by UNII, SNOMED CT, NDF-RT, and MedDRA. For food and environmental allergens, UNII demonstrated the highest concept coverage, followed by SNOMED CT. For representing descriptive allergy concepts and adverse reactions, SNOMED CT and NDF-RT showed the highest coverage. Only SNOMED CT was capable of representing unique concepts for encoding no known allergies.

Conclusions The proper terminology for encoding a patient’s allergy is complex, as multiple elements need to be captured to form a fully structured clinical finding. Our results suggest that while gaps still exist, a combination of SNOMED CT and RxNorm can satisfy most criteria for encoding common allergies and provide sufficient content coverage.

Interesting article but some things that may not be apparent to the casual reader:

MedDRA:

The Medical Dictionary for Regulatory Activities (MedDRA) was developed by the International Conference on Harmonisation (ICH) and is owned by the International Federation of Pharmaceutical Manufacturers and Associations (IFPMA) acting as trustee for the ICH steering committee. The Maintenance and Support Services Organization (MSSO) serves as the repository, maintainer, and distributor of MedDRA as well as the source for the most up-to-date information regarding MedDRA and its application within the biopharmaceutical industry and regulators. (source: http://www.nlm.nih.gov/research/umls/sourcereleasedocs/current/MDR/index.html)

The UMLS Metathesaurus carries MedDRA translations into: Czech, Dutch, French, German, Hungarian, Italian, Japanese, Portuguese, and Spanish.

Unique Ingredient Identifier (UNII)

The overall purpose of the joint FDA/USP Substance Registration System (SRS) is to support health information technology initiatives by generating unique ingredient identifiers (UNIIs) for substances in drugs, biologics, foods, and devices. The UNII is a non-proprietary, free, unique, unambiguous, non semantic, alphanumeric identifier based on a substance’s molecular structure and/or descriptive information.

…

The UNII may be found in:

- NLM’s Unified Medical Language System (UMLS)

- National Cancer Institute’s Enterprise Vocabulary Service

- USP Dictionary of USAN and International Drug Names (future)

- FDA Data Standards Council website

- VA National Drug File Reference Terminology (NDF-RT)

- FDA Inactive Ingredient Query Application

(source: http://www.fda.gov/ForIndustry/DataStandards/SubstanceRegistrationSystem-UniqueIngredientIdentifierUNII/)

National Drug File – Reference Terminology (NDF-RT)

The National Drug File – Reference Terminology (NDF-RT) is produced by the U.S. Department of Veterans Affairs, Veterans Health Administration (VHA).

…

NDF-RT combines the NDF hierarchical drug classification with a multi-category reference model. The categories are:

- Cellular or Molecular Interactions [MoA]

- Chemical Ingredients [Chemical/Ingredient]

- Clinical Kinetics [PK]

- Diseases, Manifestations or Physiologic States [Disease/Finding]

- Dose Forms [Dose Form]

- Pharmaceutical Preparations

- Physiological Effects [PE]

- Therapeutic Categories [TC]

- VA Drug Interactions [VA Drug Interaction]

(source: http://www.nlm.nih.gov/research/umls/sourcereleasedocs/current/NDFRT/)

MedDRA, UNII, and NDF-RT have been in use for years, MedDRA internationally and in multiple languages. An uncounted number of medical records, histories, and no doubt publications rely upon these vocabularies.

Assume the conclusion is correct: SNOMED CT combined with RxNorm (which links drug vocabularies) provides the best coverage for “encoding common allergies.”

A critical question remains:

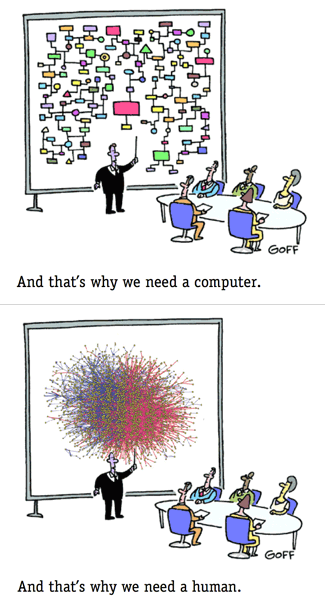

How to access medical records using other terminologies?

Recall from the adventures of owl:sameAs (The Semantic Web Is Failing — But Why? (Part 5)) that any single string identifier is subject to multiple interpretations, interpretations that can only be disambiguated by additional information.

You might present a search engine with string-to-string mappings, but those are inherently less robust and harder to maintain than richer mappings.

The sort of richer mappings that are supported by topic maps.
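To make the contrast concrete, here is a minimal Python sketch of the difference between a bare string-to-string mapping and a mapping that keeps additional information with each identifier. It is an illustration only, not a topic map engine: the `Identifier` and `Subject` classes and the `merge_subjects` and `find` functions are hypothetical names invented for this post, and every code shown (`FAKE-001` and friends) is a placeholder, not a real SNOMED CT, RxNorm, or MedDRA code.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Identifier:
    """A code qualified by the terminology that issued it."""
    terminology: str  # e.g. "SNOMED CT"; the codes used below are placeholders
    code: str


@dataclass
class Subject:
    """A subject (here, an allergen) plus every identifier known for it."""
    label: str
    identifiers: set = field(default_factory=set)


def merge_subjects(subjects):
    """Merge subjects that share at least one qualified identifier."""
    merged = []
    for subject in subjects:
        ids = set(subject.identifiers)
        label = subject.label
        keep = []
        for existing in merged:
            if existing.identifiers & ids:
                # Same subject seen before: absorb its identifiers and label.
                ids |= existing.identifiers
                label = existing.label
            else:
                keep.append(existing)
        keep.append(Subject(label, ids))
        merged = keep
    return merged


def find(subjects, terminology, code):
    """Look a subject up by any one of its qualified identifiers."""
    wanted = Identifier(terminology, code)
    for subject in subjects:
        if wanted in subject.identifiers:
            return subject
    return None


# A bare string mapping: the same string from two terminologies collides,
# and the second assignment silently overwrites the first.
flat = {}
flat["FAKE-001"] = "penicillin"  # placeholder code from one terminology
flat["FAKE-001"] = "peanut"      # same string, different terminology

# Qualified identifiers keep the two apart and let records merge.
records = [
    Subject("penicillin", {Identifier("SNOMED CT", "FAKE-001"),
                           Identifier("RxNorm", "FAKE-002")}),
    Subject("penicillin (legacy record)", {Identifier("RxNorm", "FAKE-002"),
                                           Identifier("MedDRA", "FAKE-003")}),
    Subject("peanut", {Identifier("MedDRA", "FAKE-001")}),
]
merged = merge_subjects(records)
```

With this in place, `find(merged, "MedDRA", "FAKE-003")` reaches the same subject as `find(merged, "SNOMED CT", "FAKE-001")`, so a record encoded in an older terminology stays reachable. Merging on shared identifiers is only one possible rule; a real topic map would let you state the merging rule and the properties behind it explicitly.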