No, not that sort of moon shot!

Steve O’Keeffe writes in “Death and Rebirth?”:

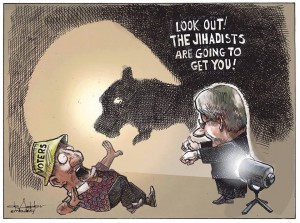

The OPM breach – and the subsequent Cyber Sprint – may be just the jolt we need to euthanize our geriatric Fed IT. According to Tony Scott and GAO at this week’s FITARA Forum, we spend more than 80 percent of the $80 billion IT budget on operations and maintenance for legacy systems. You see with the Cyber Sprint we’ve been looking hard at how to secure our systems. And, the simple truth of the matter is – it’s impossible. It’s impossible to apply two-factor authentication to systems and applications built in the ’60s, ’70s, ’80s, ’90s, and naughties.

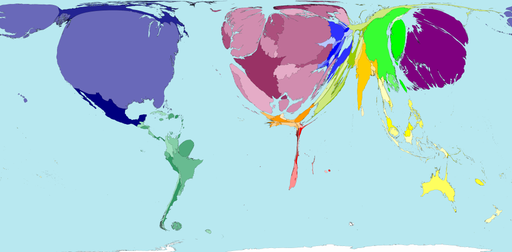

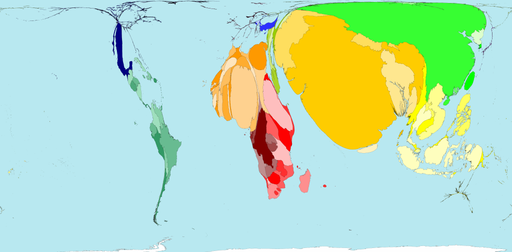

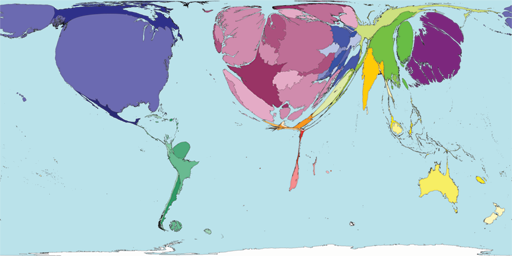

Here’s an opportunity for real leadership – to move away from advocating for incremental change, like Cloud First, Mobile First, FDCCI, HSPD-12, TIC, etc. These approaches have clearly failed us. Now’s the time for a moon shot in government IT – a digital Interstate Highway program. I’m going to call this .usa 2020 – the idea to completely replace our aging Federal IT infrastructure by 2020. You see, IT is the highway artery system that connects America today. I’m proposing that we take inspiration from the OPM disaster – and the next cyber disaster lurking oh so inevitably around the next corner – to undertake a mainstream modernization of the Federal government’s IT infrastructure and applications. It’s not about transformation, it’s about death and rebirth.

To be clear, this is not simply about moving to the cloud. It’s about really reinventing government IT. It’s not just that our Federal IT systems are decrepit and insecure – it’s about the fact they’re dysfunctional. How can it be that the top five addresses in America received 4,900 tax refunds in 2014? How did a single address in Lithuania get 699 tax refunds? How can we have 777 supply chain systems in the Federal government?

…

You can’t see it but when Steve asked the tax refunds question, my hand looked like a helicopter blade in the air. 😉 I know the answer to that question.

First, the IRS estimates 249 million tax returns will be filed in 2015.

Second, in addition to IT reductions, the IRS is required by law to “pay first, ask questions later,” and to deliver refunds within thirty (30) days. See: “Tax-refund fraud to hit $21 billion, and there’s little the IRS can do.”

I agree that federal IT systems could be improved, but if funds are not available for present systems, what are the odds of adequate funding being available for a complete overhaul?

BTW, the “80 percent of the $80 billion IT budget” works out to about $64 billion. If you were getting part of that $64 billion now, how hard would you resist changes that eliminated your part of that $64 billion?

Bear in mind that elimination of legacy systems also means users of those legacy systems will have to be re-trained on the replacement systems. We all know how popular forsaking legacy applications is among users.

As a practical matter, rip-n-replace proposals buy you virulent opposition from people currently enjoying $64 billion in payments every year and the staffers who use those legacy systems.

On the other hand, layering solutions, like topic maps, buy you support from people currently enjoying $64 billion in payments every year and the staffers who use those legacy systems.

Being a bright, entrepreneurial sort of person, which option are you going to choose?