Saturday’s data dump from St. Louis County Prosecutor Robert McCulloch is still missing at least two critical pieces of evidence. There is no copy of the “documents that we gave you to help in your deliberation.” Nor is there a copy of the police map to “…guide the grand jury.”

I. The “documents that we gave you to help in your deliberation”

The prosecutors gave the grand jury written documents that supplemented their various oral misstatements of the law in this case.

From Volume 24 - November 21, 2014 - Page 138:

...

2 You have all the information you need in

3 those documents that we gave you to help in your

4 deliberation.

...

That statement follows a verbal misstatement of the law by Ms. Whirley:

Volume 24 - November 21, 2014 - Page 137

...

13 MS. WHIRLEY: Is that in order to vote

14 true bill, you also must consider whether you

15 believe Darren Wilson, you find probable cause,

16 that's the standard to believe that Darren Wilson

17 committed the offense and the offenses are what is

18 in the indictment and you must find probable cause

19 to believe that Darren Wilson did not act in lawful

20 self—defense, and you've got the last sheet talks

21 about self—defense and talks about officer's use of

22 force, because then you must also have probable

23 cause to believe that Darren Wilson did not use

24 lawful force in making an arrest. So you are

25 considering self—defense and use of force in making

Volume 24 - November 21, 2014 - Page 138

Grand Jury — Ferguson Police Shooting Grand Jury 11/21/2014

1 an arrest.

...

Where are the “documents that we gave you to help in your deliberation?”

Have you seen those documents? I haven’t.

And consider this additional misstatement of the law:

Volume 24 - November 21, 2014 - Page 139

...

8 And the one thing that Sheila has

9 explained as far as what you must find and as she

10 said, it is kind of in Missouri it is kind of, the

11 State has to prove in a criminal trial, the State

12 has to prove that the person did not act in lawful

13 self—defense or did not use lawful force in making,

14 it is kind of like we have to prove the negative.

15 So in this case because we are talking

16 about probable cause, as we've discussed, you must

17 find probable cause to believe that he committed the

18 offense that you're considering and you must find

19 probable cause to believe that he did not act in

20 lawful self—defense. Not that he did, but that he

21 did not and that you find probable cause to believe

22 that he did not use lawful force in making the

23 arrest.

...

Just for emphasis:

the State has to prove that the person did not act in lawful self—defense or did not use lawful force in making, it is kind of like we have to prove the negative.

How hard is it to prove a negative? James Randi, in “James Randi Lecture @ Caltech – Cant Prove a Negative,” points out that proving a negative is a logical impossibility.

The grand jury was given a logically impossible task in order to indict Darren Wilson.

What choice did the grand jury have but to return a “no true bill?”

II. More Misguidance: The police map (Grand Jury 101)

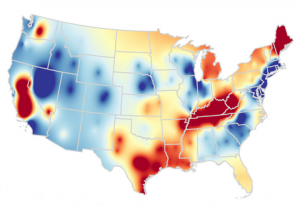

A police map was created to guide the jury in its deliberations, a map that reflected the police view of the location of witnesses.

Volume 24 - November 21, 2014 - Page 26


...

10 Q (By Ms. Alizadeh) Extra, okay, that's

11 right. And you indicated that you, along with other

12 investigators prepared this, which is your

13 interpretation based upon the statements made of

14 witnesses as to where various eyewitnesses were

15 during, when I say shooting, obviously, there was a

16 time period that goes along, the beginning of the

17 time of the beginning of the incident until after

18 the shooting had been done. And do you still feel

19 that this map accurately reflects where witnesses

20 said they were?

21 A I do.

22 Q And just for your instruction, this just,

23 this map is for your purposes in your deliberations

24 and if you disagree with anything that's on the map,

25 these little sticky things come right off. So

Volume 24 - November 21, 2014 - Page 27


1 supposedly they come right off.

2 A They do.

3 Q If you feel that this witness is not in

4 the right place, you can move any of these stickers

5 that you want and put them in the places where you

6 think they belong.

7 This is just something that is

8 representative of what this witness believes where

9 people were. If you all do with this what you will.

10 Also there was a legend that was

11 provided for all of you regarding the numbers

12 because the numbers that were assigned witnesses are

13 not the same numbers as the witnesses testimony in

14 this grand jury.

...

Two critical statements:

11... And you indicated that you, along with other

12 investigators prepared this, which is your

13 interpretation based upon the statements made of

14 witnesses as to where various eyewitnesses were

15 during, when I say shooting,

So the map represents the detective’s opinion about where other witnesses were, and:

3 Q If you feel that this witness is not in

4 the right place, you can move any of these stickers

5 that you want and put them in the places where you

6 think they belong.

The witness gave the grand jury a map to guide its deliberations, but we will never know what that map showed, because the stickers could be moved.

Pretty neat trick, giving the grand jury guidance that can never be disclosed to others.

Summary:

You may have seen this account of the latest data dump from the prosecutor’s office:

McCulloch apologized in a written statement for any confusion that may have occurred by failing to initially release all of the interview transcripts. He said he believes he has now released all of the grand jury evidence, except for photos of Brown’s body and anything that could lead to witnesses being identified.

The written instructions to the grand jury and the now unknowable map (Grand Jury 101) aren’t pictures of Brown’s body or anything that could identify a witness. Where are they?

Please make a donation to support further research on the grand jury proceedings concerning Michael Brown. Future work will include:

- A witness index to the grand jury transcripts

- An exhibit index to the grand jury transcripts

- Analysis of the grand jury transcript for patterns by the prosecuting attorneys, both expected and unexpected

- A concordance of the grand jury transcripts

- Suggestions?

Donations will enable continued analysis of the grand jury transcripts, which, along with other evidence, may establish a pattern of conduct that was not happenstance or coincidence but was in fact enemy action.

Thanks for your support!

Other Michael Brown Posts

Missing From Michael Brown Grand Jury Transcripts December 7, 2014. (The witness index I propose to replace.)

New recordings, documents released in Michael Brown case [LA Times Asks If There’s More?] Yes! December 9, 2014 (before the latest document dump on December 14, 2014).

Michael Brown Grand Jury – Presenting Evidence Before Knowing the Law December 10, 2014.

How to Indict Darren Wilson (Michael Brown Shooting) December 12, 2014.

More Missing Evidence In Ferguson (Michael Brown) December 15, 2014. (above)