The Debunking Handbook by John Cook and Stephan Lewandowsky.

From the post:

The Debunking Handbook, a guide to debunking misinformation, is now freely available to download. Although there is a great deal of psychological research on misinformation, there’s no summary of the literature that offers practical guidelines on the most effective ways of reducing the influence of myths. The Debunking Handbook boils the research down into a short, simple summary, intended as a guide for communicators in all areas (not just climate) who encounter misinformation.

The Handbook explores the surprising fact that debunking myths can sometimes reinforce the myth in people’s minds. Communicators need to be aware of the various backfire effects and how to avoid them, such as the Familiarity Backfire Effect, the Overkill Backfire Effect, and the Worldview Backfire Effect.

It also looks at a key element of successful debunking: providing an alternative explanation. The Handbook is designed to be useful to all communicators who have to deal with misinformation (i.e., not just climate myths).

I think you will find this a delightful read! From the first section, titled “Debunking the first myth about debunking”:

It’s self-evident that democratic societies should base their decisions on accurate information. On many issues, however, misinformation can become entrenched in parts of the community, particularly when vested interests are involved [1,2]. Reducing the influence of misinformation is a difficult and complex challenge.

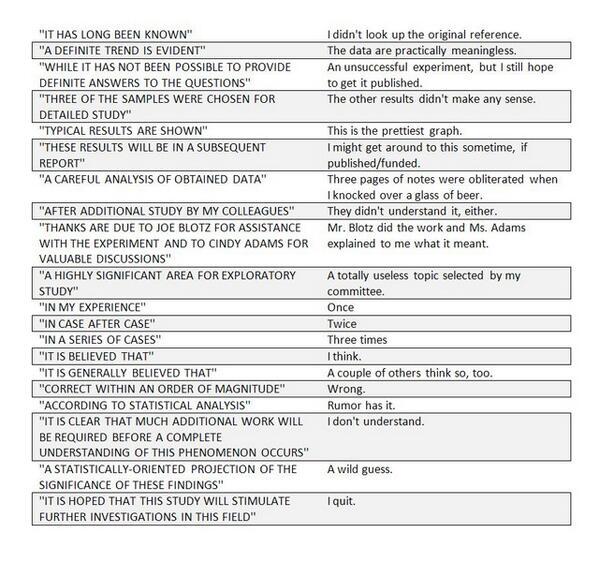

A common misconception about myths is the notion that removing its influence is as simple as packing more information into people’s heads. This approach assumes that public misperceptions are due to a lack of knowledge and that the solution is more information – in science communication, it’s known as the “information deficit model”. But that model is wrong: people don’t process information as simply as a hard drive downloading data.

Refuting misinformation involves dealing with complex cognitive processes. To successfully impart knowledge, communicators need to understand how people process information, how they modify their existing knowledge and how worldviews affect their ability to think rationally. It’s not just what people think that matters, but how they think.

I would have accepted the first sentence had it read: It’s self-evident that democratic societies don’t base their decisions on accurate information.

I don’t know of any historical examples of democracies making decisions on accurate information.

For example, there are any number of “rational” and well-meaning people who have signed off on the “war on terrorism” as though the United States is in any danger.

Deaths from terrorism in the United States since 2001 – fourteen (14).

Deaths by entanglement in bed sheets between 2001 and 2009 – five thousand five hundred and sixty-one (5561).

From: “How Scared of Terrorism Should You Be?” and “Number of people who died by becoming tangled in their bedsheets.”

Despite being a great read, Debunking has a problem: it presumes you are dealing with a “rational” person. Rational as defined by… as defined by what? Hard to say. The word is mentioned only once, and I suspect “rational” means that you agree with debunking the climate “myth.” I do as well, but that’s happenstance and not because I am “rational” in some undefined way.

Realize that “rational” is a favorable label people apply to themselves and little more than that. It rather conveniently makes anyone who disagrees with you “irrational.”

I prefer to use “persuasion” on topics like global warming. You can use “facts” for people who are amenable to that approach, but also religion (stewards of the environment), greed (exploitation of the Third World for carbon credits), financial interest in government-funded programs, or whatever works to persuade enough people to support your climate change program. Be aware that other people with other agendas are going to be playing the same game. The question is whether you want to be “rational” or you want to win.

Personally I am convinced of climate change and our role in causing it. I am also aware of the difficulty of sustaining action by people with an average attention span of fifteen (15) seconds over the period of the fifty (50) years it will take for the environment to stabilize if all human inputs stopped tomorrow. It’s going to take far more than “facts” to obtain a better result.