NoSQL Matters 2013 (Video/Slides)

A great set of videos but in no particular order in the original listing. I ordered them by the author’s last name for quick scanning.

Unless otherwise noted, the titles link to videos at Vimeo. Abstracts follow each title when available.

Enjoy!

Pavlo Baron – 100% Big Data, 0% Hadoop, 0% Java

“If your data is big enough, Hadoop it!” That’s simply not true – there is much more behind this term than just a tool. In this talk I will show one possible, practically working approach and the corresponding selection of tools that help collect, mine, move around, store and provision large amounts of unstructured data. Completely without Hadoop. And even completely without Java.

Pere Urbón Bayes – From Tables to Graph. Recommendation Systems, a Graph Database Use Case (No video, slides)

Recommendation engines have changed a lot during the last years and the last big change is NoSQL, especially Graph Databases. With this presentation we intend to show how to build a Graph Processing technology, based on our experience in doing that for environments like Digital Libraries and Movies and Digital Media. First, we will introduce the state of the art on context aware Recommendation Engines, with special interest on how people are using Graph Processing, NoSQL, systems to scale this kind of solutions. After an introduction to the ecosystem, the next step is to have something to work with. So we will show the audience how to build a Recommendation Engine with a few steps.

The demonstration part will use the following technology stack: Sinatra as a simple web framework. Ruby as a programming language. OrientDB, Neo4j, Redis, etc. as a NoSQL technology stack. The result of our demonstration will be a simple engine, accessible through a REST API, to play with and extend, so that attendees can learn by doing.

In the end our audience will have a full introduction to the field of Recommendation Engines, with special interest on Graph Processing, NoSQL, systems. Based on our experience making this technology for large scale architectures, we think the best way to learn this is by doing it and having an example to play with.

Nic Caine – Leveraging InfiniteGraph for Big Data

Slides

Practical insight! Graph databases could help institutions designed for research & development in healthcare and life sciences manage Big Data sets. Researchers gain access to a new level of healthcare and pharmaceutical data analytics.

This talk explains the challenges developers face in detecting relationships and similar cross-link interactions within data analysis. Graph database technology can give the answer that nobody asked for before!

William Candillon – JSONiq – The SQL of NoSQL

Slides

SQL has been good to the relational world, but what about query languages in the NoSQL space?

We introduce JSONiq: the SQL of NoSQL.

Like SQL, JSONiq enables developers to leverage the same productive high-level language across a variety of products.

But this is not your grandma’s SQL; it supports novel concepts purposely designed for flexible data.

Moreover, JSONiq is a highly optimizable language to query and update NoSQL stores.

We show how JSONiq can be used on top of products such as MongoDB, CouchBase, and DynamoDB.

Aparna Chaudhary – Look Ma! No more Blobs

Slides

GridFS is a storage mechanism for persisting large objects in MongoDB. The talk will cover a use case of content management using MongoDB. During the talk I will explain why we chose MongoDB over a traditional relational database to store XML files. The talk will be accompanied by a live demo using Spring Data & GridFS.

Sean Cribbs – Data Structures in Riak

Slides

Since the beginning, Riak has supported high write-availability using Dynamo-style multi-valued keys – also known as conflicts or siblings. The tradeoff for this type of availability is that the application must include logic to resolve conflicting updates. While it is convenient to say that the application can reason best about conflicts, ad hoc resolution is error-prone and can result in surprising anomalies, like the reappearing item problem in Dynamo’s shopping cart.

What is needed is a more formal and general approach to the problem of conflict resolution for complex data structures. Luckily, there are some formal strategies in recent literature, including Conflict-Free Replicated Data Types (CRDTs) and BloomL lattices. We’ll review these strategies and cover some recent work we’ve done toward adding automatically-convergent data structures to Riak.
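As a rough illustration of what “automatically-convergent” means (my own sketch, not code from the talk or from Riak), here is a grow-only counter, one of the simplest CRDTs. The class name `GCounter` and the replica ids are hypothetical. Each replica only increments its own slot, and a merge takes the per-replica maximum, so replicas converge no matter in which order merges happen.

```python
class GCounter:
    """Grow-only counter CRDT: one slot per replica, merged by per-slot max."""

    def __init__(self, replica_id):
        self.replica_id = replica_id
        self.counts = {}  # replica id -> increments observed from that replica

    def increment(self, amount=1):
        if amount < 0:
            raise ValueError("a G-Counter can only grow")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def value(self):
        # The counter's value is the sum over all replica slots.
        return sum(self.counts.values())

    def merge(self, other):
        # Per-slot max is commutative, associative and idempotent,
        # which is what makes the merge conflict-free.
        for rid, n in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), n)


# Two replicas accept writes independently, then exchange state.
a, b = GCounter("a"), GCounter("b")
a.increment(2)
b.increment(3)
a.merge(b)
b.merge(a)
```

After the exchange both replicas report the same value, and merging the same state again changes nothing. No application-level conflict resolution is needed.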

David Czarnecki – Real-World Redis

Slides

Redis is a data structure server, but yet all too often, it is used to do simple data caching. This is not because its internal data structures are not powerful, but I believe, because they require libraries which wrap the functionality into something meaningful for modeling a particular problem or domain. In this talk, we will cover 3 important use cases for Redis data structures that are drawn from real-world experience and production applications handling millions of users and GB of data:

Leaderboards – also known as scoreboards or high score tables – used in video games or in gaming competition sites

Relationships (e.g. friendships) – used in “social” sites

Activity feeds – also known as timelines in “social” sites

The talk will cover these use cases in detail and the development of libraries around each separate use case. Particular attention for each service will be devoted to service failover, scaling and performance issues.

Lucas Dohmen – Rapid API Development with Foxx

Slides

Foxx is a feature of the upcoming version of the free and open source NoSQL database ArangoDB. It allows you to build APIs directly on top of the database and therefore skip the middleman (Rails, Django or whatever your favorite web framework is). This can for example be used to build a backend for Single Page Web Applications. It is designed with simplicity and the specific use case of modern client-side MVC frameworks in mind featuring tools like an asset delivery system.

Stefan Edlich – NoSQL in 5+ years

Slides

Currently it’s getting harder and harder to keep track of all the movements in the SQL, NoSQL and NewSQL world. Furthermore even polyglot persistence can have many meanings and peculiarities. This talk shows possible directions where NoSQL might move in this decade. We will discuss db-model integration- and storage aspects together with some of the hottest systems in the market that lead the way today. We conclude with some survival strategies for us as users / companies in this messy world.

Benjamin Engber – How to Compare NoSQL Databases: Determining True Performance and Recoverability Metrics For Real-World Use Cases

Slides

One of the major challenges in choosing an appropriate NoSQL solution is finding reliable information as to how a particular database performs for a given use case. Stories of high profile systems failures abound, and are tossed around with widely varying benchmark numbers that seem to have no bearing on how tuned systems behave out of the lab. Many of the profiling tools and studies out there use deeply flawed tools or methodologies. Getting meaningful data out of published benchmark studies is difficult, and running internal benchmarks even more so.

In this presentation we discuss how to perform tuned benchmarking across a number of NoSQL solutions (Couchbase, Aerospike, MongoDB, Cassandra, HBase, others) and to do so in a way that does not artificially distort the data in favor of a particular database or storage paradigm. This includes hardware and software configurations, as well as ways of measuring to ensure repeatable results.

We also discuss how to extend benchmarking tests to simulate different kinds of failure scenarios to help evaluate the maintainability and recoverability of different systems. This requires carefully constructed tests and significant knowledge of the underlying databases; the talk will help evaluators overcome the common pitfalls and time sinks involved in trying to measure this.

Lastly we discuss the YCSB benchmarking tool, its significant limitations, and the significant extensions and supplementary tools Thumbtack has created to provide distributed load generation and failure simulation.

Ralf S. Engelschall – Polyglot Persistence: Boon and Bane for Software Architects

Slides

For two decades, RDBMS have been the established single standard for every type of data storage in business information systems. In the last few years, the NoSQL movement brought us a myriad of interesting alternative data storage approaches. Now Polyglot Persistence tells us to leverage the combination of multiple data storage approaches in a “best tool for the job” approach, including the combination of RDBMS and NoSQL.

This presentation addresses the following questions: What does Polyglot Persistence look like in practice when implementing a full-size business information system with it? What interesting use-cases towards the persistence layer exist here? What technical challenges are we faced with here? What does the persistence layer architecture look like when using multiple storage backends? What are the technical alternative solutions to implement Polyglot Persistence?

Martin Fowler – NoSQL Distilled to an hour

NoSQL databases offer a significant change to how enterprise applications are built, challenging the two-decade hegemony of relational databases. The question people face is whether NoSQL databases are an appropriate choice, either for new projects or to introduce to existing projects. I’ll give a rapid introduction to NoSQL databases: where they came from, the nature of the data models they use, and the different way you have to think about consistency. From this I’ll outline what kinds of circumstances you should consider using them, why they will not make relational databases obsolete, and the important consequence of polyglot persistence.

Uwe Friedrichsen – How to survive in a BASE world

Slides

NoSQL, Big Data and Scale-out in general are leaving the hype plateau and starting to become enterprise reality. This usually means no more ACID transactions, but BASE transactions instead. When confronted with BASE, many developers just shrug and think “Okay, no more SQL but that’s basically it, isn’t it?”. They are terribly wrong!

BASE transactions do not guarantee data consistency at all times anymore, which is a property we became so used to in the ACID years that we barely think about it anymore. But if we continue to design and implement our applications as if there still were ACID transactions, system crashes and corrupt data will become our daily companions.

This session gives a quick introduction into the challenges of BASE transactions and explains how to design and implement a BASE-aware application using real code examples. Additionally we extract some concrete patterns in order to preserve the ideas in a concise way. Let’s get ready to survive in a BASE world!

Lars George – HBase Schema Design

Slides

HBase is the Hadoop Database, a random access store that can easily scale to Petabytes of data. It employs common logical concepts, such as rows and tables. But the lack of transactions and the simple CRUD API, combined with a nearly schema-less data layout, require a deeper understanding of its inner workings, and how these affect performance. The talk will discuss the architecture behind HBase and lead into practical advice on how to structure data inside HBase to gain the best possible performance.

Lars George – Introduction to Hadoop

Slides

Apache Hadoop is the most popular solution for today’s big data problems. By connecting multiple servers, Hadoop provides a redundant and distributed platform to store and process large amounts of data. This presentation will introduce the architecture of Hadoop and the various interfaces to import and export data into it. Finally, a range of tools will be presented to access the data which include a NoSQL layer called HBase, a Scripting Language layer called Pig, but also a good old SQL approach through Hive.

Kris Geusebroek – Creating Neo4j Graph Databases with Hadoop

Slides

When exploring very large raw datasets containing massive interconnected networks, it is sometimes helpful to extract your data, or a subset thereof, into a graph database like Neo4j. This allows you to easily explore and visualize networked data to discover meaningful patterns. When your graph has 100M+ nodes and 1000M+ edges, using the regular Neo4j import tools will make the import very time-intensive (as in many hours to days).

In this talk, I’ll show you how we used Hadoop to scale the creation of very large Neo4j databases by distributing the load across a cluster and how we solved problems like creating sequential row ids and position-dependent records using a distributed framework like Hadoop.

Tugdual Grall – Introduction to Map Reduce with Couchbase 2.0

Slides

MapReduce allows systems to delegate query processing to different machines in parallel. Couchbase 2.0 allows developers to use Map Reduce to query JSON-based data across a large volume of data (and servers). Couchbase 2.0 features incremental map reduce, which provides powerful aggregates and summaries, even with large datasets for distributed real-time analytic use cases. In this session you will learn:

– What is MapReduce?

– How incremental map-reduce works in Couchbase Server 2.0?

– How to utilize incremental map-reduce for real-time analytics?

– Common use cases this feature addresses?

In this session you will see a demonstration of how you can create a new Map Reduce function and use it in your application.
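For readers new to the idea, here is a minimal, single-process sketch of the map and reduce steps (my own illustration in Python, not code from the talk). Couchbase views are written in JavaScript and are maintained incrementally across a cluster; none of that is modeled here. The function names and the sample documents are made up.

```python
from collections import defaultdict


def map_doc(doc):
    """Map step: emit (key, value) pairs for one JSON-like document."""
    if "city" in doc and "amount" in doc:
        yield doc["city"], doc["amount"]


def reduce_values(key, values):
    """Reduce step: collapse all values emitted for one key."""
    return sum(values)


def map_reduce(docs, mapper, reducer):
    # Group every emitted value under its key, then reduce each group.
    groups = defaultdict(list)
    for doc in docs:
        for key, value in mapper(doc):
            groups[key].append(value)
    return {key: reducer(key, values) for key, values in groups.items()}


orders = [
    {"city": "Cologne", "amount": 10},
    {"city": "Berlin", "amount": 5},
    {"city": "Cologne", "amount": 7},
    {"type": "note"},  # skipped by the mapper: no city or amount
]
totals = map_reduce(orders, map_doc, reduce_values)
```

Because the mapper looks at one document at a time and the reducer at one key at a time, both steps can in principle be spread over many machines, which is the point of the model.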

Alex Hall – Processing a Trillion Cells per Mouse Click

Slides

Column-oriented database systems have been a real game changer for the industry in recent years. Highly tuned and performant systems have evolved that provide users with the possibility of answering ad hoc queries over large datasets in an interactive manner. In this paper we present the column-oriented datastore developed as one of the central components of PowerDrill (internal Google data-analysis project).

It combines the advantages of columnar data layout with other known techniques (such as using composite range partitions) and extensive algorithmic engineering on key data structures. The main goal of the latter being to reduce the main memory footprint and to increase the efficiency in processing typical user queries. In this combination we achieve large speed-ups. These enable a highly interactive Web UI where it is common that a single mouse click leads to processing a trillion values in the underlying dataset.

Randall Hauch – Elastic, consistent and hierarchical data storage with ModeShape 3

Slides

ModeShape 3 is an elastic, strongly-consistent hierarchical database that supports queries, full-text search, versioning, events, locking and use of schema-rich or schema-less constraints. It’s perfect for storing files and hierarchically structured data that will be accessed by navigation or queries. You can choose where (if at all) you want ModeShape to enforce your schema, but your structure and schema can always evolve as your needs change. Sequencers make it easy to extract structure from stored files, and federation can bring into your database information from external systems. It’s fast, sits on top of an Infinispan data grid, and is open source. Learn about the benefits of ModeShape 3, and how to deploy and use it to store your own data.

Michael Hausenblas – Apache Drill In-Depth Dissection

Slides

The Apache Drill project ostensibly has goals that make it look a lot like Dremel in that the mainline use case involves SQL or SQL-like queries applied to a large distributed data store, possibly organized in a columnar format.

In fact, however, Drill is a highly flexible architecture that allows it to serve many needs. Moreover, Drill has standardized internal APIs which allow easy extension for experimentation with parallel query evaluation. This is achieved by defining a standard logical query data flow language with a standardized and very flexible JSON syntax. Operators can be added to this framework very easily with the only assumption being that operators have inputs that are record sequences and a single output consisting of a record sequence. A SQL to logical query translator and the operators necessary to evaluate these queries are part of the standard Drill, but alternative syntax is easily added and alternative semantics are easily substituted.

This talk will describe the overall architecture of Drill, report on the progress in building an open source development community and show how Drill can be used to do machine learning, how Drill can be embedded in a language like Scala or Groovy, and how new syntax components can be added to support a language like Pig. This will be done by a description of how new parsers and operators are added. In addition, I will provide a description of how Drill uses Optiq to do cost-based query optimization.

Michael Hunger – Cypher Query Language and Neo4j

Slides

The Neo4j graph database is all about relationships. It allows you to model domains of connected data easily. Querying using an imperative API is cumbersome. So we decided to develop a query language more suited to query graph data and focused on readability.

Taking inspiration from SQL, SPARQL and others and using Scala to implement it turned out to be a good decision. Cypher has become one of the things people love about Neo4j. So in the talk we’ll introduce the language and its applicability for graph querying. We will focus on the expressive declaration of patterns, conditions and projections as well as the updating capabilities.

Michael Hunger – Intro to Neo4j or Domain Modeling with graphs

Slides (No abstract)

Dr. Stefan Kaes & Sebastian Röbke – Triple R – Riak, Redis and RabbitMQ at XING

Slides

This talk will focus on how the team at XING, a popular social network for business professionals, rebuilt the activity stream for xing.com with Riak.

We will present interesting challenges we had to solve, like:

* Finding the proper data structures for storing very large activity streams

* Dealing with eventual consistency

* Migrating legacy data

* Setting up Riak in production

Piotr Kolaczkowski – Scalable Full-Text Search with DataStax Enterprise

Slides

Cassandra is the scalable NoSQL database powering modern applications at companies like Netflix, eBay, Disney, and hundreds of others. Solr is the popular enterprise search platform from the Apache Lucene project, offering powerful full-text search, hit highlighting, faceted search, near real-time indexing, dynamic clustering, and much more. The DataStax Enterprise platform integrates them both in such a way that data stored in Cassandra can be accessed and searched using Solr, and data stored in Solr can be accessed through Cassandra. This talk will describe the high level architecture of DataStax Enterprise Search as well as specific algorithms responsible for massive scalability and good load balancing of Solr-enabled clusters.

Steffen Krause – DynamoDB – on-demand NoSQL scaling as a service

Slides

Scaling a distributed NoSQL database and making it resilient to failure can be hard. With Amazon DynamoDB, you just specify the desired throughput, consistency level and upload your data. DynamoDB does all the heavy lifting for you. Come to this session to get an overview of an automated, self-managed key-value store that can seamlessly scale to hundreds of thousands of operations per second.

Hannu Krosing – PostSQL – using PostgreSQL as a better NoSQL

Slides

This talk will describe using PostgreSQL’s superior ACID data engine “in a NoSQL way”, that is, using PostgreSQL’s support for JSON and other dynamic/freeform types and embedded languages (pl/python, pl/jsv8) for data processing near data.

Also, scaling the Skype way using pl/proxy sharding language, pgbouncer connection pooler and skytools data moving and transforming multitool is demonstrated. Performance comparisons to popular NoSQL databases are also shown.

Fabian Lange – There and Back Again: A Cloud’s Tale

Slides

Want to see how we built our cloud based document management solution CenterDevice? This talk will cover the joy and pain of working with MongoDB, Elastic Search, Gluster FS, Rabbit MQ, Java and more. I will show how we build, deploy, run and monitor our very own cloud. You will learn what we learned, what worked and where hype met reality. Warning: no unicorns or pixie dust.

Dennis Meyer & Uwe Seiler – Map/Reduce in Action: Large Scale Reporting Based on Hadoop and Vertica

Slides

German based ADTECH GmbH, an AOL Inc. Company, is a leading international supplier of digital marketing solutions delivering approx. 6 billion advertisements on a daily basis for customers in over 25 countries. Every ad delivery needs to be logged for reasons of billing but additionally ADTECH’s customers want to know as much detail as possible about those deliveries. Until recently the reporting part of ADTECH’s infrastructure was based on a custom C++ reporting solution used in combination with multiple databases. With ever increasing traffic the performance was reaching its limits, especially for the customer-critical end-of-month reporting period. Furthermore changes to custom reports were complex and time consuming due to the highly intermeshed architecture. The delays in producing these customizations were a source of customer dissatisfaction. To solve these issues ADTECH, in partnership with codecentric AG, made the move to a more flexible and performant architecture based on the Apache Hadoop ecosystem. With this new approach all details about the ad deliveries are logged using a combination of Avro and Flume, stored into HDFS and then aggregated using Map/Reduce with Pig before being stored in the NoSQL datastore Vertica. This talk aims to give an overview of the architecture and explain the architectural decisions made with a strong focus on the lessons learned.

Konstantin Osipov – Persistent message queues with Tarantool/Box

Slides

Tarantool/Box is an open-source, persistent, transactional in-memory database with rich support of Lua as a stored procedures and extensions language.

In the diverse world of NoSQL, Tarantool/Box owns a niche of a database efficiently running important parts of Web application logic, thus smartly enabling Web application to scale. In this talk I’ll present several new use cases in which Tarantool/Box plays a special role, and our new features implemented to support them. In particular, I’ll explore the problem of persistent transactional message queues and the role they play in highly available software. I will demonstrate how Tarantool/Box can be used as a reliable message queue server, but customized with parts of application-specific logic.

I’ll show-case Tarantool/Box features, designed to support message queues:

– inter-stored-procedure communication channels, to effectively exchange messages between task producers and consumers

– triggers, fired upon system events, such as connect or disconnect, and their usage in an efficient queue implementation

– new database index types: bitmap, partial and functional indexes, necessary to implement very large queues with minimal memory footprint.

Panel discussion (No abstract)

Mahesh Paolini-Subramanya – NoSQL the Telco way

Slides

Being a decent-sized Telecommunications provider, we process a lot of calls (hundreds/second), and need to keep track of all the events on each call. Technically speaking, this is “A Lot” of data – data that our clients (and our own people!) want real-time access to in a myriad of ways. We’ve ended up going through quite a few NoSQL stores in our quest to satisfy everyone – and the way we do things now has very little to do with where we started out. Join me as I describe our experience and what we’ve learned, focusing on the Big 4, viz.

– The “solution-oriented” nature of NoSQL repeatedly changed our understanding of our problem-space – sometimes drastically.

– The system behavior, particularly the failure modes, was significantly different at scale

– The software model kept getting overhauled – regardless of how much we planned ahead

– We came to value agility – the ability to change direction – above all (yes, even at a Telco!)

Eric Redmond – Distributed Patterns You Should Know

Slides

Do you use Merkle trees? How about hash rings? Vector clocks? How many message patterns do you know? In this increasingly decentralized world, a firm grasp of the pantheon of data structures and patterns used to manage decentralized systems is no longer a luxury, it’s a necessity. You may never implement a pipeline, but chances are, you already use them.
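If hash rings are new to you, the following is a small sketch of consistent hashing (my own illustration, not taken from the talk). The class name `HashRing`, the node names and the choice of 64 virtual nodes per server are assumptions made for the example. Each node is placed at many points on a ring of hash values, and a key belongs to the first node point at or after the key’s own hash, wrapping around at the end.

```python
import bisect
import hashlib


class HashRing:
    """Consistent hash ring with virtual nodes (illustrative sketch)."""

    def __init__(self, nodes, vnodes=64):
        # Place every node at `vnodes` pseudo-random points on the ring.
        self._ring = sorted(
            (self._hash(f"{node}#{i}"), node)
            for node in nodes
            for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value):
        digest = hashlib.sha256(value.encode("utf-8")).hexdigest()
        return int(digest, 16)

    def node_for(self, key):
        # First ring point clockwise from the key's hash, wrapping at the end.
        idx = bisect.bisect_right(self._points, self._hash(key))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(["n1", "n2", "n3"])
owner = ring.node_for("user:42")
```

The useful property is that removing a node only moves the keys that node owned; every other key keeps its owner, which is why Dynamo-style stores use this layout.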

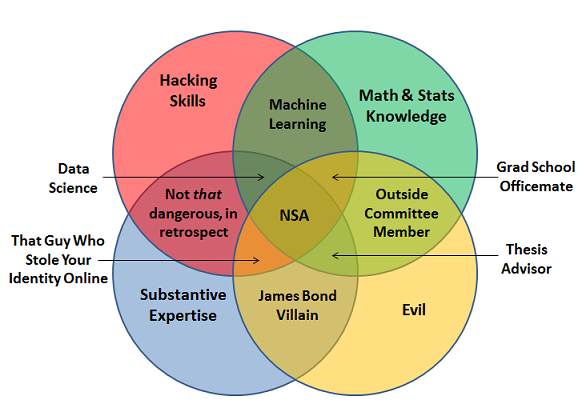

Larysa Visengeriyeva – Introduction to Predictive Analytics for NoSQL Nerds (Slides, no video)

Rolling out and running a NoSQL Database is only half the battle. It’s obvious to see that NoSQL Databases are used more and more in companies and start-ups where there is a huge need to dig the ‘big-data’ treasures. This requires a profound knowledge of mathematics, statistics, AI, data mining and machine-learning where experts are rare. This talk will give an overview of the most important concepts mentioned before. Furthermore tools, techniques and experiences for a successful data analysis will be introduced. Finally this talk closes with a practical implementation for analyzing text – following the ‘IBM Watson’ idea.

Matthew Revell – Building scalability into your next project with Riak

Slides

Change is the one thing you can guarantee will happen in your next project. Fixing your schema before you’ve even launched means taking a massive gamble over what your users will want and how you’re going to deliver it.

The new generation of schema-free databases give you the flexibility to learn what your users need and what your application should do. As a bonus, databases such as Riak give you huge scalability meaning that you needn’t fear success.

Matthew introduces the new world of schema-free/NoSQL databases and focuses on how Riak can make your next project truly web-scale.

Martin Schoenert – CAP and Architectural Consequences

Slides

The blunt formulation of the CAP theorem states that any database system can achieve only 2 of the 3 properties: consistency, availability and partition-tolerance. We look at it more closely and see that this formulation is misleading, because there is not a single big design decision but several smaller ones for the design of a database system. We then concentrate on the architectural consequences for massively distributed database systems and argue that such systems must place restrictions on consistency and functionality.

Jan Steemann – Query Languages for Document Stores

Slides

SQL is the standard and established way to query relational databases.

As the name “NoSQL” suggests, NoSQL databases have gone some other way, coming up with several approaches of querying, e.g. access by key, map/reduce, and even own full-featured query languages.

We surely don’t want the super-fast key/value store to require us to use a full-blown query language and slow us down – but for several other cases querying using a language can still be convenient. This is especially the case in document stores that have a wide range of use cases and allow us to look at different aspects of the same data.

As there isn’t yet an established standard for querying document databases, the talk will showcase some of the existing implementations such as UNQL, AQL, and JSONiq. Additionally, related topics such as graph query languages will be covered.

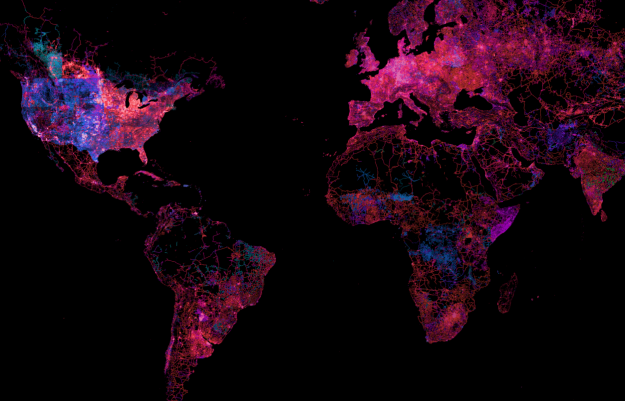

Kai Wähner – Big Data beyond Hadoop – How to integrate ALL your data

Slides

Big data represents a significant paradigm shift in enterprise technology. Big data radically changes the nature of the data management profession as it introduces new concerns about the volume, velocity and variety of corporate data.

Apache Hadoop is the open source de facto standard for implementing big data solutions on the Java platform. Hadoop consists of its kernel, MapReduce, and the Hadoop Distributed Filesystem (HDFS). A challenging task is to send all data to Hadoop for processing and storage (and then get it back to your application later), because in practice data comes from many different applications (SAP, Salesforce, Siebel, etc.) and databases (File, SQL, NoSQL), uses different technologies and concepts for communication (e.g. HTTP, FTP, RMI, JMS), and consists of different data formats using CSV, XML, binary data, or other alternatives.

This session shows the powerful combination of Apache Hadoop and Apache Camel to solve this challenging task. Learn how to use every thinkable data with Hadoop – without plenty of complex or redundant boilerplate code. Besides supporting the integration of all different technologies and data formats, Apache Camel also offers an easy, standardized DSL to transform, split or filter incoming data using the Enterprise Integration Patterns (EIP). Therefore, Apache Hadoop and Apache Camel are a perfect match for processing big data on the Java platform.

Simon Willnauer – With a hammer in your hand…

ElasticSearch combines the power of Apache Lucene (NoSQL since 2001) and the movement of distributed, scalable high-performance NoSQL solutions into an easy-to-use, schema-free search engine that can serve full-text search requests, key-value lookups, schema-free analytics requests, facets or even suggestions in real-time. This talk will give an introduction to the key features of ElasticSearch with live examples.

The talk won’t be an exhaustive feature presentation but rather an overview of what ElasticSearch can do for you and how.

Randall Wilson – A Billion Person Family Tree with MongoDB

Slides

FamilySearch maintains a collaborative family tree with a billion individuals in it. The tree is edited in real time by thousands of concurrent users. Recent experiments to move the tree from a relational database to MongoDB yielded a huge gain in performance. This presentation reviews the lessons learned throughout this experience, including how to deal with things such as operations that previously depended on transactional integrity. It also shares some insights into the experience gained by testing against Riak and other solutions.

I first saw this in a tweet by Eugene Dvorkin.