Cray Parlays Supercomputing Technology Into Big Data Appliance by Michael Feldman.

From the post:

For the first time in its history, Cray has built something other than a supercomputer. On Wednesday, the company’s newly hatched YarcData division launched “uRiKA,” a hardware-software solution aimed at real-time knowledge discovery with terascale-sized data sets. The system is designed to serve businesses and government agencies that need to do high-end analytics in areas as diverse as social networking, financial management, healthcare, supply chain management, and national security.

As befits Cray’s MO, their target market for uRiKA (pronounced Eureka) is slanted toward the cutting edge. It uses a graph-based data approach to do interactive analytics with large, complex, and often dynamic data sets. “We are not trying to be everything for everybody,” says YarcData general manager Arvind Parthasarathi. (emphasis added) (YarcData is a new division at Cray. Just a little more name confusion for everyone.)

Read the article for the hardware/performance stats but consider the following on graphs:

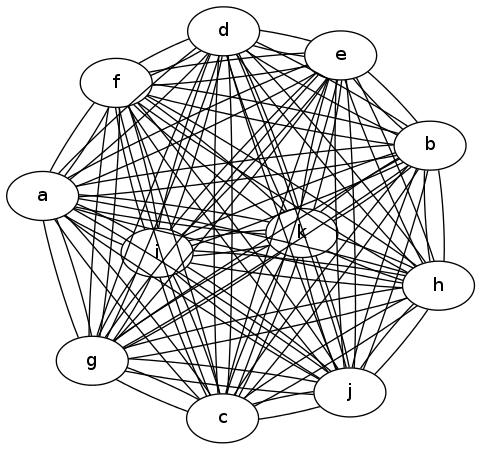

More to the point, uRiKA is designed to analyze graphs rather than simple tabular databases. A graph, one of the fundamental data abstractions in computer science, is basically a structure whose objects are linked together by some relationship. It is especially suited to structures like website links, social networks, and genetic maps — essentially any data set where the relationships between the objects are as important as the objects themselves.

This type of application exists further up the analytics food chain than most business intelligence or data mining applications. In general, a lot of these more traditional applications involve searching for particular items or deriving simple relationships. The YarcData technology is focused on relationship discovery. And since it uses graph structures, the system can support graph-based reasoning and deductions to uncover new relationships.

A typical example is pattern-based queries — does x resemble y? This might not lead to a definitive answer, but will provide a range of possibilities, which can then be further refined. So, for example, one of YarcData’s early customers is a government agency that is interested in finding “persons of interest.” They maintain profiles of terrorists, criminals or other ne’er-do-wells, and are using uRiKA to search for patterns of specific behaviors and activities. A credit card company could use the same basic algorithms to search for fraudulent transactions.

YarcData uses the term “relationship analytics” to describe this approach. While that might sound a bit Oprah-ish, it certainly emphasizes the importance of extracting knowledge from how the objects are connected rather than just their content. This is not to be confused with relational databases, which are organized in tabular form and use simpler forms of querying.
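To make “relationship analytics” a bit more concrete, here is a minimal Python sketch of a graph stored as adjacency sets, plus a pattern-style “does x resemble y?” query that returns a ranked range of possibilities. It is not YarcData’s algorithm: the scoring is plain Jaccard similarity of neighbor sets, a deliberately simple stand-in, and the node names (`profile`, `alice`, `wire_transfer` and so on) are invented toy data.

```python
from collections import defaultdict

def build_graph(edges):
    """Undirected graph as a dict: node -> set of neighboring nodes."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph

def jaccard(graph, x, y):
    """Fraction of x's and y's neighbors they share, from 0.0 to 1.0."""
    nx, ny = graph.get(x, set()), graph.get(y, set())
    union = nx | ny
    return len(nx & ny) / len(union) if union else 0.0

def resembles(graph, profile, threshold=0.0):
    """Rank other nodes by how closely their neighborhoods resemble `profile`'s."""
    scores = [(node, jaccard(graph, profile, node)) for node in graph if node != profile]
    hits = [(node, score) for node, score in scores if score > threshold]
    return sorted(hits, key=lambda hit: (-hit[1], hit[0]))

# Hypothetical toy data: people (and one stored profile) linked to activities.
edges = [
    ("profile", "wire_transfer"), ("profile", "prepaid_phone"), ("profile", "border_crossing"),
    ("alice", "wire_transfer"), ("alice", "prepaid_phone"), ("alice", "border_crossing"),
    ("bob", "wire_transfer"), ("bob", "grocery_store"),
    ("carol", "grocery_store"),
]
graph = build_graph(edges)
print(resembles(graph, "profile"))  # [('alice', 1.0), ('bob', 0.25)]
```

The answer depends entirely on how objects are connected, not on their content, which is the point the article is making. A real persons-of-interest or fraud application would need much richer features than shared neighbors.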

And:

After data is ingested, it needs to be converted to an internal format called RDF, or Resource Description Framework (in case you were wondering, uRiKA stands for Universal RDF Integration Knowledge Appliance), an industry standard graph format for representing information on the Web. According to Mufti, they are providing tools for RDF data conversion and are also laying the groundwork for standards-based software that allows for third-party conversion tools.

Industry standard is a common theme here. uRiKA’s software internals include SUSE Linux, Java, Apache, WSO2, Google Gadgets, and RelFinder. That stack of interfaces allows users to write or port analytics applications to the platform without having to come up with a uRiKA-specific implementation. So Java, J2EE, SPARQL, and Gadget apps are all fair game. YarcData thinks this will be key to encouraging third-party developers to build applications on top of the system, since it doesn’t require them to use a whole new programming language or API.
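For readers who haven’t worked with RDF, the sketch below shows roughly what “conversion” of tabular data involves: each row becomes a set of (subject, predicate, object) triples, and a query is a list of triple patterns whose shared variables act as joins, which is the core of a SPARQL `WHERE` clause. This is plain Python under stated assumptions, not uRiKA’s conversion tooling; the `http://example.org/` namespace, the column names, and the `rows_to_triples` and `match` helpers are all hypothetical.

```python
EX = "http://example.org/"  # hypothetical namespace, not a real vocabulary

def rows_to_triples(rows, key):
    """Convert tabular rows (dicts) into (subject, predicate, object) triples.

    The `key` column becomes the subject URI; every other column becomes a
    predicate URI whose object is the cell value (kept as a plain literal).
    """
    triples = set()
    for row in rows:
        subject = EX + str(row[key])
        for column, value in row.items():
            if column != key:
                triples.add((subject, EX + column, value))
    return triples

def match(triples, patterns, binding=None):
    """Yield variable bindings satisfying every triple pattern.

    Terms starting with '?' are variables; a variable used in several
    patterns must bind to the same value in each (a join).
    """
    binding = binding or {}
    if not patterns:
        yield binding
        return
    first, rest = patterns[0], patterns[1:]
    for triple in triples:
        candidate = dict(binding)
        ok = True
        for term, value in zip(first, triple):
            if isinstance(term, str) and term.startswith("?"):
                # Bind a fresh variable, or check it agrees with an earlier binding.
                if candidate.setdefault(term, value) != value:
                    ok = False
                    break
            elif term != value:
                ok = False
                break
        if ok:
            yield from match(triples, rest, candidate)

# Hypothetical card-transaction table.
rows = [
    {"id": "t1", "card": "card42", "merchant": "m7"},
    {"id": "t2", "card": "card42", "merchant": "m9"},
    {"id": "t3", "card": "card17", "merchant": "m7"},
]
triples = rows_to_triples(rows, key="id")
query = [("?t", EX + "card", "card42"), ("?t", EX + "merchant", "?m")]
print(sorted(b["?m"] for b in match(triples, query)))  # ['m7', 'm9']
```

In SPARQL the same question would read roughly `PREFIX ex: <http://example.org/> SELECT ?m WHERE { ?t ex:card "card42" . ?t ex:merchant ?m }`. A real conversion would also have to decide which values become URIs, which stay literals, and what datatypes they carry, and those choices are exactly what documentation would need to explain.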

At least as of today, CrayDoc has no documentation on conversion to the “…industry standard graph format…” RDF or the details of its graph operations.

Parthasarathi talks about shoehorning data into relational databases. So why does uRiKA shoehorn data into RDF?

Perhaps the documentation, when it appears, will explain the choice of RDF as a “data format.” (I know RDF isn’t a format, I am just repeating what the article says.)

I am curious because efficient graph structures are going to be necessary for a variety of problems. Has Cray/YarcData compared RDF with other graph structures for performance on particular problems? If so, are the tests and test data available?
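Even a toy example shows why the choice of structure matters for performance. The sketch below (hypothetical helper names, synthetic chain-shaped data) answers the same “what does node X link to?” query two ways: by scanning a flat list of triples, and through a subject-keyed adjacency index built once up front. It counts entries examined rather than timing anything, and it says nothing about how uRiKA itself stores graphs; production RDF stores generally index their triples as well, which is why published comparisons on real workloads would be so useful.

```python
from collections import defaultdict

def neighbors_by_scan(triples, subject):
    """Flat triple list: every lookup walks all triples, so cost grows with graph size."""
    examined, found = 0, []
    for s, p, o in triples:
        examined += 1
        if s == subject:
            found.append((p, o))
    return found, examined

def build_index(triples):
    """Adjacency-style index built once: subject -> list of (predicate, object)."""
    index = defaultdict(list)
    for s, p, o in triples:
        index[s].append((p, o))
    return index

def neighbors_by_index(index, subject):
    """Indexed lookup: only the subject's own edges are examined."""
    found = index.get(subject, [])
    return list(found), len(found)

# Synthetic chain graph: n0 -> n1 -> ... -> n1000
triples = [(f"n{i}", "links_to", f"n{i + 1}") for i in range(1000)]
index = build_index(triples)

scan_result, scan_cost = neighbors_by_scan(triples, "n5")
index_result, index_cost = neighbors_by_index(index, "n5")
print(scan_result, scan_cost)    # [('links_to', 'n6')] 1000
print(index_result, index_cost)  # [('links_to', 'n6')] 1
```

Both approaches return the same answer; the difference is how much work each query costs, and which representation wins depends on the queries a given problem actually runs.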

Before laying out sums in the “low hundreds of thousands of dollars,” I would want to know I wasn’t brute-forcing solutions when less costly and more elegant solutions existed.