From the description:

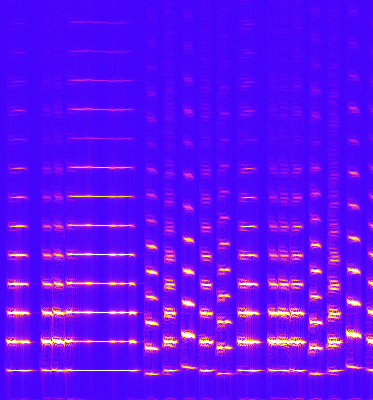

From OSCON 2014: Is it possible to imagine a future where “concert programmers” are as common a fixture in the world's auditoriums as concert pianists? In this presentation, Andrew will be live-coding the generative algorithms that will be producing the music that the audience will be listening to. As Andrew is typing, he will also attempt to narrate the journey, discussing the various computational and musical choices made along the way. A must-see for anyone interested in creative computing.

This impressive demonstration is performed using Extempore.

From the GitHub page:

Extempore is a systems programming language designed to support the programming of real-time systems in real-time. Extempore promotes human orchestration as a meta model of real-time man-machine interaction in an increasingly distributed and environmentally aware computing context.

Extempore is designed to support a style of programming dubbed ‘cyberphysical’ programming. Cyberphysical programming supports the notion of a human programmer operating as an active agent in a real-time distributed network of environmentally aware systems. The programmer interacts with the distributed real-time system procedurally by modifying code on-the-fly. In order to achieve this level of on-the-fly interaction Extempore is designed from the ground up to support code hot-swapping across a distributed heterogeneous network, compiler as service, real-time task scheduling and a first class semantics for time.

Extempore is designed to mix the high-level expressiveness of Lisp with the low-level expressiveness of C. Extempore is a statically typed, type-inferencing language with strong temporal semantics and a flexible concurrency architecture in a completely hot-swappable runtime environment. Extempore makes extensive use of the LLVM project to provide back-end code generation across a variety of architectures.

For more detail on what the Extempore project is all about, see the Extempore philosophy.
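To make the hot-swapping and scheduling ideas in the README excerpt a little more concrete, here is a minimal sketch in Python. It is not Extempore code and does not use any Extempore API; the `Scheduler` class, the `define`/`schedule`/`run_until` methods, and the `play_v1`/`play_v2` callbacks are all hypothetical names invented for illustration. It assumes a simplified logical clock instead of real time. The point it tries to show is that a callback which reschedules itself by name picks up a new definition the next time it fires.

```python
import heapq
from itertools import count


class Scheduler:
    """Toy logical-time scheduler (hypothetical, for illustration only).

    Callbacks are stored by name and looked up when they fire, so
    redefining a name changes the behavior of events already queued.
    """

    def __init__(self):
        self.now = 0
        self.registry = {}    # name -> current function definition
        self._queue = []      # heap of (time, sequence, name, args)
        self._seq = count()   # tie-breaker so the heap never compares args

    def define(self, name, fn):
        # Installing a function under an existing name "hot-swaps" it.
        self.registry[name] = fn

    def schedule(self, time, name, *args):
        heapq.heappush(self._queue, (time, next(self._seq), name, args))

    def run_until(self, end_time):
        # Fire every queued event whose time is <= end_time, in time order.
        while self._queue and self._queue[0][0] <= end_time:
            time, _, name, args = heapq.heappop(self._queue)
            self.now = time
            self.registry[name](self, *args)  # late lookup by name
        self.now = end_time


log = []


def play_v1(sched, pitch):
    # First version: step up by one each beat, then reschedule by name.
    log.append((sched.now, "v1", pitch))
    sched.schedule(sched.now + 1, "play", pitch + 1)


def play_v2(sched, pitch):
    # Replacement version: step up by two each beat.
    log.append((sched.now, "v2", pitch))
    sched.schedule(sched.now + 1, "play", pitch + 2)


sched = Scheduler()
sched.define("play", play_v1)
sched.schedule(0, "play", 60)
sched.run_until(2)             # beats 0, 1, 2 run the first version
sched.define("play", play_v2)  # swap the definition while the loop is "live"
sched.run_until(4)             # beats 3, 4 run the replacement
```

After the swap, the event that the first version had already queued for beat 3 runs the second version, because the scheduler resolves the name `"play"` only at fire time. A real live-coding system would also need wall-clock timing, compilation, and concurrency, none of which this sketch attempts.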

Extempore is for programmers only at this stage, but can you imagine the impact of “live searching,” where data structures and indexes arise from interaction with searchers? Definitely worth a long look!

I first saw this in a tweet by Alan Zucconi.