Accelerate Your Newsgathering and Verification reported on a post in which 3 out of 5 newsgathering tools were for images. But as I mentioned, there are important but non-visual stories that need improved tools for newsgathering and verification.

The copyright struggle between the Blue People and Carl Malamud is an important but, thus far, non-visual story.

Here’s the story in a nutshell:

Laws, court decisions, agency rulings, etc., that govern our daily lives, are found in complex document stores. They have complex citation systems to enable anyone to find a particular law, decision, or rule.

Those systems are like the Dewey Decimal system or the Library of Congress classification, except several orders of magnitude more complex. And the systems vary from state to state, etc.

It’s important to get citations right. Well, let’s let the BlueBook speak for itself:

The primary purpose of a citation is to facilitate finding and identifying the authority cited…. (A Uniform System of Citation, Tenth Edition, page iv.)

If you are going to quote a law or have access to it, you must have the correct citation.

In order to compel people to obey the law, the government must give them fair notice of it. And it stands to reason that if you can’t find the law because you have no access to a citation guide, you are SOL as far as access to the law.

The courts come into the picture, being as lazy as, if not lazier than, programmers, by referring to the “BlueBook” as the standard for citations. Courts could have written out their citation practices but, as I said, courts are lazy.

Over time, the courts enshrined their references to the “BlueBook” in court rules, which grants the “BlueBook” an informal monopoly on legal citations and access to the law.

As you have guessed by now, the Blue People, with their government-created, unregulated monopoly, charge for the privilege of knowing how to find the law.

The Blue People are quite fond of their monopoly and are loath to relinquish it, even though a compilation of how statutes, regulations and court decisions are cited in fact is “sweat of the brow” work and not eligible for copyright protection.

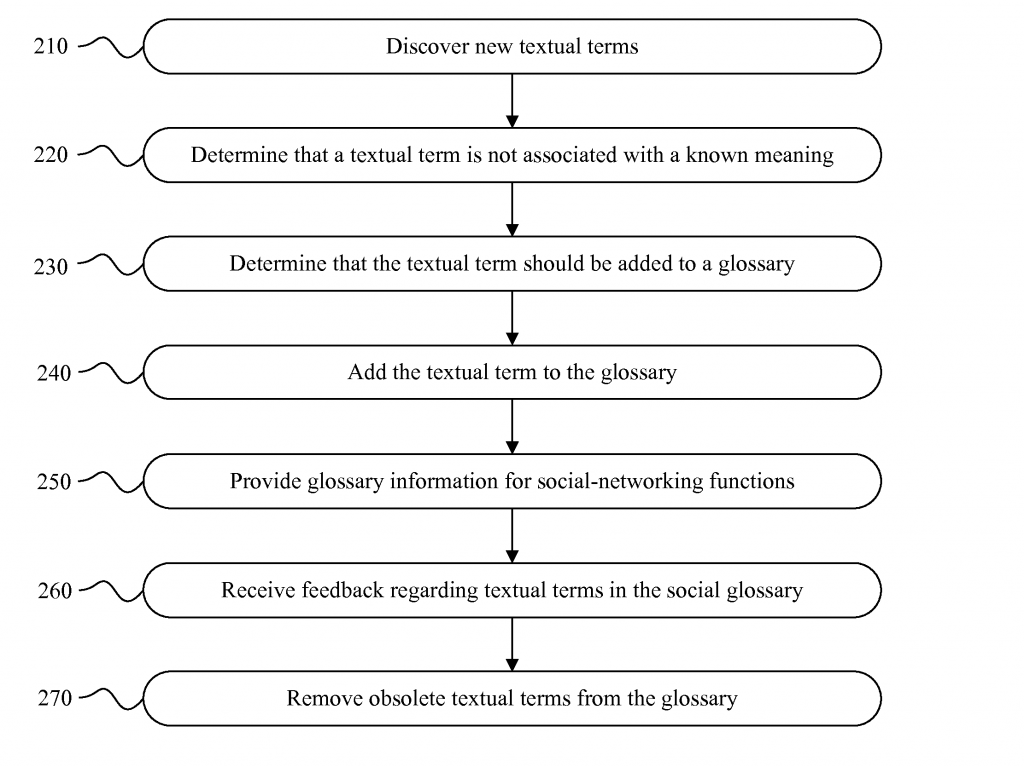

A Possible Solution, Based on Capturing Public Facts

The answer to claims of copyright by the Blue People is to collect evidence of the citation practices in all fifty states and federal practice and publish such evidence along with advisory comments on usage.

Fifty law students or librarians could accomplish the task in parallel using modern search technologies and legal databases. Their findings would need to be collated, but once that is done, every state plus federal practice, including nuances, would be easily accessible to anyone.
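The collating step could be as simple as the rough sketch below. It assumes each volunteer hands back plain (jurisdiction, citation) pairs copied from public opinions. The function names `collate` and `dominant_form` and the sample data are my own inventions for illustration, not part of any existing tool, and the second spacing variant in the sample is made up to show how variants would be counted.

```python
from collections import Counter, defaultdict

# Illustrative sample only: pairs of (jurisdiction, citation as observed).
# The "561 U.S. 1 (2010)" spacing variant is hypothetical.
observations = [
    ("US Supreme Court", "561 U. S. 1 (2010)"),
    ("US Supreme Court", "561  U. S. 1 (2010)"),  # stray double space
    ("US Supreme Court", "561 U.S. 1 (2010)"),
]


def collate(pairs):
    """Count each observed citation form, grouped by jurisdiction."""
    by_jurisdiction = defaultdict(Counter)
    for jurisdiction, citation in pairs:
        # Collapse runs of whitespace so copy/paste noise is not
        # mistaken for a distinct citation form.
        normalized = " ".join(citation.split())
        by_jurisdiction[jurisdiction][normalized] += 1
    return by_jurisdiction


def dominant_form(by_jurisdiction, jurisdiction):
    """Return the most frequently observed form, or None if none recorded."""
    counts = by_jurisdiction.get(jurisdiction)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    collated = collate(observations)
    for jurisdiction, counts in collated.items():
        print(jurisdiction)
        for form, seen in counts.most_common():
            print(f"  {seen} x {form}")
```

The point of counting forms rather than prescribing them is that the result is a record of how courts cite in fact, with the advisory comments on usage layered on top by the people doing the collecting.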

The courts, as practitioners of precedent,* will continue to support their self-created BlueBook monopoly.

But most judges will have difficulty distinguishing Holder, Attorney General, et al. v. Humanitarian Law Project et al. 561 U. S. 1 (2010) (following the BlueBook) from Holder, Attorney General, et al. v. Humanitarian Law Project et al. 561 U. S. 1 (2010) (following the U.S. Supreme Court and/or some recording of how cases are cited by the US Supreme Court).

If you are in the legal profession or aspire to be, don’t forget Jonathan Swift’s observation in Gulliver’s Travels:

It is a maxim among these lawyers that whatever has been done before, may legally be done again: and therefore they take special care to record all the decisions formerly made against common justice, and the general reason of mankind. These, under the name of precedents, they produce as authorities to justify the most iniquitous opinions; and the judges never fail of directing accordingly.

The inability of courts to distinguish between “BlueBook” and “non-BlueBook” citations will over time render their observance of precedent a nullity.

Not as satisfying as riding them and the Blue People down with war horns blowing but just as effective.

The Need For Visuals

If you have read this far, you obviously don’t need visuals to keep your interest in a story. Particularly a story about access to law and similarly exciting topics. It is an important topic, just not one that really gets your blood pumping.

How would you create visuals to promote public access to the laws that govern our day-to-day lives?

I’m no artist, but one thought would be to show people trying to consult law books that are chained shut by their citations. Or perhaps one or two of the identifiable Blue People as Jacob Marley-type figures with bound law books and heavy chains about them?

The “…could have shared…might have shared…” lines would work well with access to legal materials.

Ping me with suggested images. Thanks!