A new window into our world with real-time trends

From the post:

Every journey we take on the web is unique. Yet looked at together, the questions and topics we search for can tell us a great deal about who we are and what we care about. That’s why today we’re announcing the biggest expansion of Google Trends since 2012. You can now find real-time data on everything from the FIFA scandal to Donald Trump’s presidential campaign kick-off, and get a sense of what stories people are searching for. Many of these changes are based on feedback we’ve collected through conversations with hundreds of journalists and others around the world—so whether you’re a reporter, a researcher, or an armchair trend-tracker, the new site gives you a faster, deeper and more comprehensive view of our world through the lens of Google Search.

Real-time data

You can now explore minute-by-minute, real-time data behind the more than 100 billion searches that take place on Google every month, getting deeper into the topics you care about. During major events like the Oscars or the NBA Finals, you’ll be able to track the stories most people are searching for and where in the world interest is peaking. Explore this data by selecting any time range in the last week from the date picker.

…

Follow @GoogleTrends for tweets about new data sets and trends.

See GoogleTrends at: https://www.google.com/trends/

This has been in browser tab for several days. I could not decide if it was eye candy or something more serious.

After all, we are talking about searches ranging from experts to the vulgar.

I went an visited today’s results at Google Trends, and found:

- 5 Crater of Diamonds State Park, Arkansas

- 17 Ted 2, Jurassic World

- 22 World’s Ugliest Dog Contest [It doesn’t say if Trump entered or not.]

- 35 Episcopal Church

- 48 Grace Lee Boggs

- 59 Raquel Welch

- 68 Dodge, Mopar, Dodge Challenger

- 79 Xbox One, Xbox, Television

- 86 Escobar: Paradise Lost, Pablo Escobar, Benicio del Toro

- 98 Islamic State of Iraq and the Levant

I was glad to see Raquel Welch was in the top 100 but saddened that she was out scored by the Episcopal Church. That has to sting.

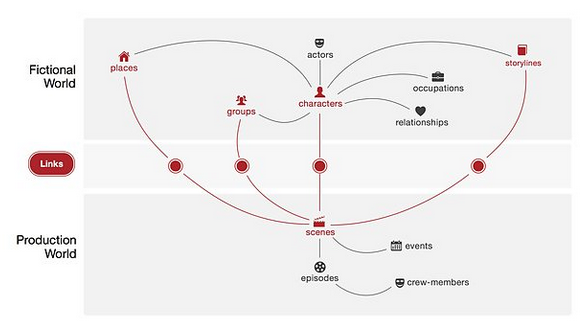

When I think of topic maps that I can give you as examples, they involve taxes, Castrati, and other obscure topics. My favorite use case is an ancient text annotated with commentaries and comparative linguistics based on languages no longer spoken.

I know what interests me but not what interests other people.

Thoughts on using Google Trends to pick “hot” topics for topic mapping?