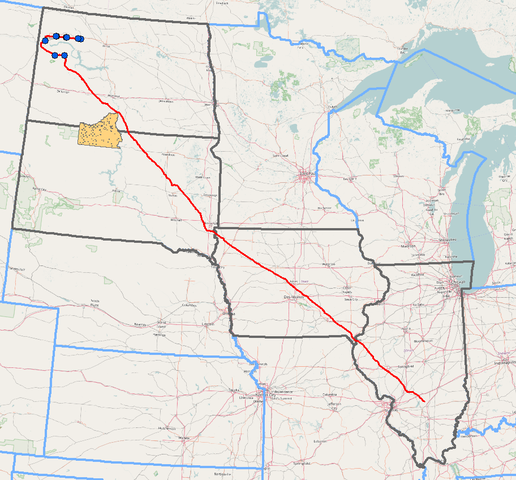

If you use public feeds from traffic cams to guide or monitor disruptions (see Public Spy (Traffic) Cams), or “leak” that you are using public feeds in that manner, government authorities are likely to interrupt public access to those feeds.

The presence of numerous wi-fi hotspots and inexpensive wi-fi video cameras suggests the most natural counter to such interruptions.

Unlike government actors, you know which locations are important and which disruptions are false flags (including random events that attract attention), and you benefit from the public uncertainty caused by any interruption of public services, such as traffic cams.

As an illustration and not a suggestion, if cars caught in gridlock come under attack, say a pattern of attacks over several days, motorists caught in ordinary gridlock become more nervous and authorities view accidents or other causes with heightened suspicion, whether you are the cause of the gridlock or not.

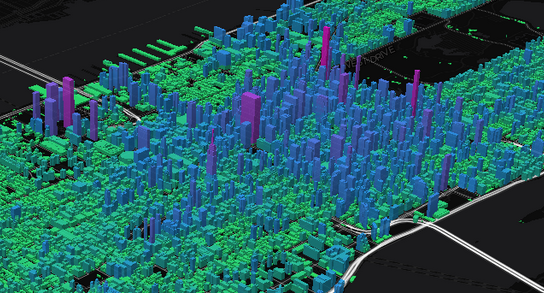

Authorities suffer from apophenia, that is, “seeing apparently meaningful connections between unrelated patterns, data or phenomena.” (See also “What is pareidolia?”, on a sub-class of apophenia.) Perhaps it is more than apophenia, because actively searching for patterns makes them more likely to discover false ones. With an eye for patterns, you can foster their recognition of false ones. [FYI, false patterns are “subjects” in the topic maps. May include data on their creation.]