Drawing Causal Inference from Big Data.

Overview:

This colloquium was motivated by the exponentially growing amount of information collected about complex systems, colloquially referred to as “Big Data”. It was aimed at methods to draw causal inference from these large data sets, most of which are not derived from carefully controlled experiments. Although correlations among observations are vast in number and often easy to obtain, causality is much harder to assess and establish, partly because causality is a vague and poorly specified construct for complex systems. Speakers discussed both the conceptual framework required to establish causal inference and designs and computational methods that can allow causality to be inferred. The program illustrates state-of-the-art methods with approaches derived from such fields as statistics, graph theory, machine learning, philosophy, and computer science, and the talks will cover such domains as social networks, medicine, health, economics, business, internet data and usage, search engines, and genetics. The presentations also addressed the possibility of testing causality in large data settings, and will raise certain basic questions: Will access to massive data be a key to understanding the fundamental questions of basic and applied science? Or does the vast increase in data confound analysis, produce computational bottlenecks, and decrease the ability to draw valid causal inferences?

Videos of the talks are available on the Sackler YouTube Channel. More videos will be added as they are approved by the speakers.

Great material, but I’m in the David Hume camp when it comes to causality. Or, more properly, the sceptical realist interpretation of David Hume. The contemporary claim that ISIS is a social media Svengali is a good case in point. The only two “facts” that are not in dispute are that ISIS has used social media and that some Westerners have in fact joined ISIS.

Both of those facts are true, but to assert a causal link between them borders on the bizarre. Joshua Berlinger reports in “The names: Who has been recruited to ISIS from the West” that some twenty thousand (20,000) foreign fighters have joined ISIS. That group of foreign fighters hails from ninety (90) countries, and thirty-four hundred (3,400) are from Western states.

Even without Hume’s skepticism on causation, there is no evidence for the proposition that current foreign fighters read about ISIS on social media and therefore decided to join up. None, nada, the empty set. The causal link between social media and ISIS is wholly fictional and made to further other policy goals, like censoring ISIS content.

Be careful how you throw “causality” about when talking about big data or data in general.
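To make the correlation-versus-causation point concrete, here is a minimal sketch on purely synthetic data. It is not modeled on any real data set, and the variable names (`z`, `a_obs`, `b_obs`) and the noise model are assumptions chosen only for illustration. A hidden factor Z drives both A and B, so A and B are correlated even though neither causes the other. When A is instead assigned at random, the correlation disappears.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 20_000

# Hypothetical hidden common cause Z (never "observed" by the analyst).
z = [rng.gauss(0, 1) for _ in range(n)]

# Observational setting: A and B each depend on Z plus independent noise.
# There is no arrow from A to B or from B to A.
a_obs = [zi + rng.gauss(0, 1) for zi in z]
b_obs = [zi + rng.gauss(0, 1) for zi in z]
r_observed = pearson(a_obs, b_obs)

# Interventional setting: A is assigned at random, independent of Z.
# B is generated exactly as before, because A never influenced it.
a_set = [rng.gauss(0, 1) for _ in range(n)]
b_after = [zi + rng.gauss(0, 1) for zi in z]
r_intervened = pearson(a_set, b_after)

print(f"correlation when merely observing A and B: {r_observed:.3f}")
print(f"correlation when A is set at random:       {r_intervened:.3f}")
```

Under these assumptions the observed correlation is about 0.5 in expectation and the intervened one is about 0, which is the gap between "A and B move together" and "A causes B".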

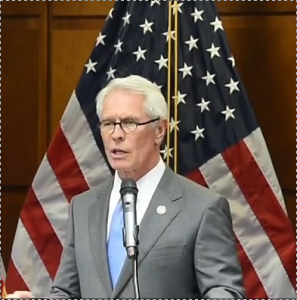

The listing of the current videos at YouTube has the author names only and does not include the titles or abstracts. To make these slightly more accessible, I have created the following listing with the author, title (link to YouTube if available), and Abstract/Slides as appropriate. The listing is in alphabetical order by last name. Author names are hyperlinks to identify the authors.

Edo Airoldi, Harvard University, Optimal Design of Causal Experiments in the Presence of Social Interference. Abstract.

Susan Athey, Stanford University, Estimating Heterogeneous Treatment Effects Using Machine Learning in Observational Studies. Slides.

Leon Bottou, Facebook AI Research, Causal Reasoning and Learning Systems. Abstract.

Peter Bühlmann, ETH Zurich, Causal Inference Based on Invariance: Exploiting the Power of Heterogeneous Data. Slides.

Dean Eckles, Facebook, Identifying Peer Effects in Social Networks. Abstract.

James Fowler, University of California, San Diego, An 85 Million Person Follow-up to a 61 Million Person Experiment in Social Influence and Political Mobilization. Abstract.

Michael Hawrylycz, Allen Institute, Project MindScope: From Big Data to Behavior in the Functioning Cortex. Abstract.

David Heckerman, Microsoft Corporation, Causal Inference in the Presence of Hidden Confounders in Genomics. Slides.

Michael Jordan, University of California, Berkeley, On Computational Thinking, Inferential Thinking and Big Data. Abstract.

Steven Levitt, The University of Chicago, Thinking Differently About Big Data. Abstract.

David Madigan, Columbia University, Honest Inference From Observational Database Studies. Abstract.

Judea Pearl, University of California, Los Angeles, Taming the Challenge of Extrapolation: From Multiple Experiments and Observations to Valid Causal Conclusions. Slides.

Thomas Richardson, University of Washington, Non-parametric Causal Inference. Abstract.

James Robins, Harvard University, Personalized Medicine, Optimal Treatment Strategies, and First Do No Harm: Time Varying Treatments and Big Data. Abstract.

Bernhard Schölkopf, Max Planck Institute, Toward Causal Machine Learning. Abstract.

Jasjeet Sekhon, University of California, Berkeley, Combining Experiments with Big Data to Estimate Treatment Effects. Abstract.

Richard Shiffrin, Indiana University, The Big Data Sea Change. Abstract.

I call your attention to this part of Shiffrin’s abstract:

Second, having found a pattern, how can we explain its causes?

This is the focus of the present Sackler Colloquium. If in a terabyte data base we notice factor A is correlated with factor B, there might be a direct causal connection between the two, but there might be something like 2**300 other potential causal loops to be considered. Things could be even more daunting: To infer probabilities of causes could require consideration [of] all distributions of probabilities assigned to the 2**300 possibilities. Such numbers are both fanciful and absurd, but are sufficient to show that inferring causality in Big Data requires new techniques. These are under development, and we will hear some of the promising approaches in the next two days.
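For a sense of scale, here is a quick sketch of how large the 2**300 figure in the abstract is. The enumeration rate used for comparison (one billion candidates per second) is an arbitrary assumption of mine, not something from the abstract.

```python
# Python integers are arbitrary precision, so 2**300 is computed exactly.
n_loops = 2 ** 300

# Number of decimal digits in 2**300.
digits = len(str(n_loops))

# Hypothetical enumeration rate, chosen only for illustration:
# one billion candidate causal loops checked per second.
checks_per_second = 10 ** 9
seconds_per_year = 365 * 24 * 60 * 60

# Whole years needed to enumerate every candidate at that rate.
years_needed = n_loops // (checks_per_second * seconds_per_year)

print(f"2**300 has {digits} decimal digits")
print(f"years to enumerate at 1e9 checks/second: about 10**{len(str(years_needed)) - 1}")
```

Brute-force enumeration is off the table at this size, which is the abstract's point about needing new techniques.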

John Stamatoyannopoulos, University of Washington, Decoding the Human Genome: From Sequence to Knowledge.

Hal Varian, Google, Inc., Causal Inference, Econometrics, and Big Data Abstract.

Bin Yu, University of California, Berkeley, Lasso Adjustments of Treatment Effect Estimates in Randomized Experiments Abstract.

If you are interested in starting an argument, watch the Steven Levitt video starting at timemark 46:20.

Enjoy!