Linked Data Integration with Conflicts by Jan Michelfeit, Tomáš Knap, Martin Nečaský.


Abstract:

Linked Data have emerged as a successful publication format and one of its main strengths is its fitness for integration of data from multiple sources. This gives them a great potential both for semantic applications and the enterprise environment where data integration is crucial. Linked Data integration poses new challenges, however, and new algorithms and tools covering all steps of the integration process need to be developed. This paper explores Linked Data integration and its specifics. We focus on data fusion and conflict resolution: two novel algorithms for Linked Data fusion with provenance tracking and quality assessment of fused data are proposed. The algorithms are implemented as part of the ODCleanStore framework and evaluated on real Linked Open Data.

Conflicts in Linked Data? The authors explain:

The contribution of this paper covers the data fusion phase with conflict resolution and a conflict-aware quality assessment of fused data. We present new algorithms that are implemented in ODCleanStore and are also available as a standalone tool ODCS-FusionTool.

Data fusion is the step where actual data merging happens – multiple records representing the same real-world object are combined into a single, consistent, and clean representation [3]. In order to fulfill this definition, we need to establish a representation of a record, purge uncertain or low-quality values, and resolve identity and other conflicts. Therefore we regard conflict resolution as a subtask of data fusion.

Conflicts in data emerge during the data fusion phase and can be classified as schema, identity, and data conflicts. Schema conflicts are caused by different source data schemata – different attribute names, data representations (e.g., one or two attributes for name and surname), or semantics (e.g., units). Identity conflicts are a result of different identifiers used for the same real-world objects. Finally, data conflicts occur when different conflicting values exist for an attribute of one object.

Conflict can be resolved on entity or attribute level by a resolution function. Resolution functions can be classified as deciding functions, which can only choose values from the input such as the maximum value, or mediating functions, which may produce new values such as average or sum [3].

…
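The split between deciding and mediating resolution functions quoted above is easy to see in a few lines of Python. This is only a minimal sketch: the function names `resolve_max` and `resolve_avg` and the population figures are made up for illustration and are not taken from the paper or from ODCS-FusionTool.

```python
def resolve_max(values):
    """Deciding function: the result is always one of the input values."""
    return max(values)


def resolve_avg(values):
    """Mediating function: the result may be a value no source ever stated."""
    return sum(values) / len(values)


# Hypothetical conflicting population figures for one entity, one per source.
population = [3_400_000, 3_500_000, 3_750_000]

chosen = resolve_max(population)    # picked from the input
computed = resolve_avg(population)  # newly produced value
```

Note the practical consequence: a deciding function's output can still be traced back to a specific source, while a mediating function's output cannot.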

Oh, so the semantic diversity of data simply flowed into Linked Data representation.

Hmmm, watch for a basis in the data for resolving schema, identity and data conflicts.

The related work section is particularly rich with references to non-Linked Data conflict resolution projects. Definitely worth a close read and chasing the references.

To examine the data fusion and conflict resolution algorithm the authors start by restating the problem:

- Different identifying URIs are used to represent the same real-world entities.

- Different schemata are used to describe data.

- Data conflicts emerge when RDF triples sharing the same subject and predicate have inconsistent values in place of the object.
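To make the restated problem concrete, here is a rough Python sketch of how a data conflict could be detected once identifiers have been mapped together. Everything in it is an assumption for illustration: the `find_data_conflicts` helper, the `ex:` and `other:` URIs, and the flat `same_as` dictionary (which presumes each alias already points directly at its canonical URI). It is not the authors' algorithm.

```python
from collections import defaultdict


def find_data_conflicts(triples, same_as=None):
    """Group objects by (subject, predicate) and keep groups with >1 distinct object.

    same_as maps an alias URI to its canonical URI (assumed supplied externally).
    """
    same_as = same_as or {}
    objects = defaultdict(set)
    for subject, predicate, obj in triples:
        canonical = same_as.get(subject, subject)  # resolve identity conflict
        objects[(canonical, predicate)].add(obj)
    # More than one distinct object for the same subject and predicate.
    return {key: vals for key, vals in objects.items() if len(vals) > 1}


# Hypothetical triples from two sources describing the same city.
triples = [
    ("ex:Berlin", "ex:population", "3400000"),
    ("other:Berlin_DE", "ex:population", "3500000"),
    ("ex:Berlin", "ex:country", "ex:Germany"),
]
same_as = {"other:Berlin_DE": "ex:Berlin"}

conflicts = find_data_conflicts(triples, same_as)
```

Without the `same_as` mapping the two population triples have different subjects and no conflict is reported at all, which is why the origin of that mapping matters so much. The sketch also flags every multi-valued predicate, even ones where several objects are legitimate.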

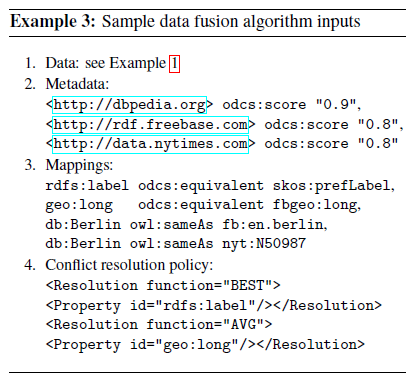

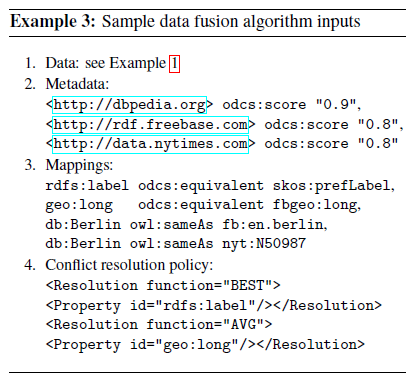

I am skipping all the notation manipulation for the quads, etc., mostly because of the inputs into the algorithm:

As a result of human intervention, the different identifying URIs have been mapped together. Not to mention the weighting of the metadata and the desired resolution for data conflicts (location data).

With that intervention, the complex RDF notation and manipulation becomes irrelevant.

Moreover, as I am sure you are aware, there is more than one “Berlin” listed in DBpedia. Several dozen as I recall.

I mention that because the process as described does not say where the authors of the rules/mappings obtained the information necessary to distinguish one Berlin from another.

That is critical for another author to evaluate the correctness of their mappings.

At the end of the day, after the “resolution” proposed by the authors, we are in no better position to map their result to another than we were at the outset. We have bald statements with no additional data on which to evaluate those statements.

Give Appendix A, List of Conflict Resolution Functions, a close read. The authors have extracted conflict resolution functions from the literature. Should be a time saver as well as suggestive of other needed resolution functions.

PS: If you look for ODCS-FusionTool you will find LD-Fusion Tool (GitHub), which was renamed to ODCS-FusionTool a year ago. See also the official LD-FusionTool webpage.