Why Most Published Research Findings Are False by John Baez.

John’s post is based on John P. A. Ioannidis, Why most published research findings are false, PLoS Medicine 2 (2005), e124, and is very much worth your time to read carefully.

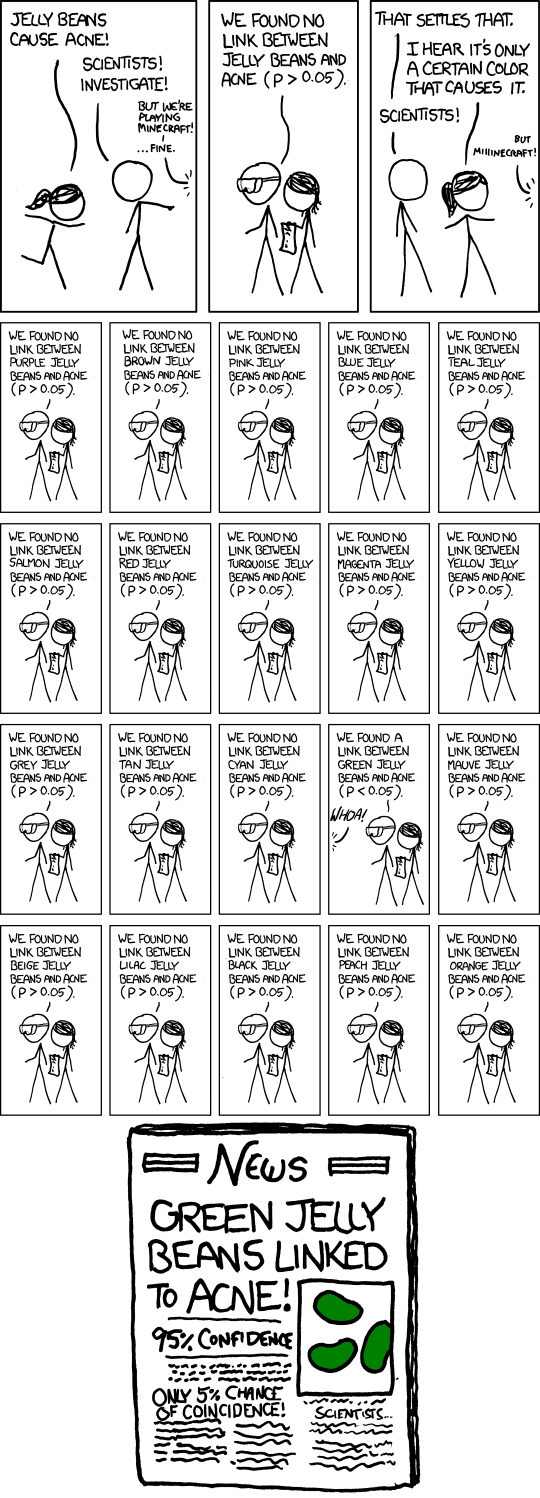

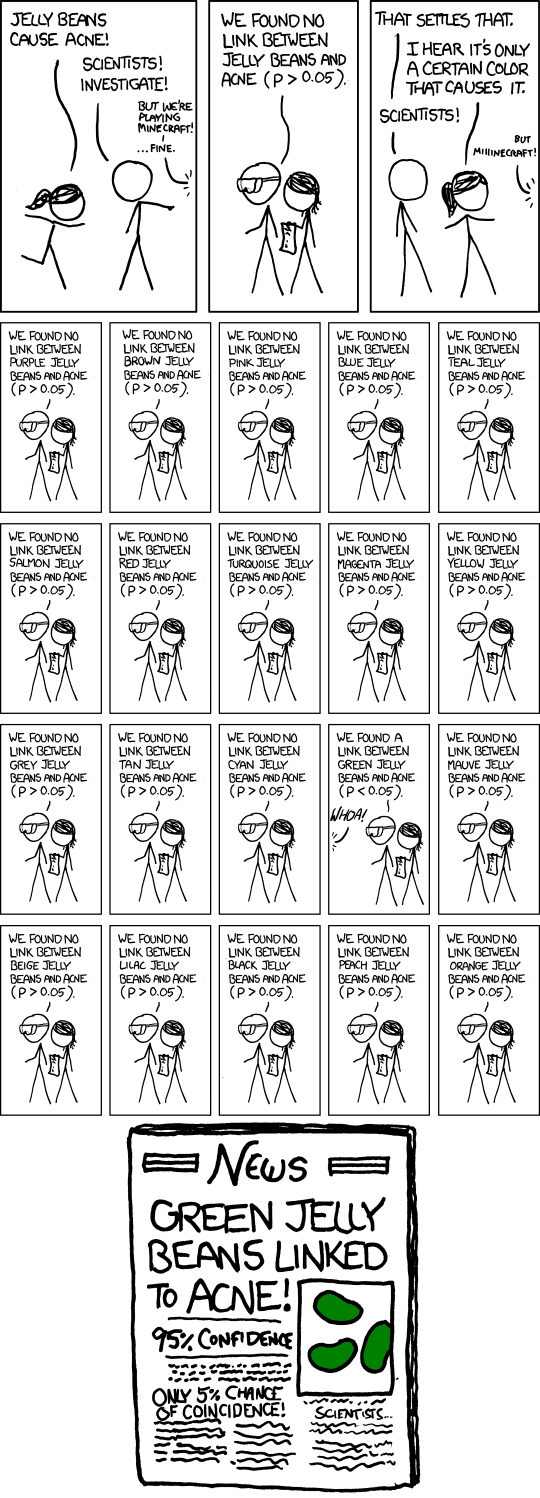

Here is a cartoon that illustrates one problem with research findings (John uses it and it appears in the original paper):

The danger of attributing false significance isn’t limited to statistical data.

Consider Vinson Cerf’s Freedom and the Social Contract in the most recent issue of CACM.

Vinson writes in discussing privacy versus the need for security:

In today’s world, threats to our safety and threats to national security come from many directions and not all or even many of them originate from state actors. If I can use the term “cyber-safety” to suggest safety while making use of the content and tools of the Internet, World Wide Web, and computing devices in general, it seems fair to say the expansion of these services and systems has been accompanied by a growth in their abuse. Moreover, it has been frequently observed that there is an asymmetry in the degree of abuse and harm that individuals can perpetrate on citizens, and on the varied infrastructure of our society. Vast harm and damage may be inflicted with only modest investment in resources. Whether we speak of damage and harm using computer-based tools or damage from lethal, homemade explosives, the asymmetry is apparent. While there remain serious potential threats to the well-being of citizens from entities we call nation- states, there are similarly serious potential threats originating with individuals and small groups.

None of which is false and the reader with a vague sense that some “we” is in danger from known and unknown actors.

To what degree? Unknown. Of what harm? Unknown. Chances of success? Unknown. Personal level of danger? Unknown.

What we do know is that on September 11, 2001, approximately 3,000 people died. Twelve years ago.

Deaths from medical misadventure are estimated to be 98,000 per year.

12 X 98,000 = 1,176,000 or 392 9/11 attack death totals.

Deaths due to medical misadventure are not known accurately but the overall comparison is a valid one.

Your odds of dying from medical misadventure are far higher than dying from a terrorist attack.

But, Vinson doesn’t warn you against death by medical misadventure. Instead you are warned there is some vague, even nebulous individuals or groups that seek to do you harm.

An unknown degree of harm. With some unknown rate of incidence.

And that position is to be taken seriously in a debate over privacy?

Most terrorism warnings are too vague for meaningful policy debate.