Simple Ain’t Easy: Real-World Problems with Basic Summary Statistics by John Myles White.

From the webpage:

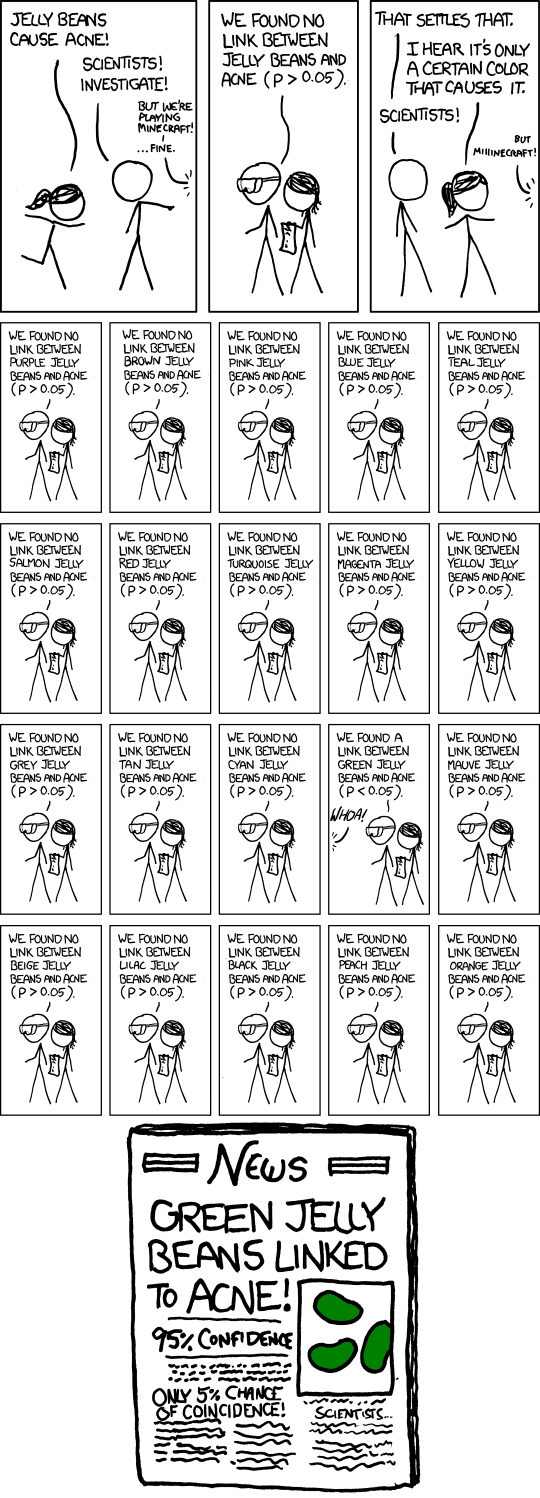

In applied statistical work, the use of even the most basic summary statistics, like means, medians and modes, can be seriously problematic. When forced to choose a single summary statistic, many considerations come into play.

This repo attempts to describe some of the non-obvious properties possessed by standard statistical methods so that users can make informed choices about methods.
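Here is one such non-obvious property, shown in a minimal Python sketch. It is not taken from the repo, and the sample values are made up for illustration. A single extreme value can drag the mean far from the bulk of the data, while the median and mode barely move. The helper `summarize` is hypothetical; `mean`, `median`, and `multimode` come from Python's standard `statistics` module (Python 3.8+).

```python
from statistics import mean, median, multimode

def summarize(xs):
    """Return the mean, median, and list of modes of a sample."""
    return mean(xs), median(xs), multimode(xs)

# Hypothetical sample: nine modest values (e.g. incomes in thousands).
typical = [30, 32, 35, 35, 38, 40, 41, 45, 50]
# The same sample with one extreme value appended.
with_outlier = typical + [1000]

for label, xs in [("typical", typical), ("with outlier", with_outlier)]:
    m, med, modes = summarize(xs)
    print(f"{label:>12}: mean={m:.1f} median={med} modes={modes}")
```

Adding the one outlier moves the mean from about 38.4 to 134.6. Over the same change, the median moves only from 38 to 39, and the mode stays at 35. Which of these numbers is the "right" summary depends on the question being asked.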

Contributing

The reason I chose to announce a book of examples isn’t just pedagogical: by writing fully independent examples, it’s possible to write a book as a community working in parallel. If 30 people each contributed 10 examples over the next month, we’d have a full-length book containing 300 examples in our hands. In practice, things are complicated by the need to make sure that examples aren’t redundant or low quality, but it’s still possible to make this book a large-scale community project.

As such, I hope you’ll consider contributing. To contribute, just submit a new example. If your example only requires text, you only need to write a short LaTeX-flavored Markdown document. If you need images, please include R code that generates your images.

This is a great project for several reasons.

First, you can contribute to a public resource that may improve the use of summary statistics.

Second, you have the opportunity to search the literature for examples of summary statistics in use. That will improve both your searching skills and your data skepticism: the first from finding the examples, the second from seeing how statistics are used in the “wild.”

I don't want to bang on statistics too harshly. I also review standards in which the authors have forgotten how to use quotes and footnotes. Sixth-grade stuff.

Third, and to me the most important reason, as you review the work of others, you will become more conscious of similar mistakes in your own writing.

Think of contributions to Simple Ain’t Easy as exercises in self-improvement that benefit others.