Show and Tell: A Neural Image Caption Generator by Oriol Vinyals, Alexander Toshev, Samy Bengio, Dumitru Erhan.

Abstract:

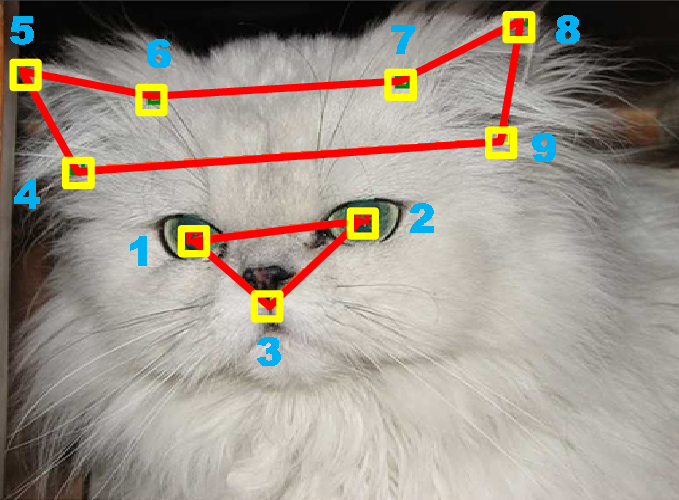

Automatically describing the content of an image is a fundamental problem in artificial intelligence that connects computer vision and natural language processing. In this paper, we present a generative model based on a deep recurrent architecture that combines recent advances in computer vision and machine translation and that can be used to generate natural sentences describing an image. The model is trained to maximize the likelihood of the target description sentence given the training image. Experiments on several datasets show the accuracy of the model and the fluency of the language it learns solely from image descriptions. Our model is often quite accurate, which we verify both qualitatively and quantitatively. For instance, while the current state-of-the-art BLEU score (the higher the better) on the Pascal dataset is 25, our approach yields 59, to be compared to human performance around 69. We also show BLEU score improvements on Flickr30k, from 55 to 66, and on SBU, from 19 to 27.
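As a gloss on "trained to maximize the likelihood of the target description sentence given the training image," the objective can be written roughly as follows. The symbols are my notation for illustration: $I$ is an image, $S = (S_0, \dots, S_N)$ its description as a word sequence, and $\theta$ the model parameters.

$$
\theta^{*} = \arg\max_{\theta} \sum_{(I,\,S)} \log p(S \mid I; \theta),
\qquad
\log p(S \mid I; \theta) = \sum_{t=0}^{N} \log p(S_t \mid I, S_0, \dots, S_{t-1}; \theta)
$$

The second expression is just the chain rule applied word by word, which is what lets a recurrent network model each next-word probability in turn.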

Another caption-generating program for images (see also Deep Visual-Semantic Alignments for Generating Image Descriptions). It is not quite at the performance of a human observer, but quite respectable. The near misses are amusing enough that crowd correction could be an element of a full-blown system.

Perhaps “rough recognition” is close enough for some purposes, such as searching images for people who match a partial description and producing a much smaller set for additional processing.
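To make the "smaller set" idea concrete, here is a minimal sketch, assuming captions have already been generated by some model and stored per image. The function name `shortlist_images`, the file names, and the sample captions are all hypothetical and chosen only for illustration; nothing here comes from the paper.

```python
import re


def shortlist_images(captions, required_terms):
    """Return ids of images whose caption contains every required term.

    `captions` maps an image id to a machine-generated caption (assumed input).
    Matching is on whole lowercase words, so "man" does not match "woman".
    """
    wanted = {term.lower() for term in required_terms}
    matches = []
    for image_id, caption in captions.items():
        words = set(re.findall(r"[a-z0-9']+", caption.lower()))
        if wanted <= words:  # every wanted term appears in the caption
            matches.append(image_id)
    return matches


# Hypothetical captions standing in for model output.
captions = {
    "img_001.jpg": "a man in a red jacket riding a bicycle",
    "img_002.jpg": "two dogs playing in the snow",
    "img_003.jpg": "a woman in a red coat standing near a bus",
}

print(shortlist_images(captions, ["red", "man"]))
```

Because captions are only roughly right, a filter like this would be a first pass: it trades some missed matches for a much smaller pile to hand to a person or a slower, more precise process.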

I first saw this in Nat Torkington’s Four short links: 18 November 2014.