Open Educational Resources for Biomedical Big Data (R25)

Deadline for submission: April 1, 2014

Additional information: bd2k_training@mail.nih.gov

As part of the NIH Big Data to Knowledge (BD2K) project, the BD2K R25 FOA will support:

Curriculum or Methods Development of innovative open educational resources that enhance the ability of the workforce to use and analyze biomedical Big Data.

The challenges:

The major challenges to using biomedical Big Data include the following:

Locating data and software tools: Investigators need straightforward means of knowing what datasets and software tools are available and where to obtain them, along with descriptions of each dataset or tool. Ideally, investigators should be able to easily locate all published and resource datasets and software tools, both basic and clinical, and, to the extent possible, unpublished or proprietary data and software.

Gaining access to data and software tools: Investigators need straightforward means of 1) releasing datasets and metadata in standard formats; 2) obtaining access to specific datasets or portions of datasets; 3) studying datasets with the appropriate software tools in suitable environments; and 4) obtaining analyzed datasets.

Standardizing data and metadata: Investigators need data to be in standard formats to facilitate interoperability, data sharing, and the use of tools to manage and analyze the data. The datasets need to be described by standard metadata to allow novel uses as well as reuse and integration.

Sharing data and software: While significant progress has been made in broad and rapid sharing of data and software, it is not yet the norm in all areas of biomedical research. More effective data- and software-sharing would be facilitated by changes in the research culture, recognition of the contributions made by data and software generators, and technical innovations. Validation of software to ensure quality, reproducibility, provenance, and interoperability is a notable goal.

Organizing, managing, and processing biomedical Big Data: Investigators need biomedical data to be organized and managed in a robust way that allows them to be fully used; currently, most data are not sufficiently well organized. Barriers exist to releasing, transferring, storing, and retrieving large amounts of data. Research is needed to design innovative approaches and effective software tools for organizing biomedical Big Data for data integration and sharing while protecting human subject privacy.

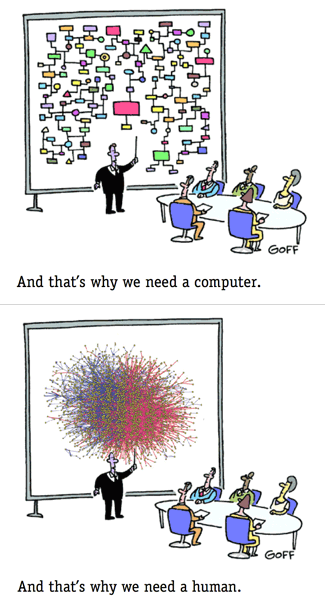

Developing new methods for analyzing biomedical Big Data: The size, complexity, and multidimensional nature of many datasets make data analysis extremely challenging. Substantial research is needed to develop new methods and software tools for analyzing such large, complex, and multidimensional datasets. User-friendly data workflow platforms and visualization tools are also needed to facilitate the analysis of Big Data.

Training researchers for analyzing biomedical Big Data: Advances in biomedical sciences using Big Data will require more scientists with the appropriate data science expertise and skills to develop methods and design tools, including those in many quantitative science areas such as computational biology, biomedical informatics, biostatistics, and related areas. In addition, users of Big Data software tools and resources must be trained to utilize them well.

Another funding opportunity for biomedical Big Data integration!

I do wonder about the suggestion:

The datasets need to be described by standard metadata to allow novel uses as well as reuse and integration.

Do they mean:

“Standard” metadata for a particular academic lab?

“Standard” metadata for a particular industry lab?

“Standard” metadata for either one five (5) years ago?

“Standard” metadata for either one five (5) years from now?

The problem is the familiar one: knowledge that isn’t moving forward is outdated.

It’s hard to do good research with outdated information.

Making metadata dynamic, so that it reflects yesterday’s terminology, today’s and someday tomorrow’s, would be far more useful.

Each user would see the metadata in the vocabulary of their choice, not the complexities that make the metadata dynamic.
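To make the idea concrete, here is a minimal sketch in Python of what "dynamic" metadata might look like. Everything in it is an assumption for illustration: the class names (Subject, DynamicMetadata), the vocabulary names ("lab_2009", "lab_2014"), and the sample terms are all hypothetical, and nothing here comes from the FOA. The point is only that several terms can identify one subject, and that the user picks which term gets displayed.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Subject:
    """One thing being described, with one label per vocabulary."""
    labels: Dict[str, str] = field(default_factory=dict)  # vocabulary -> term


class DynamicMetadata:
    """Hypothetical registry: many terms, across vocabularies, per subject."""

    def __init__(self) -> None:
        self._index: Dict[str, Subject] = {}  # lower-cased term -> subject

    def add_term(self, vocabulary: str, term: str,
                 same_as: Optional[str] = None) -> Subject:
        """Register a term. If same_as names an already known term,
        the new term is attached to that term's subject."""
        key = term.lower()
        if same_as is not None:
            subject = self._index[same_as.lower()]
        else:
            subject = self._index.get(key) or Subject()
        subject.labels[vocabulary] = term
        self._index[key] = subject
        return subject

    def display(self, term: str, preferred: str) -> str:
        """Show any known term in the user's preferred vocabulary.
        Falls back to the term as given if the subject has no label there."""
        subject = self._index[term.lower()]
        return subject.labels.get(preferred, term)


# Illustrative data only: yesterday's term and today's term, one subject.
registry = DynamicMetadata()
registry.add_term("lab_2009", "tumour sample")
registry.add_term("lab_2014", "neoplasm specimen", same_as="tumour sample")

# A user who prefers the 2009 vocabulary still finds data labeled in 2014.
print(registry.display("neoplasm specimen", preferred="lab_2009"))  # tumour sample
```

Adding tomorrow's terminology is one more add_term call; nothing already recorded has to be rewritten, and the user never sees the index that ties the terms together.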

Interested?