Preface: I don’t think the results reported by the authors will surprise anyone. Heretofore the evidence has been anecdotal and piecemeal, whispered at conferences, all of which made it easier to sustain the myth of an academic meritocracy. In the face of careful analysis of nearly 19,000 faculty across three distinct disciplines, sustaining the meritocracy myth will be much harder. It has been my honor to know truly meritorious scholars, but I have also known the socialite type.

Systematic inequality and hierarchy in faculty hiring networks by Aaron Clauset, Samuel Arbesman, Daniel B. Larremore. (Science Advances 01 Feb 2015: Vol. 1 no. 1 e1400005 DOI: 10.1126/sciadv.1400005)

Abstract:

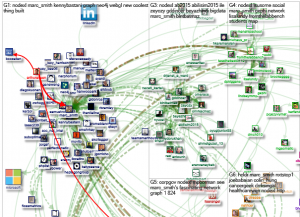

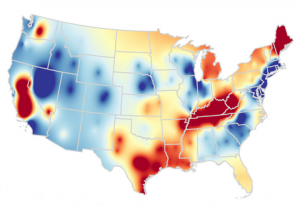

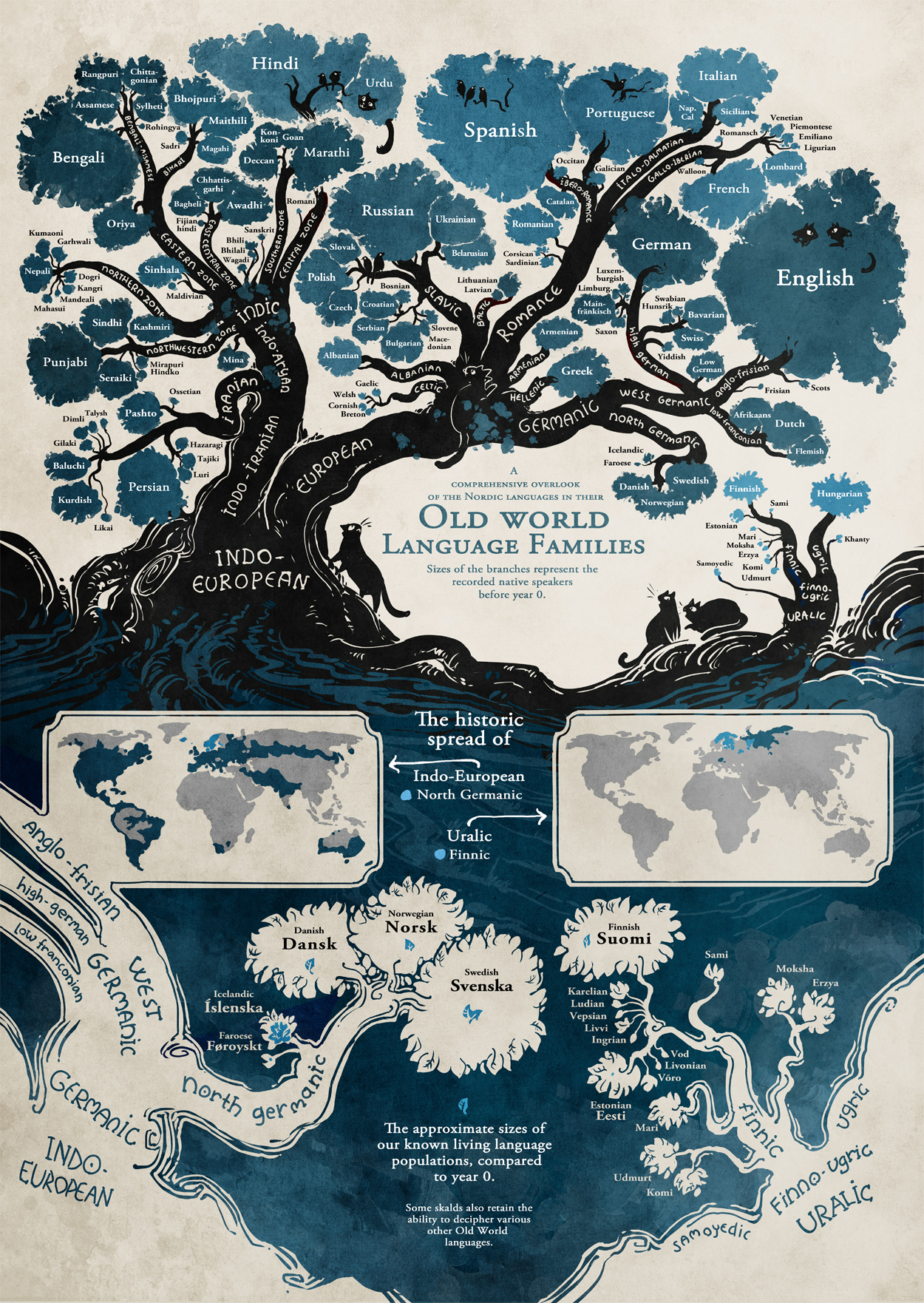

The faculty job market plays a fundamental role in shaping research priorities, educational outcomes, and career trajectories among scientists and institutions. However, a quantitative understanding of faculty hiring as a system is lacking. Using a simple technique to extract the institutional prestige ranking that best explains an observed faculty hiring network—who hires whose graduates as faculty—we present and analyze comprehensive placement data on nearly 19,000 regular faculty in three disparate disciplines. Across disciplines, we find that faculty hiring follows a common and steeply hierarchical structure that reflects profound social inequality. Furthermore, doctoral prestige alone better predicts ultimate placement than a U.S. News & World Report rank, women generally place worse than men, and increased institutional prestige leads to increased faculty production, better faculty placement, and a more influential position within the discipline. These results advance our ability to quantify the influence of prestige in academia and shed new light on the academic system.
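For readers curious about the "simple technique" the abstract mentions: as I understand it, the paper ranks institutions so that as few hires as possible move "up" the hierarchy (a minimum violation ranking). Below is a toy Python sketch of that idea under my own assumptions, not the authors' code. The hiring network is a dict mapping hypothetical (doctoral institution, hiring institution) pairs to hire counts, and the search is a plain random-swap hill climb, which may well differ from the procedure the authors actually used.

```python
import random
from collections import Counter

def violations(order, edges):
    """Weighted count of hires that move 'up': the hiring institution
    is ranked above the doctoral institution (index 0 = top)."""
    rank = {inst: i for i, inst in enumerate(order)}
    return sum(w for (phd, hire), w in edges.items() if rank[hire] < rank[phd])

def min_violation_ranking(edges, iters=20000, seed=0):
    """Hill-climb over orderings by random pairwise swaps, keeping any
    swap that does not increase the violation count (an assumed search
    strategy, chosen for simplicity)."""
    rng = random.Random(seed)
    produced = Counter()  # faculty produced per doctoral institution
    for (phd, hire), w in edges.items():
        produced[phd] += w
        produced[hire] += 0  # make sure institutions that only hire appear too
    # Start from the heuristic "more graduates placed = more prestigious".
    order = sorted(produced, key=lambda inst: (-produced[inst], inst))
    best = violations(order, edges)
    if len(order) < 2:
        return order, best
    for _ in range(iters):
        i, j = rng.sample(range(len(order)), 2)
        order[i], order[j] = order[j], order[i]
        score = violations(order, edges)
        if score <= best:
            best = score  # accept: no worse than before
        else:
            order[i], order[j] = order[j], order[i]  # undo the swap
    return order, best
```

Cycles (two schools hiring each other's graduates) mean the minimum is rarely zero, and a swap search like this can stall in a local optimum, so treat its output as a rough ordering rather than the hierarchy.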

A must-read for its techniques and methodology, and for its broader implications for our research and educational institutions and for society at large.

The authors’ conclusion is quite chilling:

More broadly, the strong social inequality found in faculty placement across disciplines raises several questions. How many meritorious research careers are derailed by the faculty job market’s preference for prestigious doctorates? Would academia be better off, in terms of collective scholarship, with a narrower gap in placement rates? In addition, if collective scholarship would improve with less inequality, what changes would do more good than harm in practice? These are complicated questions about the structure and efficacy of the academic system, and further study is required to answer them. We note, however, that economics and the study of income and wealth inequality may offer some insights about the practical consequences of strong inequality (13).
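The authors point to the study of income and wealth inequality. The standard yardstick there is the Gini coefficient (0 = everyone equal, values near 1 = one party has nearly everything), and nothing stops you from applying it to how many faculty each institution produces. A minimal Python sketch, assuming you already have a list of per-institution production counts (the function name `gini` is my own):

```python
def gini(values):
    """Gini coefficient of non-negative counts, using the closed form for
    sorted data: G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n, i = 1..n."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0  # no production at all: treat as perfectly equal
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return (2 * weighted) / (n * total) - (n + 1) / n
```

Four institutions producing 10 faculty each give 0.0; if one of the four produces all 40, the result is 0.75, the maximum possible for four parties.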

In closing, there is nothing specific to faculty hiring in our network analysis, and the same methods for extracting prestige hierarchies from interaction data could be applied to study other forms of academic activities, for example, scientific citation patterns among institutions (32). These methods could also be used to characterize the movements of employees among firms within or across commercial sectors, which may shed light on mechanisms for economic and social mobility (33). Finally, because graduate programs admit as students the graduates of other institutions, a similar approach could be used to assess the educational outcomes of undergraduate programs.

I think there are three options at this point:

- Punish the data

- Ignore the data

- See where the data takes us

Which one are you, and your academic institution (if any), going to choose?

If you are outside academia, you might want to make a similar study of your organization or industry to help plot your career.

If you are outside academia and the private sector, consider a similar study of government.

I discovered this paper via a tweet by Aaron Clauset pointing to the Faculty Hiring Networks data page.