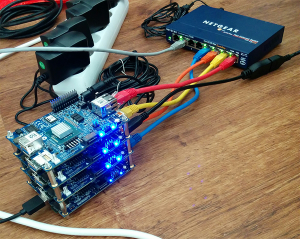

Meet Fenton (my data crunching machine) by Alex Staravoitau.

From the post:

As you might be aware, I have been experimenting with AWS as a remote GPU-enabled machine for a while, configuring Jupyter Notebook to use it as a backend. It seemed to work fine, although costs did build over time, and I had to always keep in mind to shut it off, alongside with a couple of other limitations. Long story short, around 3 months ago I decided to build my own machine learning rig.

My idea in a nutshell was to build a machine that would only act as a server, being accessible from anywhere to me, always ready to unleash its computational powers on whichever task I’d be working on. Although this setup did take some time to assess, assemble and configure, it has been working flawlessly ever since, and I am very happy with it.

…

This is the most crucial part. After serious consideration and leveraging the budget I decided to invest into EVGA GeForce GTX 1080 8GB card backed by Nvidia GTX 1080 GPU. It is really snappy (and expensive), and in this particular case it only takes 15 minutes to run — 3 times faster than a g2.2xlarge AWS machine! If you still feel hesitant, think of it this way: the faster your model runs, the more experiments you can carry out over the same period of time.

… (emphasis in original)

Total for this GPU rig? £1562.26

You now know the fate of your next big advance. 😉

If you are interested in comparing the performance of a Beowulf cluster, see: A Homemade Beowulf Cluster: Part 1, Hardware Assembly and A Homemade Beowulf Cluster: Part 2, Machine Configuration.

Either way, you will have enough processing power that your skill, not your hardware, will be the limiting factor.
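For a rough sense of what the quoted figures imply, here is a back-of-the-envelope sketch. The 15-minute run time, the 3x speedup and the £1562.26 total come from the post. The hourly cloud rate is a purely hypothetical placeholder (the post does not give one), and the calculation ignores electricity and other running costs, so treat the break-even figure as illustrative only.

```python
# Figures taken from the quoted post
RIG_COST_GBP = 1562.26   # total cost of the GPU rig
LOCAL_RUN_MIN = 15       # run time on the GTX 1080
SPEEDUP = 3              # "3 times faster" than a g2.2xlarge

# Hypothetical placeholder, NOT from the post: substitute your real rate
ASSUMED_CLOUD_RATE_GBP_PER_HOUR = 0.50


def runs_per_day(minutes_per_run, hours=24):
    """Whole back-to-back runs that fit into the given number of hours."""
    return (hours * 60) // minutes_per_run


def break_even_hours(rig_cost, hourly_rate):
    """Cloud hours whose rental cost equals the rig's purchase price."""
    return rig_cost / hourly_rate


# Implied cloud run time: 3x slower than the local 15 minutes
cloud_run_min = LOCAL_RUN_MIN * SPEEDUP

local_runs = runs_per_day(LOCAL_RUN_MIN)   # 96 runs per day
cloud_runs = runs_per_day(cloud_run_min)   # 32 runs per day

hours_to_break_even = break_even_hours(
    RIG_COST_GBP, ASSUMED_CLOUD_RATE_GBP_PER_HOUR
)

print(f"Local runs per day: {local_runs}")
print(f"Cloud runs per day: {cloud_runs}")
print(f"Break-even at the assumed rate: {hours_to_break_even:.0f} cloud hours")
```

Swap in your own hourly rate and run time to see how quickly, if at all, a rig like this would pay for itself for your workload.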