Apache® Hadoop® v3.0.0 General Availability

From the post:

Ubiquitous Open Source enterprise framework maintains decade-long leading role in $100B annual Big Data market

The Apache Software Foundation (ASF), the all-volunteer developers, stewards, and incubators of more than 350 Open Source projects and initiatives, today announced Apache® Hadoop® v3.0.0, the latest version of the Open Source software framework for reliable, scalable, distributed computing.

Over the past decade, Apache Hadoop has become ubiquitous within the greater Big Data ecosystem by enabling firms to run and manage data applications on large hardware clusters in a distributed computing environment.

"This latest release unlocks several years of development from the Apache community," said Chris Douglas, Vice President of Apache Hadoop. "The platform continues to evolve with hardware trends and to accommodate new workloads beyond batch analytics, particularly real-time queries and long-running services. At the same time, our Open Source contributors have adapted Apache Hadoop to a wide range of deployment environments, including the Cloud."

"Hadoop 3 is a major milestone for the project, and our biggest release ever," said Andrew Wang, Apache Hadoop 3 release manager. "It represents the combined efforts of hundreds of contributors over the five years since Hadoop 2. I'm looking forward to how our users will benefit from new features in the release that improve the efficiency, scalability, and reliability of the platform."

Apache Hadoop 3.0.0 highlights include:

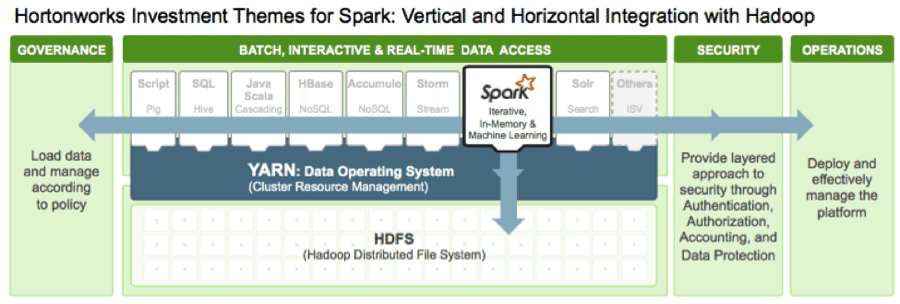

- HDFS erasure coding: halves the storage cost of HDFS while also improving data durability;

- YARN Timeline Service v.2 (preview): improves the scalability, reliability, and usability of the Timeline Service;

- YARN resource types: enables scheduling of additional resources, such as disks and GPUs, for better integration with machine learning and container workloads;

- Federation of YARN and HDFS subclusters transparently scales Hadoop to tens of thousands of machines;

- Opportunistic container execution improves resource utilization and increases task throughput for short-lived containers. In addition to its traditional, central scheduler, YARN also supports distributed scheduling of opportunistic containers; and

- Improved capabilities and performance improvements for cloud storage systems such as Amazon S3 (S3Guard), Microsoft Azure Data Lake, and Aliyun Object Storage System.

… (emphasis in original)
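
The "halves the storage cost" claim for erasure coding can be checked with a little arithmetic. The sketch below assumes the comparison is between HDFS's usual three-way replication and a Reed-Solomon layout of six data cells plus three parity cells; the quoted post does not spell out which schemes it has in mind, and the function name is purely illustrative.

```python
def storage_overhead(data_units: int, redundancy_units: int) -> float:
    """Return raw units stored per unit of user data."""
    return (data_units + redundancy_units) / data_units


# Assumption: three-way replication, i.e. one original block plus two extra copies.
replication_3x = storage_overhead(data_units=1, redundancy_units=2)

# Assumption: Reed-Solomon with six data cells and three parity cells.
rs_6_3 = storage_overhead(data_units=6, redundancy_units=3)

print(replication_3x)           # 3.0
print(rs_6_3)                   # 1.5
print(rs_6_3 / replication_3x)  # 0.5
```

Under those assumptions the erasure-coded layout stores half as many raw bytes, and it survives the loss of any three cells where three-way replication survives the loss of two copies, which is consistent with the durability claim.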

Ah, the Hadoop link.

Do you find it odd that use of the leader in the “$100B annual Big Data market” is documented by string comments in scripts and code?

Do you think non-technical management benefits from the documentation so captured?

Or that documentation for field names, routines, etc., can be easily extracted?

If software is maturing in a $100B market, shouldn’t it have mature documentation capabilities as well?