Americans want to be safer online – but not if they have to do anything by Bill Camarda.

From the post:

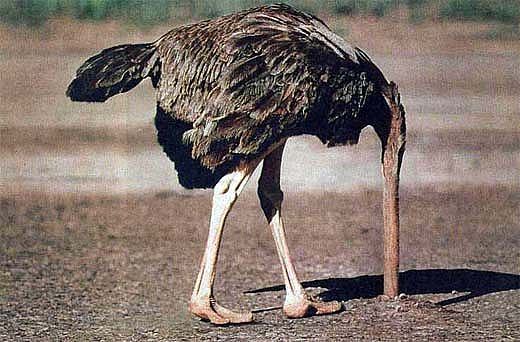

In the wake of non-stop news about identity theft, malware, ransomware, and all manner of information security catastrophes, Americans have educated themselves and are fully leveraging today’s powerful technologies to keep themselves safe… not.

While 67% told Morar Consulting they “would like extra layers of privacy,” far fewer use the technological tools now available to them. That’s the top-line finding of a brand-new survey of 2,000 consumers by Morar on behalf of the worldwide VPN provider “Hide My Ass!”

A key related finding: 63% of survey respondents have encountered online security issues. But, among the folks who’ve been bitten, just 56% have permanently changed their online behavior afterwards. (If you don’t learn the “hard way,” when do you learn?)

According to Morar, there’s still an odd disconnect between the way some people protect themselves offline and what they’re willing to do on the web. 51% of respondents would publicly post their email addresses, 26% their home addresses, and 21% their personal phone numbers.

…

Does this result surprise you?

If not:

How should we judge projects/solutions that presume conscious effort by users to:

- Encode data (think linked data and topic maps)

- Create maps between data sets

- Create data in formats not their own

- Use data vocabularies not their own

- Use software not their own

- Improve search results

- etc.

I mention “search results” as it is commonly admitted that search results are, at best, a pig’s breakfast. The amount of improvement possible over current search results is too large to even be guesstimated.

Rather than beat the dead horse of “…users ought to…” (yes, they should, but they don’t), it is better to ask “Now what?”

Why not try metrics?

Monitor user interactions with information, and test systems to see how well they anticipate users’ needs. Both are measurable categories.

Consider that back in the day, indexes never indexed everything. Magazine indexes omitted ads, for example. Ads could have been indexed, but indexing them didn’t offer enough return for the effort required.

Why not apply that model to modern information systems? Yes, we can create linked data or other representations for everything in every post, but if no one uses 90% of that encoding, we have spent a lot of money for very little gain.
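As a rough illustration of what measuring that might look like, here is a minimal sketch in Python. Everything in it is an assumption made for the example: the `usage_report` function, the idea that you have a set of encoded item IDs and a flat access log of item IDs, and the 90% coverage target.

```python
from collections import Counter


def usage_report(encoded_items, access_log, coverage=0.9):
    """Summarize how much of an encoding effort is actually used.

    encoded_items: set of item IDs we paid to encode (hypothetical).
    access_log:    iterable of item IDs, one per user access (hypothetical).
    coverage:      share of accesses the "core" items should account for.
    """
    # Count accesses, ignoring log entries for items we never encoded.
    counts = Counter(item for item in access_log if item in encoded_items)
    total = sum(counts.values())

    # Items we encoded that no one ever touched.
    unused = set(encoded_items) - set(counts)

    # Walk from most-used to least-used until the target share is reached.
    core = []
    running = 0
    for item, n in counts.most_common():
        if total and running / total >= coverage:
            break
        core.append(item)
        running += n

    return {
        "unused_fraction": len(unused) / len(encoded_items) if encoded_items else 0.0,
        "core_items": core,
    }


encoded = {"a", "b", "c", "d", "e"}
log = ["a"] * 8 + ["b"] + ["c"] + ["z"]  # "z" was never encoded
report = usage_report(encoded, log)
```

With this toy log, two of the five encoded items are never accessed, and two items account for 90% of the accesses. A real log would be messier, but the question it answers is the same one the magazine indexers were answering.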

Yes, that means we will be discriminating against less often cited authors, for example. And your point?

The preservation of Greek literature discriminated against authors whose work wasn’t important enough for someone to invest in preserving it.

Of course, we may not lose data in quite the same way, but if it can’t be found, isn’t that the same as being lost?

Let’s apply metrics to information retrieval and determine what return justifies the investment to make information easily available.
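One simple way to frame that decision, sketched in Python under loud assumptions: that you can estimate expected uses per item, and that you can put a single number on the value of each use and on the cost of encoding an item. The names `worth_encoding` and `partition` and all the figures are hypothetical.

```python
def worth_encoding(expected_uses, value_per_use, encoding_cost):
    """True when the estimated return covers the cost of encoding."""
    return expected_uses * value_per_use >= encoding_cost


def partition(items, value_per_use, encoding_cost):
    """Split items into those worth encoding and those left to search.

    items: dict mapping item name -> expected number of uses (an estimate).
    """
    encode, leave_to_search = [], []
    for name, uses in items.items():
        if worth_encoding(uses, value_per_use, encoding_cost):
            encode.append(name)
        else:
            leave_to_search.append(name)
    return encode, leave_to_search


# Made-up figures: each use is worth 0.5 units, encoding costs 20 units.
expected = {"popular": 500, "rare": 3}
encode, leave_to_search = partition(expected, value_per_use=0.5, encoding_cost=20)
```

The hard part is not the arithmetic but getting defensible estimates for the inputs, which is exactly where the monitoring comes in.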

Consign/condemn the rest of it to search.