Shining a light into the BBC Radio archives by Yves Raimond, Matt Hynes, and Rob Cooper.

From the post:

One of the biggest challenges for the BBC Archive is how to open up our enormous collection of radio programmes. As we’ve been broadcasting since 1922 we’ve got an archive of almost 100 years of audio recordings, representing a unique cultural and historical resource.

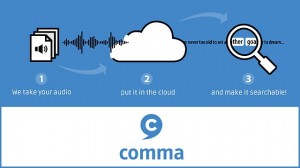

But the big problem is how to make it searchable. Many of the programmes have little or no meta-data, and the whole collection is far too large to process through human efforts alone.

Help is at hand. Over the last five years or so, technologies such as automated speech recognition, speaker identification and automated tagging have reached a level of accuracy where we can start to get impressive results for the right type of audio. By automatically analysing sound files and making informed decisions about the content and speakers, these tools can effectively help to fill in the missing gaps in our archive’s meta-data.

…

The Kiwi set of speech processing algorithms

COMMA is built on a set of speech processing algorithms called Kiwi. Back in 2011, BBC R&D were given access to a very large speech radio archive, the BBC World Service archive, which at the time had very little meta-data. In order to build our prototype around this archive we developed a number of speech processing algorithms, reusing open-source building blocks where possible. We then built the following workflow out of these algorithms:

- Speaker segmentation, identification and gender detection (using LIUM diarization toolkit, diarize-jruby and ruby-lsh). This process is also known as diarisation. Essentially an audio file is automatically divided into segments according to the identity of the speaker. The algorithm can show us who is speaking and at what point in the sound clip.

- Speech-to-text for the detected speech segments (using CMU Sphinx). At this point the spoken audio is translated as accurately as possible into readable text. This algorithm uses models built from a wide range of BBC data.

- Automated tagging with DBpedia identifiers. DBpedia is a large database holding structured data extracted from Wikipedia. The automatic tagging process creates the searchable meta-data that ultimately allows us to access the archives much more easily. This process uses a tool we developed called ‘Mango’.
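To make the shape of that three-step workflow concrete, here is a minimal sketch of how the stages might chain together. It is my own illustration, not the BBC's code: the `Segment` class, the `VOCAB` lookup table, and the `transcribe`, `tag` and `run_pipeline` functions are all hypothetical stand-ins, and the real system relies on LIUM, CMU Sphinx and Mango rather than the canned data used here.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """One diarised stretch of audio (hypothetical structure)."""
    speaker: str            # speaker label from diarisation, e.g. "S0"
    start: float            # start time in seconds
    end: float              # end time in seconds
    text: str = ""          # filled in by the speech-to-text step
    tags: list = field(default_factory=list)  # DBpedia URIs


# Toy vocabulary mapping words to DBpedia resource URIs (assumption:
# a real tagger would disambiguate against the full DBpedia dataset).
VOCAB = {
    "london": "http://dbpedia.org/resource/London",
    "radio": "http://dbpedia.org/resource/Radio",
}


def transcribe(segment, transcripts):
    """Stand-in for speech-to-text: look up a canned transcript by start time."""
    segment.text = transcripts.get(segment.start, "")
    return segment


def tag(segment, vocab=VOCAB):
    """Attach the DBpedia URI of every vocabulary word found in the text."""
    words = {w.strip(".,!?").lower() for w in segment.text.split()}
    segment.tags = sorted(vocab[w] for w in words if w in vocab)
    return segment


def run_pipeline(segments, transcripts):
    """Diarised segments -> transcripts -> tags, in that order."""
    return [tag(transcribe(s, transcripts)) for s in segments]


if __name__ == "__main__":
    # Pretend diarisation has already produced these two segments.
    segments = [Segment("S0", 0.0, 4.2), Segment("S1", 4.2, 9.0)]
    transcripts = {0.0: "This is London calling.", 4.2: "Good evening."}
    for seg in run_pipeline(segments, transcripts):
        print(seg.speaker, seg.start, seg.end, seg.tags)
```

The point of the sketch is only the ordering: tagging depends on the transcript, and the transcript depends on the diarised segments, so each segment ends up carrying its speaker, its text and its searchable identifiers.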

…

COMMA is due to launch some time in April 2015. If you’d like to be kept informed of our progress you can sign up for occasional email updates here. We’re also looking for early adopters to test the platform, so please contact us if you’re a cultural institution, media company or business that has a large audio data-set you want to make searchable.

This article was written by Yves Raimond (lead engineer, BBC R&D), Matt Hynes (senior software engineer, BBC R&D) and Rob Cooper (development producer, BBC R&D).

I don’t have a large audio data-set but I am certainly going to be following this project. The results should be useful in and of themselves, to say nothing of being a good starting point for further tagging. I wonder if the BBC Sanskrit broadcasts are going to be available? I will have to check on that.

Without diminishing the achievements of other institutions, the efforts of the BBC, the British Library, and the British Museum are truly remarkable.

I first saw this in a tweet by Mike Jones.