Are Deep Policy Gradient Algorithms Truly Policy Gradient Algorithms? by Andrew Ilyas, et al.

Abstract:

We study how the behavior of deep policy gradient algorithms reflects the conceptual framework motivating their development. We propose a fine-grained analysis of state-of-the-art methods based on key aspects of this framework: gradient estimation, value prediction, optimization landscapes, and trust region enforcement. We find that from this perspective, the behavior of deep policy gradient algorithms often deviates from what their motivating framework would predict. Our analysis suggests first steps towards solidifying the foundations of these algorithms, and in particular indicates that we may need to move beyond the current benchmark-centric evaluation methodology.

Although the paper is written as an evaluation of the framework behind deep policy gradient algorithms, with suggestions for improvement, it isn’t hard to see how the same factors create hiding places for bias in deep learning algorithms.

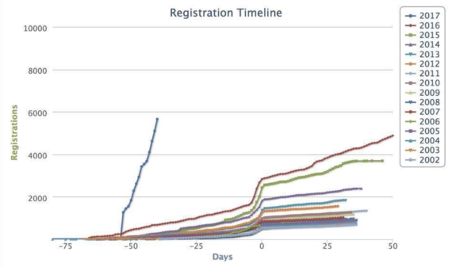

- Gradient Estimation: we find that even while agents are improving in terms of reward, the gradient estimates used to update their parameters are often virtually uncorrelated with the true gradient.

- Value Prediction: our experiments indicate that value networks successfully solve the supervised learning task they are trained on, but do not fit the true value function. Additionally, employing a value network as a baseline function only marginally decreases the variance of gradient estimates (but dramatically increases agent’s performance).

- Optimization Landscapes: we also observe that the optimization landscape induced by modern policy gradient algorithms is often not reflective of the underlying true reward landscape, and that the latter is often poorly behaved in the relevant sample regime.

- Trust Regions: our findings show that deep policy gradient algorithms sometimes violate theoretically motivated trust regions. In fact, in proximal policy optimization, these violations stem from a fundamental problem in the algorithm’s design.
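To make the first finding concrete, here is a minimal sketch of what "correlation with the true gradient" can mean. It is not the authors' experimental setup: it assumes a toy 10-armed bandit with a softmax policy, where the exact gradient is available in closed form, and compares it with a sampled score-function (REINFORCE-style) estimate using cosine similarity. All names (`true_gradient`, `estimated_gradient`, `mean_cosine`) and the noise level are illustrative assumptions.

```python
import numpy as np

def softmax(theta):
    z = np.exp(theta - theta.max())
    return z / z.sum()

def true_gradient(theta, mean_rewards):
    # Exact gradient of expected reward for a softmax bandit policy:
    # d/d theta_i sum_a pi_a r_a = pi_i * (r_i - sum_a pi_a r_a)
    pi = softmax(theta)
    return pi * (mean_rewards - pi @ mean_rewards)

def estimated_gradient(theta, mean_rewards, n_samples, noise_std, rng):
    # Score-function estimate averaged over n_samples sampled actions.
    pi = softmax(theta)
    actions = rng.choice(len(theta), size=n_samples, p=pi)
    rewards = mean_rewards[actions] + noise_std * rng.standard_normal(n_samples)
    # For a softmax policy, grad log pi(a) = onehot(a) - pi.
    score = np.eye(len(theta))[actions] - pi
    return (score * rewards[:, None]).mean(axis=0)

def cosine_similarity(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v) + 1e-12))

def mean_cosine(n_samples, trials=200, noise_std=5.0, seed=0):
    # Average cosine similarity between estimate and exact gradient
    # over repeated trials (toy setting, assumed parameters).
    rng = np.random.default_rng(seed)
    theta = np.zeros(10)
    mean_rewards = np.linspace(0.0, 1.0, 10)
    g = true_gradient(theta, mean_rewards)
    sims = [
        cosine_similarity(
            estimated_gradient(theta, mean_rewards, n_samples, noise_std, rng), g
        )
        for _ in range(trials)
    ]
    return float(np.mean(sims))

if __name__ == "__main__":
    for n in (5, 100, 10_000):
        print(n, round(mean_cosine(n), 3))
```

With noisy rewards and few samples the estimate points in an almost arbitrary direction, and the similarity rises only as the sample count grows. The paper's claim concerns deep agents on real benchmarks, where the "true" gradient must itself be approximated with very many samples; this toy only illustrates the measurement.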

The key take-away is that if you can’t explain the behavior of an algorithm, then how do you detect or guard against bias in such an algorithm? Or as the authors put it:

Deep reinforcement learning (RL) algorithms are rooted in a well-grounded framework of classical RL, and have shown great promise in practice. However, as our investigations uncover, this framework fails to explain much of the behavior of these algorithms. This disconnect impedes our understanding of why these algorithms succeed (or fail). It also poses a major barrier to addressing key challenges facing deep RL, such as widespread brittleness and poor reproducibility (cf. Section 4 and [3, 4]).

Do you plan on offering ignorance about your algorithms as a defense for discrimination?

Interesting.