Most HR Data Is Bad Data by Marcus Buckingham.

“Bad data” can come in any number of forms, and Marcus Buckingham focuses on one of the most pernicious: data that is flawed at its inception. Data that doesn’t measure what it purports to measure. Performance evaluation data.

From the post:

Over the last fifteen years a significant body of research has demonstrated that each of us is a disturbingly unreliable rater of other people’s performance. The effect that ruins our ability to rate others has a name: the Idiosyncratic Rater Effect, which tells us that my rating of you on a quality such as “potential” is driven not by who you are, but instead by my own idiosyncrasies—how I define “potential,” how much of it I think I have, how tough a rater I usually am. This effect is resilient — no amount of training seems able to lessen it. And it is large — on average, 61% of my rating of you is a reflection of me.

In other words, when I rate you, on anything, my rating reveals to the world far more about me than it does about you. In the world of psychometrics this effect has been well documented. The first large study was published in 1998 in Personnel Psychology; there was a second study published in the Journal of Applied Psychology in 2000; and a third confirmatory analysis appeared in 2010, again in Personnel Psychology. In each of the separate studies, the approach was the same: first ask peers, direct reports, and bosses to rate managers on a number of different performance competencies; and then examine the ratings (more than half a million of them across the three studies) to see what explained why the managers received the ratings they did. They found that more than half of the variation in a manager’s ratings could be explained by the unique rating patterns of the individual doing the rating— in the first study it was 71%, the second 58%, the third 55%.
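To make the "share of variation explained by the rater" idea concrete, here is a toy sketch. It is not the method used in the cited studies (their abstracts describe confirmatory factor analysis on far larger samples). It assumes a small, made-up table in which every rater scores every person, and it splits the total variation into a rater part, a ratee part, and a leftover. The names `ratings` and `variance_shares` are hypothetical.

```python
from statistics import mean

# Hypothetical data: each row is one rater, each column is one person rated.
ratings = {
    "rater_a": [4, 5, 4],
    "rater_b": [2, 3, 2],
    "rater_c": [3, 4, 3],
}

def variance_shares(table):
    """Split total variation into rater, ratee, and residual shares
    using a plain two-way sum-of-squares breakdown (no replication)."""
    rows = list(table.values())
    n_raters, n_ratees = len(rows), len(rows[0])
    grand = mean([v for row in rows for v in row])
    rater_means = [mean(row) for row in rows]
    ratee_means = [mean(col) for col in zip(*rows)]

    ss_total = sum((v - grand) ** 2 for row in rows for v in row)
    ss_rater = n_ratees * sum((m - grand) ** 2 for m in rater_means)
    ss_ratee = n_raters * sum((m - grand) ** 2 for m in ratee_means)
    ss_resid = ss_total - ss_rater - ss_ratee

    return {
        "rater": ss_rater / ss_total,
        "ratee": ss_ratee / ss_total,
        "residual": ss_resid / ss_total,
    }

shares = variance_shares(ratings)
print(shares)
```

In this invented table the raters differ from one another more than the people being rated do, so the rater share comes out larger than the ratee share. Real 360-degree data is not fully crossed like this, which is one reason the studies rely on factor models instead.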

You have to follow the Idiosyncratic Rater Effect link to find the references Buckingham cites, so I have repeated them (with links and abstracts) below:

Trait, Rater and Level Effects in 360-Degree Performance Ratings by Michael K. Mount, et al., Personnel Psychology, 1998, 51, 557-576.

Abstract:

Method and trait effects in multitrait-multirater (MTMR) data were examined in a sample of 2,350 managers who participated in a developmental feedback program. Managers rated their own performance and were also rated by two subordinates, two peers, and two bosses. The primary purpose of the study was to determine whether method effects are associated with the level of the rater (boss, peer, subordinate, self) or with each individual rater, or both. Previous research which has tacitly assumed that method effects are associated with the level of the rater has included only one rater from each level; consequently, method effects due to the rater’s level may have been confounded with those due to the individual rater. Based on confirmatory factor analysis, the present results revealed that of the five models tested, the best fit was the 10-factor model which hypothesized 7 method factors (one for each individual rater) and 3 trait factors. These results suggest that method variance in MTMR data is more strongly associated with individual raters than with the rater’s level. Implications for research and practice pertaining to multirater feedback programs are discussed.

Understanding the Latent Structure of Job Performance Ratings, by Michael K. Mount, Steven E. Scullen, Maynard Goff, Journal of Applied Psychology, 2000, Vol. 85, No. 6, 956-970. (I looked, but apparently the APA hasn’t gotten the word about access to abstracts online, etc.)

Rater Source Effects are Alive and Well After All by Brian Hoffman, et al., Personnel Psychology, 2010, 63, 119-151.

Abstract:

Recent research has questioned the importance of rater perspective effects on multisource performance ratings (MSPRs). Although making a valuable contribution, we hypothesize that this research has obscured evidence for systematic rater source effects as a result of misspecified models of the structure of multisource performance ratings and inappropriate analytic methods. Accordingly, this study provides a reexamination of the impact of rater source on multisource performance ratings by presenting a set of confirmatory factor analyses of two large samples of multisource performance rating data in which source effects are modeled in the form of second-order factors. Hierarchical confirmatory factor analysis of both samples revealed that the structure of multisource performance ratings can be characterized by general performance, dimensional performance, idiosyncratic rater, and source factors, and that source factors explain (much) more variance in multisource performance ratings whereas general performance explains (much) less variance than was previously believed. These results reinforce the value of collecting performance data from raters occupying different organizational levels and have important implications for research and practice.

For students: Can you think of other sources that validate the Idiosyncratic Rater Effect?

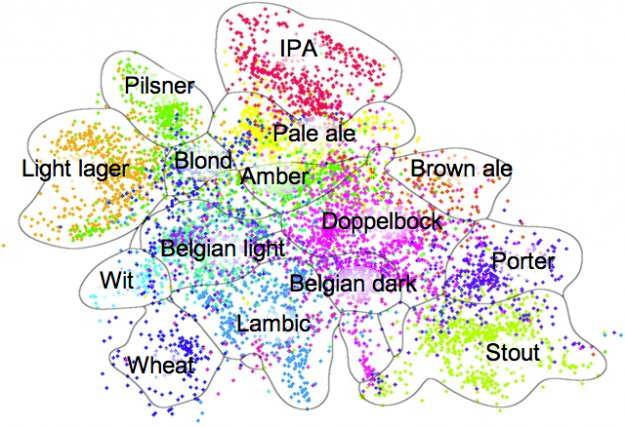

What about algorithms that make recommendations based on user ratings of movies? Isn’t the premise of recommendations that the ratings tell us more about the rater than about the movie, so that we can make the “right” recommendation for a person very similar to the rater?
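A minimal sketch of that premise, assuming a tiny made-up ratings table and a plain user-to-user cosine similarity (one common choice among many, not any particular product's algorithm). The names `ratings`, `cosine`, and `predict` are hypothetical. A missing rating is predicted from other raters, weighted by how closely their past ratings track the target user's.

```python
from math import sqrt

# Hypothetical ratings on a 1-5 scale; "ann" has not rated "Dune".
ratings = {
    "ann": {"Alien": 5, "Brazil": 4, "Casablanca": 1},
    "bob": {"Alien": 5, "Brazil": 5, "Casablanca": 1, "Dune": 5},
    "cat": {"Alien": 1, "Brazil": 2, "Casablanca": 5, "Dune": 1},
}

def cosine(u, v):
    """Cosine similarity over the movies both users have rated."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[m] * v[m] for m in shared)
    norm_u = sqrt(sum(u[m] ** 2 for m in shared))
    norm_v = sqrt(sum(v[m] ** 2 for m in shared))
    return dot / (norm_u * norm_v)

def predict(user, movie, table):
    """Similarity-weighted average of other users' ratings for the movie."""
    weighted_sum = 0.0
    weight_total = 0.0
    for other, theirs in table.items():
        if other == user or movie not in theirs:
            continue
        weight = cosine(table[user], theirs)
        weighted_sum += weight * theirs[movie]
        weight_total += weight
    return weighted_sum / weight_total if weight_total else None

print(cosine(ratings["ann"], ratings["bob"]))
print(cosine(ratings["ann"], ratings["cat"]))
print(predict("ann", "Dune", ratings))
```

Here "ann" rates much like "bob" and unlike "cat", so the prediction for "Dune" leans toward bob's high score. The ratings are treated as information about the raters' tastes, which is exactly what makes them useful for this purpose.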

I don’t know that it means anything, but a search with a popular search engine turns up only 258 “hits” for “Idiosyncratic Rater Effect.” On the other hand, “recommendation system” turns up 424,000 “hits,” and even that sounds low to me considering the size of the literature on recommendation.

The bottom line on data quality is that widespread use of data is no guarantee of its quality.

That ratings reflect the rater more than the thing rated is useful in one context (recommendation) and pernicious in another (employment ratings).

I first saw this in a tweet by Neil Saunders.