LuxRender – Physically Based Renderer.

From the webpage:

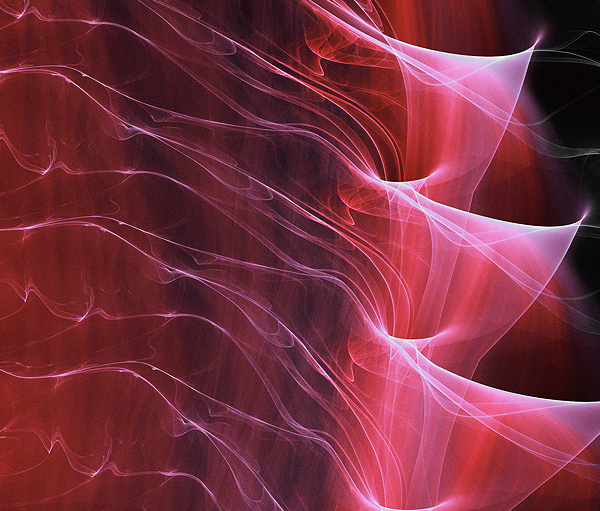

LuxRender is a physically based and unbiased rendering engine. Based on state of the art algorithms, LuxRender simulates the flow of light according to physical equations, thus producing realistic images of photographic quality.

LuxRender is now a member project of the Software Freedom Conservancy which provides administrative and financial support to FOSS projects. This allows us to receive donations, which can be tax deductible in the US.

…

Physically based spectral rendering

LuxRender is built on physically based equations that model the transportation of light. This allows it to accurately capture a wide range of phenomena which most other rendering programs are simply unable to reproduce. This also means that it fully supports high-dynamic range (HDR) rendering.

Materials

LuxRender features a variety of material types. Apart from generic materials such as matte and glossy, physically accurate representations of metal, glass, and car paint are present. Complex properties such as absorption, dispersive refraction and thin film coating are available.

Fleximage (virtual film)

The virtual film allows you to pause and continue a rendering at any time. The current state of the rendering can even be written to a file, so that the computer (or even another computer) can continue rendering at a later moment.

Free for everyone

LuxRender is and will always be free software, both for private and commercial use. It is being developed by people with a passion for programming and for computer graphics who like sharing their work. We encourage you to download LuxRender and use it to express your artistic ideas.

This is too advanced for my graphics skills, but I thought some of you might find it useful for populating your topic maps with high-end visualizations.

I first saw this in a tweet by David Bucciarelli that announced the LuxRender v1.5RC1 release.