Don’t Delete Evil Data by Lam Thuy Vo.

From the post:

The web needs to be a friendlier place. It needs to be more truthful, less fake. It definitely needs to be less hateful. Most people agree with these notions.

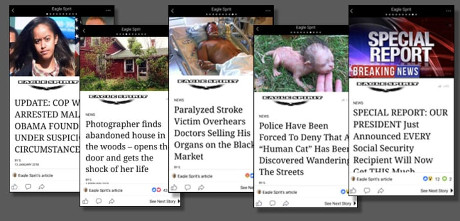

There have been a number of efforts recently to enforce this idea: the Facebook groups and pages operated by Russian actors during the 2016 election have been deleted. None of the Twitter accounts listed in connection to the investigation of the Russian interference with the last presidential election are online anymore. Reddit announced late last fall that it was banning Nazi, white supremacist, and other hate groups.

But even though much harm has been done on these platforms, is the right course of action to erase all these interactions without a trace? So much of what constitutes our information universe is captured online—if foreign actors are manipulating political information we receive and if trolls turn our online existence into hell, there is a case to be made for us to be able to trace back malicious information to its source, rather than simply removing it from public view.

In other words, there is a case to be made to preserve some of this information, to archive it, structure it, and make it accessible to the public. It’s unreasonable to expect social media companies to sidestep consumer privacy protections and to release data attached to online misconduct willy-nilly. But to stop abuse, we need to understand it. We should consider archiving malicious content and related data in responsible ways that allow for researchers, sociologists, and journalists to understand its mechanisms better and, potentially, to demand more accountability from trolls whose actions may forever be deleted without a trace.

…

I would support preserving all social media, by some mechanism yet to be specified, and keeping it publicly available if it was publicly posted originally. Any restriction on seeing or using the data, or any permission required to do so, will lead to the same abuses we see now.

Twitter, among others, talks about abuse, but without access to the data no one can prove or disprove whatever Twitter cares to say.

There is a downside to preserving social media. You have probably seen the NBC News story on 200,000 tweets that are the smoking gun on Russian interference with the 2016 elections.

Well, except that if you look at the tweets, they are about as far from a smoking gun on Russian interference as anything you can imagine.

By analogy, that’s why intelligence analysts always say they have evidence and give you their conclusions, but not the evidence. There is too much danger that you will discover their report is completely fictional.

Or, when not wholly fictional, that it serves their own or their agency’s interest.

Keeping evidence is risky business. Just so you are aware.