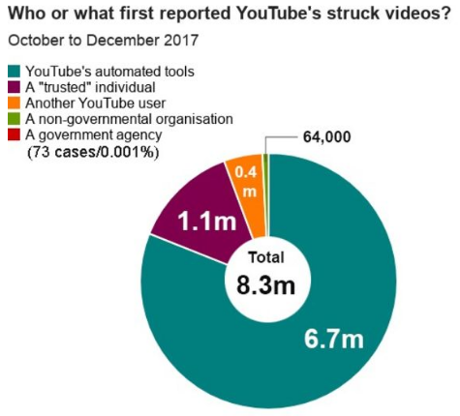

US and Western government propaganda has been plentiful for decades, but Caitlin Johnstone uncovers why a prominent think tank is calling for even more of it.

Atlantic Council Explains Why We Need To Be Propagandized For Our Own Good

From the post:

I sometimes try to get establishment loyalists to explain to me exactly why we’re all meant to be terrified of this “Russian propaganda” thing they keep carrying on about. What is the threat, specifically? That it makes the public less willing to go to war with Russia and its allies? That it makes us less trusting of lying, torturing, coup-staging intelligence agencies? Does accidentally catching a glimpse of that green RT logo turn you to stone like Medusa, or melt your face like in Raiders of the Lost Ark?

“Well, it makes us lose trust in our institutions,” is the most common reply.

Okay. So? Where’s the threat there? We know for a fact that we’ve been lied to by those institutions. Iraq isn’t just something we imagined. We should be skeptical of claims made by western governments, intelligence agencies and mass media. How specifically is that skepticism dangerous?

…

A great read as always but I depart from Johnstone when she concludes:

…

If our dear leaders are so worried about our losing faith in our institutions, they shouldn’t be concerning themselves with manipulating us into trusting them, they should be making those institutions more trustworthy. Don’t manipulate better, be better. The fact that an influential think tank is now openly advocating the former over the latter should concern us all.

I tweeted to George Lakoff quite recently, asking for more explicit treatment of how to use persuasion techniques.

Being able to recognize persuasion used against you, in propaganda for example, is good. Being able to construct such techniques for use in propaganda against others is great! Sadly, no response from Lakoff. Perhaps he was busy.

The “other side,” your pick, isn’t going to stop using propaganda. Hoping, wishing, and praying that they will are exercises in being ineffectual.

If you seek to counter decades of finely honed, war-mongering, exploitative Western narrative, be prepared to use propaganda and to use it well.