From the webpage:

This is an open source benchmark which compares the performance of several large scale data-processing frameworks.

Introduction

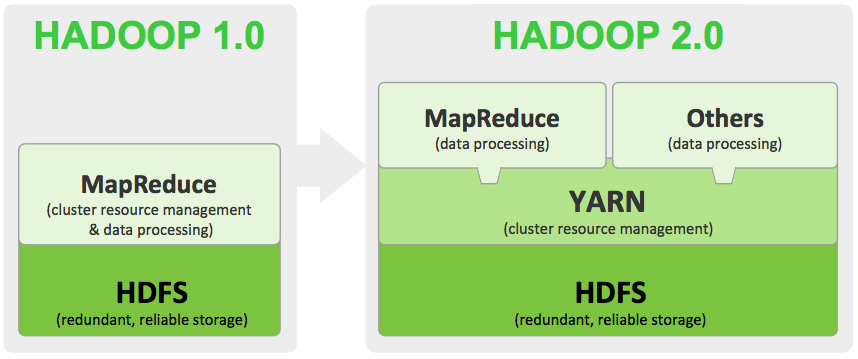

Several analytic frameworks have been announced in the last six months. Among them are inexpensive data-warehousing solutions based on traditional Massively Parallel Processor (MPP) architectures (Redshift), systems which impose MPP-like execution engines on top of Hadoop (Impala, HAWQ) and systems which optimize MapReduce to improve performance on analytical workloads (Shark, Stinger). This benchmark provides quantitative and qualitative comparisons of four systems. It is entirely hosted on EC2 and can be reproduced directly from your computer.

- Redshift – a hosted MPP database offered by Amazon.com based on the ParAccel data warehouse.

- Hive – a Hadoop-based data warehousing system. (v0.10, 1/2013. Note: Hive v0.11, which advertises improved performance, was recently released but is not yet included.)

- Shark – a Hive-compatible SQL engine which runs on top of the Spark computing framework. (v0.8 preview, 5/2013)

- Impala – a Hive-compatible* SQL engine with its own MPP-like execution engine. (v1.0, 4/2013)

This remains a work in progress and will evolve to include additional frameworks and new capabilities. We welcome contributions.

What is being evaluated?

This benchmark measures response time on a handful of relational queries: scans, aggregations, joins, and UDFs, across different data sizes. Keep in mind that these systems have very different sets of capabilities. MapReduce-like systems (Shark/Hive) target flexible and large-scale computation, supporting complex User Defined Functions (UDFs), tolerating failures, and scaling to thousands of nodes. Traditional MPP databases are strictly SQL compliant and heavily optimized for relational queries. The workload here is simply one set of queries that most of these systems can complete.
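To make the four query shapes (scan, aggregation, join, UDF) concrete, here is a minimal sketch of timing them, using Python's built-in sqlite3 as a single-node stand-in. Everything in it is a hypothetical illustration: the `rankings`/`uservisits` tables, the synthetic rows, the `url_host` UDF and the SQL text are my assumptions, not the benchmark's actual harness, dataset or queries, and sqlite is not one of the systems being compared.

```python
import sqlite3
import time


def page_url(j):
    # Hypothetical URL scheme shared by both tables so the join has matches.
    return f"http://site{j % 50}.example/page{j}"


def build_db(n_pages=1000, n_visits=5000):
    """Build an in-memory database with two small synthetic tables.

    The schema is a hypothetical stand-in, not the benchmark's dataset.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE rankings (pageURL TEXT, pageRank INTEGER)")
    conn.execute(
        "CREATE TABLE uservisits (sourceIP TEXT, destURL TEXT, adRevenue REAL)"
    )
    conn.executemany(
        "INSERT INTO rankings VALUES (?, ?)",
        [(page_url(i), i % 100) for i in range(n_pages)],
    )
    conn.executemany(
        "INSERT INTO uservisits VALUES (?, ?, ?)",
        [
            (f"10.0.{i % 7}.{i % 251}", page_url((i * 7) % n_pages), (i % 13) * 0.5)
            for i in range(n_visits)
        ],
    )
    # Register a Python function as a SQL UDF: extracts the host from a URL.
    conn.create_function("url_host", 1, lambda url: url.split("/")[2])
    return conn


# One hypothetical query per workload category named in the text.
QUERIES = {
    "scan": "SELECT pageURL, pageRank FROM rankings WHERE pageRank > 90",
    "aggregation": (
        "SELECT SUBSTR(sourceIP, 1, 6), SUM(adRevenue) "
        "FROM uservisits GROUP BY SUBSTR(sourceIP, 1, 6)"
    ),
    "join": (
        "SELECT uv.sourceIP, SUM(uv.adRevenue), AVG(r.pageRank) "
        "FROM uservisits uv JOIN rankings r ON r.pageURL = uv.destURL "
        "GROUP BY uv.sourceIP"
    ),
    "udf": (
        "SELECT url_host(destURL), COUNT(*) "
        "FROM uservisits GROUP BY url_host(destURL)"
    ),
}


def time_queries(conn, queries, repeats=3):
    """Return {name: (row_count, best_seconds)} over several repeats."""
    results = {}
    for name, sql in queries.items():
        best = float("inf")
        rows = []
        for _ in range(repeats):
            start = time.perf_counter()
            rows = conn.execute(sql).fetchall()  # materialize the full result
            best = min(best, time.perf_counter() - start)
        results[name] = (len(rows), best)
    return results


if __name__ == "__main__":
    conn = build_db()
    for name, (n_rows, secs) in time_queries(conn, QUERIES).items():
        print(f"{name:12s} {n_rows:6d} rows {secs * 1000:8.2f} ms")
```

Taking the best of several repeats and fetching every row are choices made here to reduce noise and to count the cost of producing the whole result; a real cross-system comparison would also have to settle questions like cold versus warm caches and data size, which this sketch ignores.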

Benchmarks were mentioned in a discussion at the XTM group on LinkedIn.

Not sure these would be directly applicable, but they should prove to be useful background material.

I first saw this at Danny Bickson’s Shark @ SIGMOD workshop.

Danny points to Reynold Xin’s Shark talk at SIGMOD GRADES workshop. General overview but worth your time.

Danny also points out that Reynold Xin will be presenting on GraphX at the GraphLab workshop Monday July 1st in SF.

I can’t imagine why that came to mind.