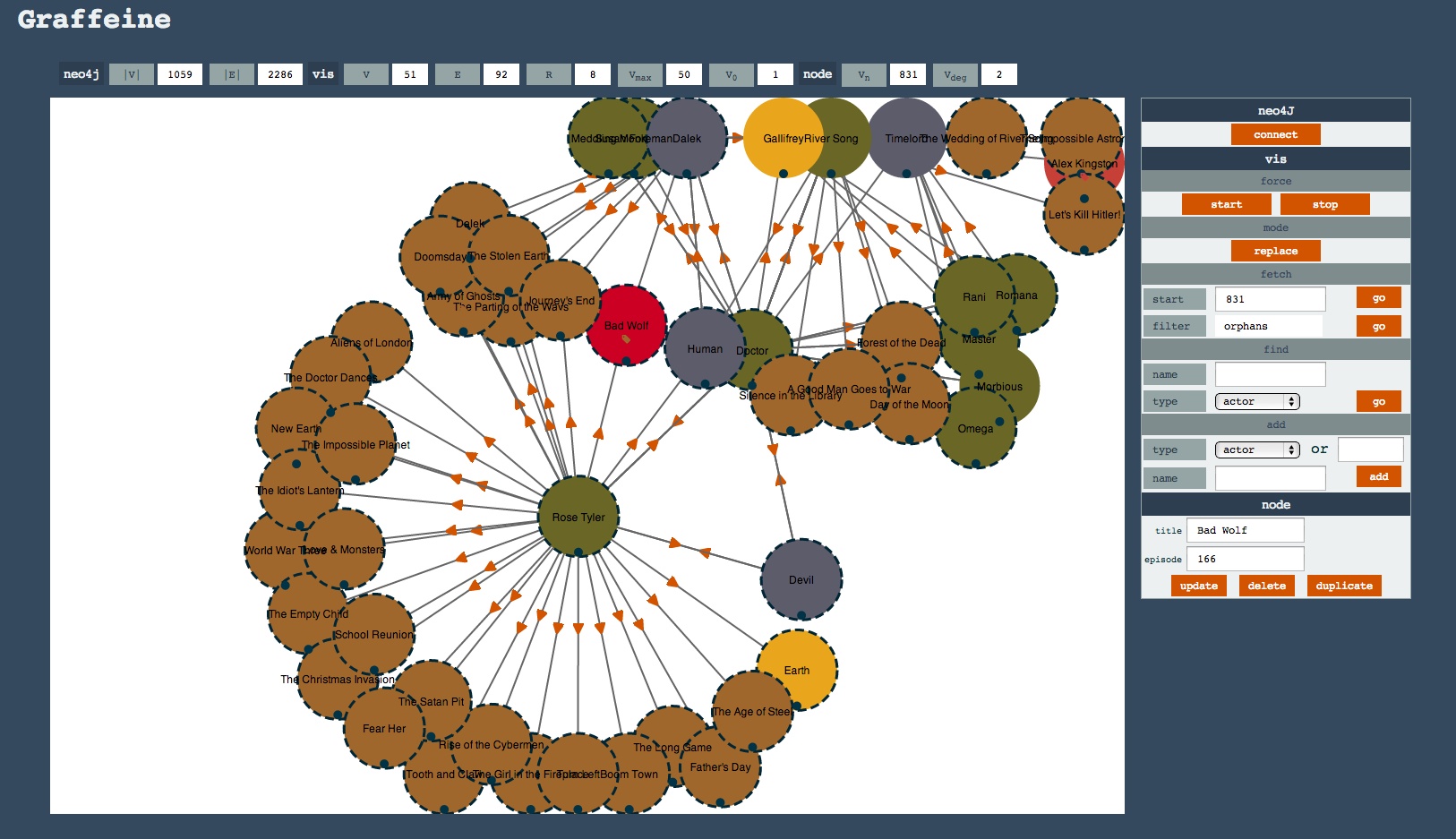

The Social Graph of the Los Alamos National Laboratory by Marko A. Rodriguez.

From the post:

The web is composed of numerous web sites tailored to meet the information, consumption, and social needs of its users. Within many of these sites, references are made to the same platonic “thing” though different facets of the thing are expressed. For example, in the movie industry, there is a movie called John Carter by Disney. While the movie is an abstract concept, it has numerous identities on the web (which are technically referenced by a URI).

…

Aurelius collaborated with the Digital Library Research and Prototyping Group of the Los Alamos National Laboratory (LANL) to develop EgoSystem atop the distributed graph database Titan. The purpose of this system is best described by the introductory paragraph of the April 2014 publication on EgoSystem.

I heartily commend Marko’s post and the EgoSystem publication to your reading, despite my cautions concerning some of the theoretical aspects of the project.

Statements like:

references are made to the same platonic “thing” though different facets of the thing are expressed.

have always troubled me. In part because it involves a claim, usually by the speaker, to have freed themselves from Plato’s cave such that they and they alone can see things aright. Which consigns the rest of us to be the pitiful lot still confined to the cave.

Which of course leads to Marko’s:

There are two categories of vertices in EgoSystem.

- Platonic: Denotes an abstract concept devoid of interpretation.

- Identity: Denotes a particular interpretation of a platonic.

Every platonic vertex is of a particular type: a person, institution, artifact, or concept. Next, every platonic has one or more identities as referenced by a URL on the web. The platonic types and the location of their web identities are itemized below. As of EgoSystem 1.0, these are the only sources from which data is aggregated, though extending it to support more services (e.g. Facebook, Quorum, etc.) is feasible given the system’s modular architecture.

A structure where English labels, remarkably enough, are placed on “Platonic” vertices. Not that we would attribute any identity or semantics to a “Platonic” vertex. 😉
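To make the quoted structure concrete, here is a minimal sketch of the two vertex categories. It is not EgoSystem's schema or Titan's API; the class names, the `kind` field, and the example URLs are all my own assumptions for illustration. Note that the "Platonic" vertex cannot even be constructed without a label, which is already an interpretation.

```python
from dataclasses import dataclass, field
from typing import List

# The four platonic types named in the quoted passage.
PLATONIC_TYPES = {"person", "institution", "artifact", "concept"}


@dataclass(frozen=True)
class Identity:
    """A particular interpretation of a platonic, referenced by a URL."""
    url: str


@dataclass
class Platonic:
    """Hypothetical stand-in for a 'Platonic' vertex."""
    label: str   # an English label: already an interpretation
    kind: str    # one of PLATONIC_TYPES
    identities: List[Identity] = field(default_factory=list)

    def __post_init__(self):
        if self.kind not in PLATONIC_TYPES:
            raise ValueError(f"unknown platonic type: {self.kind!r}")


# Placeholder URLs, not real identities of the movie.
movie = Platonic(
    label="John Carter",
    kind="artifact",
    identities=[
        Identity("https://example.org/film/john-carter"),
        Identity("https://example.net/reviews/john-carter"),
    ],
)
```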

Rather than “Platonic” vertices, they are better described as boundary vertices. That is, they circumscribe what can be represented in a particular graph, without making claims on a “higher” reality.

I say that not to be pedantic but to illustrate how a “Platonic” vertex prevents us from meaningful merger with graphs with differing “Platonic” vertices.

No doubt Shiva’s[1] other residence, Arzamas-16, could benefit from a similar “alumni” graph but I rather doubt it is going to use English labels for its “Platonic” vertices which:

Denote[…] an abstract concept devoid of interpretation.

If I have no “interpretation,” which I take to mean no properties (key/value pairs), how will I combine social graphs from Los Alamos and Arzamas-16?

I could cheat and secretly look up properties for the alleged “Platonic” nodes and combine them together but then how would you check my work? The end result would be opaque to anyone other than myself.
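By way of contrast, here is a rough sketch of a merge that can be checked. It assumes each vertex is nothing more than a map of key/value properties and that two vertices are merged only when they share a value for a key named up front. The function `merge_on`, the `homepage` key, and the sample data are hypothetical, invented for this illustration rather than taken from either laboratory.

```python
from collections import defaultdict


def merge_on(key, *graphs):
    """Merge vertices (dicts of hashable values) that share a value for `key`.

    Returns (merged, audit, unmatched):
      merged    - maps each shared value to the union of recorded properties
      audit     - the (key, value) pairs that justified each merge
      unmatched - vertices with no value for `key`, left alone
    """
    merged, audit, unmatched = {}, [], []
    for graph in graphs:
        for vertex in graph:
            value = vertex.get(key)
            if value is None:
                unmatched.append(vertex)  # nothing recorded to merge on
                continue
            if value in merged:
                audit.append((key, value))  # record why the merge happened
            target = merged.setdefault(value, defaultdict(set))
            for k, v in vertex.items():
                target[k].add(v)  # keep every label, English or otherwise
    return {k: dict(v) for k, v in merged.items()}, audit, unmatched


# Invented sample data for two graphs.
los_alamos = [
    {"homepage": "https://example.org/~ar", "name": "A. Researcher"},
    {"name": "Bare label"},  # a label and nothing else
]
arzamas = [
    {"homepage": "https://example.org/~ar", "name": "А. Исследователь"},
]

merged, audit, unmatched = merge_on("homepage", los_alamos, arzamas)
```

The `audit` list is the point: anyone can see which property justified each merge, and the vertex that carries only a label stays in `unmatched` because it offers nothing to merge on.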

That isn’t a criticism of using the EgoSystem. I am sure it meets the needs of Los Alamos quite nicely.

However, treating vertices as “Platonic” can prevent us from capturing the information necessary to expand the boundary of our graph at some future date or to merge it with other graphs.

From a philosophical standpoint, we should not claim access to Platonic ideals when we are actually recording our views of shadows on the cave wall. Of which, intersections between graphs/shadows are just a subset.

1. Those of you old enough to remember Robert Oppenheimer will recognize the reference.