Teaching Deep Convolutional Neural Networks to Play Go by Christopher Clark and Amos Storkey.

Abstract:

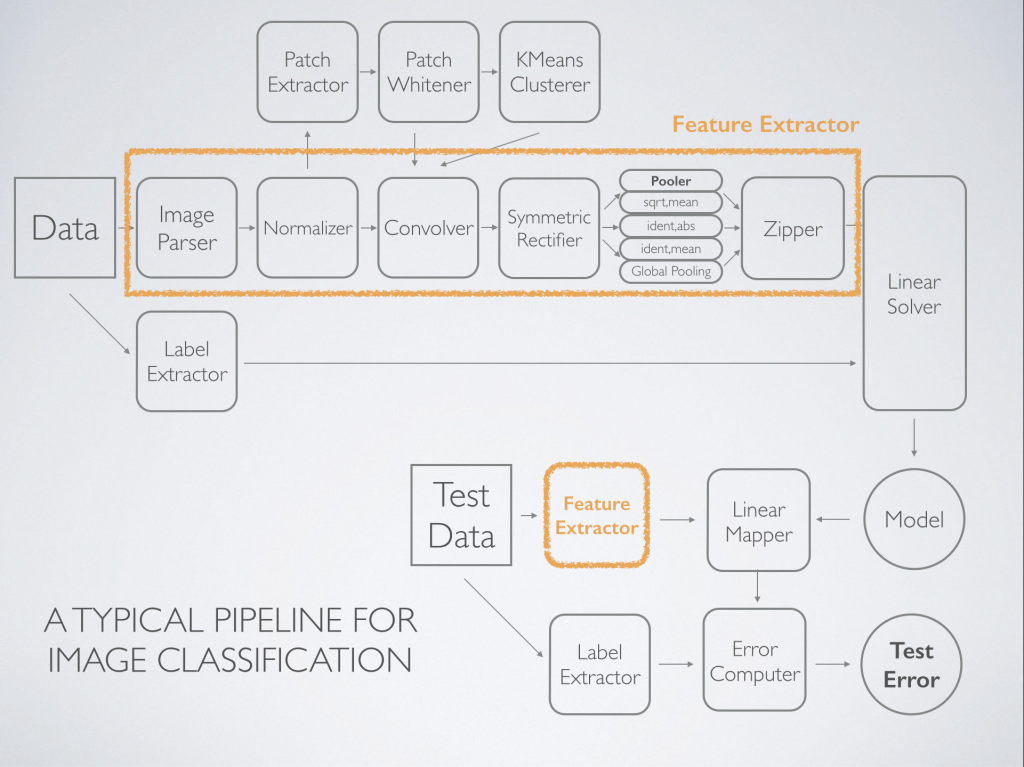

Mastering the game of Go has remained a long standing challenge to the field of AI. Modern computer Go systems rely on processing millions of possible future positions to play well, but intuitively a stronger and more ‘humanlike’ way to play the game would be to rely on pattern recognition abilities rather than brute force computation. Following this sentiment, we train deep convolutional neural networks to play Go by training them to predict the moves made by expert Go players. To solve this problem we introduce a number of novel techniques, including a method of tying weights in the network to ‘hard code’ symmetries that are expected to exist in the target function, and demonstrate in an ablation study they considerably improve performance. Our final networks are able to achieve move prediction accuracies of 41.1% and 44.4% on two different Go datasets, surpassing previous state of the art on this task by significant margins. Additionally, while previous move prediction programs have not yielded strong Go playing programs, we show that the networks trained in this work acquired high levels of skill. Our convolutional neural networks can consistently defeat the well known Go program GNU Go, indicating it is state of the art among programs that do not use Monte Carlo Tree Search. It is also able to win some games against state of the art Go playing program Fuego while using a fraction of the play time. This success at playing Go indicates high level principles of the game were learned.
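The weight-tying idea mentioned in the abstract can be pictured with a small sketch. This is only an illustration under stated assumptions, not the authors' implementation: it assumes the symmetries in question are the eight rotations and reflections of a square board, and it ties a single 2D filter by averaging it over those transforms. The helper names `dihedral_transforms` and `tie_symmetric` are hypothetical.

```python
import numpy as np

def dihedral_transforms(kernel):
    """Return the 8 rotations/reflections of a square 2D array."""
    transforms = []
    for k in range(4):
        rotated = np.rot90(kernel, k)          # rotate by k * 90 degrees
        transforms.append(rotated)
        transforms.append(np.fliplr(rotated))  # mirrored copy of that rotation
    return transforms

def tie_symmetric(kernel):
    """Average a kernel over all 8 symmetries.

    Weights that map onto each other under a rotation or reflection end up
    with the same value, which is one simple way to 'hard code' the symmetry.
    """
    return np.mean(dihedral_transforms(kernel), axis=0)

# Assumed example: a random 3x3 filter, then its symmetry-tied version.
rng = np.random.default_rng(0)
raw = rng.normal(size=(3, 3))
tied = tie_symmetric(raw)

# The tied filter is unchanged by any rotation or reflection.
is_invariant = all(np.allclose(t, tied) for t in dihedral_transforms(tied))
```

In a real network the tying would more likely be built into the parameterization (shared parameters) rather than applied by averaging after the fact; the averaging form is used here only because it is short and easy to check.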

If you are going to pursue the study of Monte Carlo Tree Search for semantic purposes, there isn’t any reason to not enjoy yourself as well. 😉

And following the best efforts in game playing will be educational as well.

I take the efforts at playing Go by computer, as well as those for chess, as indicating how far ahead of AI humans are.

Both of those two-player, complete knowledge games were mastered long ago by humans. Multi-player games with extended networks of influence and motives, not to mention incomplete information as well, seem securely reserved for human players for the foreseeable future. (I wonder if multi-player scenarios are similar to the multi-body problem in physics? Except with more influences.)

I first saw this in a tweet by Ebenezer Fogus.