Kaspersky Lab released The Human Factor in IT Security in July 2017. Nikolay Pankov summarized it in The human factor: Can employees learn to not make mistakes?, saying in part:

…

- 46% of incidents in the past year involved employees who compromised their company’s cybersecurity unintentionally or unwittingly;

- Of the companies affected by malicious software, 53% said that infection could not have happened without the help of inattentive employees, and 36% blame social engineering, which means that someone intentionally tricked the employees;

- Targeted attacks involving phishing and social engineering were successful in 28% of cases;

- In 40% of cases, employees tried to conceal the incident after it happened, amplifying the damage and further compromising the security of the affected company;

- Almost half of the respondents worry that their employees inadvertently disclose corporate information through the mobile devices they bring to the workplace.

…

If anything, human stupidity is a constant with little hope of improvement.

For example, the “Big Three” automobile manufacturers were all established by the mid-1920s, and now, almost a century later, the National Highway Traffic Safety Administration reports that in 2015 there were 6.3 million police-reported automobile accidents (an increase of 3.8% over the previous year).

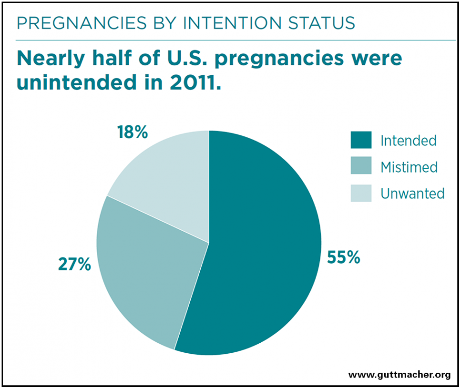

Or consider another type of “accident,” for which the Guttmacher Institute reports figures for 2011:

Not to rag on users exclusively: vulnerabilities due to misconfiguration, failure to patch, and flaws in security programs and in programs more generally are due to human stupidity as well.

0-days will always capture the headlines and are a necessity against some opponents. At the same time, testing for human stupidity is certainly cheaper than advanced techniques and often just as effective.

Transparency is coming … to the USA! (Apologies to Leonard Cohen)