Massively Parallel Clustering: Overview by Grigory Yaroslavtsev.

From the post:

Clustering is one of the main vehicles of machine learning and data analysis.

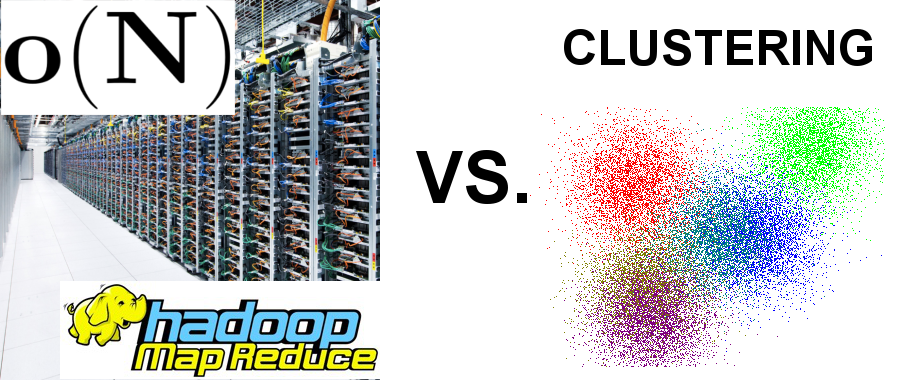

In this post I will describe how to make three very popular sequential clustering algorithms (k-means, single-linkage clustering and correlation clustering) work for big data. The first two algorithms can be used for clustering a collection of feature vectors in \(d\)-dimensional Euclidean space (like the two-dimensional set of points in the picture above, though they also work for high-dimensional data). The last one can be used for arbitrary objects as long as for any pair of them one can define some measure of similarity.

Besides optimizing different objective functions these algorithms also give qualitatively different types of clusterings.

K-means produces a set of exactly k clusters. Single-linkage clustering gives a hierarchical partitioning of the data, which one can zoom into at different levels and get any desired number of clusters.
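To make the contrast between the two outputs concrete, here is a minimal sequential sketch of both algorithms using only the Python standard library. It is not the massively parallel version the post describes. The function names `kmeans` and `single_linkage` are hypothetical. For k-means it assumes the classic Lloyd iteration with random initialization (the post may seed differently, for example with k-means++). Single linkage is computed by Kruskal-style merging over all pairwise distances, which is quadratic in the number of points and only meant for small inputs.

```python
import math
import random


def kmeans(points, k, iters=20, seed=0):
    """Lloyd's algorithm: returns (centers, labels) with exactly k centers."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # initialize with k distinct input points
    labels = []
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: each center moves to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old center if a cluster becomes empty
                centers[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centers, labels


def single_linkage(points, k):
    """Single-linkage clustering cut at k clusters (Kruskal-style merging).

    Returns (labels, merges), where merges lists the distances at which
    clusters were joined, in increasing order: the levels of the hierarchy.
    """
    n = len(points)
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # All pairwise edges, shortest first.
    edges = sorted((math.dist(points[i], points[j]), i, j)
                   for i in range(n) for j in range(i + 1, n))
    merges = []
    components = n
    for d, i, j in edges:
        if components == k:  # stop "zooming out" once k clusters remain
            break
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            components -= 1
            merges.append(d)
    # Relabel cluster roots as 0, 1, 2, ... in order of first appearance.
    roots = {}
    labels = [roots.setdefault(find(i), len(roots)) for i in range(n)]
    return labels, merges


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(kmeans(pts, 2)[1])
    print(single_linkage(pts, 2)[0])  # [0, 0, 0, 1, 1, 1]
```

Calling `single_linkage(pts, k)` with a different `k` cuts the same merge sequence at a different level, which is the "zoom" the quote describes; k-means, by contrast, has to be rerun for each `k`.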

Finally, in correlation clustering the number of clusters is not known in advance and is chosen by the algorithm itself in order to optimize a certain objective function.

All algorithms described in this post use the model for massively parallel computation that I described before.
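To see how a correlation clustering algorithm can pick the number of clusters by itself, here is a sketch of one well-known sequential method, the randomized Pivot algorithm (also called KwikCluster) of Ailon, Charikar and Newman. It is offered as an illustration, not as the algorithm the post parallelizes. It assumes the standard objective of minimizing disagreements (similar pairs split apart plus dissimilar pairs kept together) and a symmetric yes/no similarity predicate; the names `pivot_cluster`, `disagreements` and `similar` are hypothetical.

```python
import random


def pivot_cluster(n, similar, seed=0):
    """Randomized Pivot (KwikCluster) for correlation clustering.

    n: number of objects, labelled 0..n-1.
    similar(i, j): True if objects i and j are judged similar (assumed symmetric).
    Returns a list of clusters; how many there are is decided by the algorithm.
    """
    rng = random.Random(seed)
    order = list(range(n))
    rng.shuffle(order)  # a random permutation decides which objects become pivots
    unclustered = set(range(n))
    clusters = []
    for p in order:
        if p not in unclustered:
            continue
        # The pivot takes every still-unclustered object it is similar to.
        cluster = [p] + [q for q in order if q in unclustered and q != p and similar(p, q)]
        unclustered.difference_update(cluster)
        clusters.append(sorted(cluster))
    return clusters


def disagreements(clusters, n, similar):
    """Objective value: similar pairs split apart plus dissimilar pairs kept together."""
    label = {x: c for c, members in enumerate(clusters) for x in members}
    cost = 0
    for i in range(n):
        for j in range(i + 1, n):
            same_cluster = label[i] == label[j]
            cost += similar(i, j) != same_cluster
    return cost


if __name__ == "__main__":
    words = ["apple", "avocado", "banana", "blueberry", "cherry"]

    def same_initial(i, j):
        # Hypothetical similarity measure: words sharing a first letter.
        return words[i][0] == words[j][0]

    clusters = pivot_cluster(len(words), same_initial)
    print(len(clusters))  # 3
    print(disagreements(clusters, len(words), same_initial))  # 0
```

Nobody told the algorithm to produce three clusters; the number falls out of the similarity judgments.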

…

I thought you might be interested in parallel clustering algorithms after the post on OSM-France. Don’t skip the earlier post on the model for massively parallel computation: it and the discussion that follows are rich in resources on parallel clustering, with lots of links.

I take heart from the line:

The last one [Correlation Clustering] can be used for arbitrary objects as long as for any pair of them one can define some measure of similarity.

The words “some measure of similarity” should be taken as a warning that any particular “measure of similarity” should be examined closely and tested against the data so processed. It could be that a given “measure of similarity” produces the desired result only on a particular data set. You won’t know until you look.
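One cheap way to look before clustering, sketched here as an assumption rather than anything the post prescribes, is to count "bad triangles": triples where a is similar to b and b to c, but a is not similar to c. Every clustering must disagree with at least one pair in each such triple, so a count of zero means the measure is transitive on that data and can be clustered with no disagreements, while a high count warns that any clustering will contradict the measure somewhere. The threshold measure `within(t)` and the sample `values` are hypothetical.

```python
from itertools import combinations


def bad_triangles(items, similar):
    """Count triples with exactly two similar pairs and one dissimilar pair."""
    count = 0
    for a, b, c in combinations(items, 3):
        plus = [similar(a, b), similar(b, c), similar(a, c)]
        if sum(plus) == 2:  # e.g. a~b, b~c, but not a~c
            count += 1
    return count


def within(t):
    """Hypothetical similarity measure: numbers are similar if they differ by at most t."""
    return lambda x, y: abs(x - y) <= t


values = [1, 2, 3, 10, 11, 30]
for t in (1, 5):
    print(t, bad_triangles(values, within(t)))
# 1 1   (1~2 and 2~3, but 1 and 3 differ by 2)
# 5 0
```

The same data looks perfectly clusterable under one threshold and inconsistent under another, which is the point of testing the measure against the data rather than trusting it.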