The Deadly Data Science Sin of Confirmation Bias by Michael Walker.

From the post:

Confirmation bias occurs when people actively search for and favor information or evidence that confirms their preconceptions or hypotheses while ignoring or slighting adverse or mitigating evidence. It is a type of cognitive bias (pattern of deviation in judgment that occurs in particular situations – leading to perceptual distortion, inaccurate judgment, or illogical interpretation) and represents an error of inductive inference toward confirmation of the hypothesis under study.

Data scientists exhibit confirmation bias when they actively seek out and assign more weight to evidence that confirms their hypothesis, and ignore or underweight evidence that could disconfirm their hypothesis. This is a type of selection bias in collecting evidence.

Note that confirmation biases are not limited to the collection of evidence: even if two (2) data scientists have the same evidence, their respective interpretations may be biased. In my experience, many data scientists exhibit a hidden yet deadly form of confirmation bias when they interpret ambiguous evidence as supporting their existing position. This is difficult and sometimes impossible to detect yet occurs frequently.

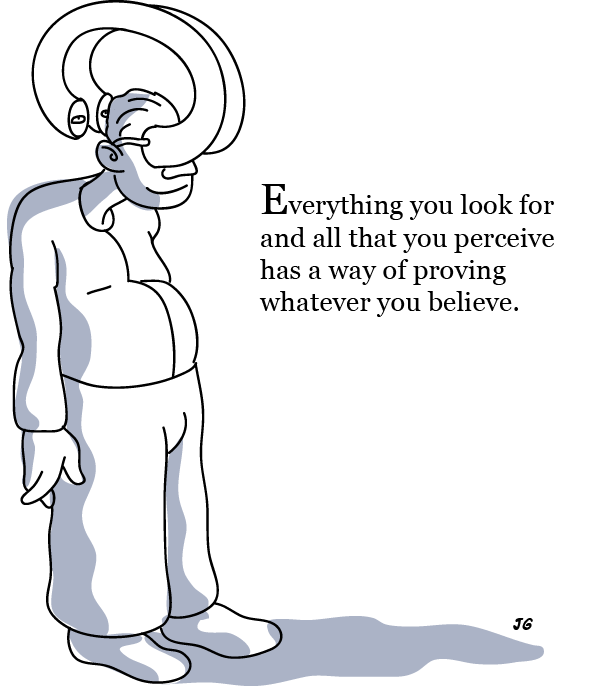

Isn’t that a great graphic? Michael goes on to list several resources that will help in spotting confirmation bias, yours and that of others. Not 100%, but you will do better by heeding his advice.

Be aware that confirmation bias isn’t confined to statistical and/or data science methods. Decision makers, topic map authors, fact gatherers, etc. are all subject to confirmation bias.

Michael sees confirmation bias as dangerous to the credibility of data science, writing:

The evidence suggests confirmation bias is rampant and out of control in both the hard and soft sciences. Many academic or research scientists run thousands of computer simulations where all fail to confirm or verify the hypothesis. Then they tweak the data, assumptions or models until confirmatory evidence appears to confirm the hypothesis. They proceed to publish the one successful result without mentioning the failures! This is unethical, may be fraudulent and certainly produces flawed science where a significant majority of results cannot be replicated. This has created a loss of confidence and credibility for science by the public and policy makers that has serious consequences for our future.

The danger for professional data science practitioners is providing clients and employers with flawed data science results leading to bad business and policy decisions. We must learn from the academic and research scientists and proactively avoid confirmation bias or data science risks loss of credibility.
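The "publish the one successful result" pattern Michael describes is easy to reproduce. Here is a minimal sketch, not taken from his post: the function names, sample size, and the 1.96 cutoff are assumptions chosen for illustration. Every simulated "study" compares two groups drawn from the same distribution, so there is no real effect to find, yet a handful of studies will still clear a conventional significance threshold by chance alone.

```python
import random
import statistics


def run_null_study(rng, n=50):
    """Simulate one study in which the hypothesis is false.

    Both groups come from the same normal distribution, so any
    "significant" difference is a false positive.
    """
    group_a = [rng.gauss(0.0, 1.0) for _ in range(n)]
    group_b = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # z statistic for a difference in means with known unit variance:
    # the standard error of the difference is sqrt(1/n + 1/n).
    z = (statistics.fmean(group_a) - statistics.fmean(group_b)) / (2.0 / n) ** 0.5
    return abs(z) > 1.96  # roughly the two-sided 5% level


def count_false_positives(num_studies=1000, seed=0):
    """Count how many null studies look 'significant' anyway."""
    rng = random.Random(seed)
    return sum(run_null_study(rng) for _ in range(num_studies))


if __name__ == "__main__":
    hits = count_false_positives()
    print(f"{hits} of 1000 null studies looked 'significant'")
```

With a 5% threshold you should expect on the order of 50 spurious "confirmations" per 1000 attempts. Report only those and stay silent about the rest, and you have manufactured evidence for a hypothesis that is false by construction.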

I don’t think bad business and policy decisions need any help from “flawed data science.” You may recall that “policy makers” not all that many years ago dismissed a failure to find weapons of mass destruction, a key motivation for war, as irrelevant in hindsight.

My suggestion would be to make your data analysis as complete and accurate as possible and always keep digitally signed and encrypted copies of data and communications with your clients.
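As a first step toward that kind of record keeping, here is a minimal sketch using only the Python standard library. To be clear about its limits: a SHA-256 fingerprint is neither a digital signature nor encryption. It only lets you show later that a file has not changed since you recorded it. Signing and encrypting the resulting record would need a separate tool of your choice. The function and field names below are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def make_record(name: str, data: bytes) -> dict:
    """Build a small record tying a name to its fingerprint and a UTC timestamp."""
    return {
        "name": name,
        "sha256": fingerprint(data),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    # Hypothetical dataset contents, for illustration only.
    record = make_record("client-dataset.csv", b"id,value\n1,42\n")
    print(json.dumps(record, indent=2))
```

Store the record somewhere the data itself does not live. If the dataset or a message is later disputed, recomputing the digest shows whether your copy still matches what you recorded.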