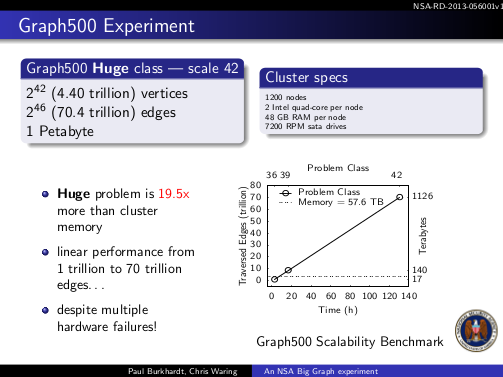

An NSA Big Graph Experiment by Paul Burkhardt and Chris Waring.

Slide presentation on processing graphs with Apache Accumulo.

Which has some impressive numbers:

Except that if you review the Graph 500 Benchmark Specification,

N the total number of vertices, 2SCALE. An implementation may use any set of N distinct integers to number the vertices, but at least 48 bits must be allocated per vertex number. Other parameters may be assumed to fit within the natural word of the machine. N is derived from the problem’s scaling parameter.

You find that all the nodes are normalized (no duplicates).

Moreover, the Graph 500 Benchmark cites:

The graph generator is a Kronecker generator similar to the Recursive MATrix (R-MAT) scale-free graph generation algorithm [Chakrabarti, et al., 2004].

Which provides:

There is a subtle point here: we may have duplicate edges (ie., edges which fall into the same cell in the adjacency matrix), but we only keep one of them. (R-MAT: A Recursive Model for Graph Mining by Deepayan Chakrabarti,

Yiping Zhan, and Christos Faloutsos.

By design, the Graph 500 Benchmark operates on completely normalized graphs.

I mention that because the graphs from Verizon, credit bureaus, FaceBook, Twitter, etc. are anything but normalized, some internally but all externally to each other.

Scaling Big Data Mining Infrastructure: The Twitter Experience by Jimmy Lin and Dmitriy Ryaboy is a chilling outline of semantic impedance in data within a single organization. Semantic impedance that would be reflected in graph processing of that data.

How much more semantic impedance will be encountered when graphs are build from diverse data sources?

Bottom line: The NSA gets great performance from Accumulo on normalized graphs, graphs that do not reflect real-world, non-normalized data.

I first saw this NSA presentation at Here’s how the NSA analyzes all that call data by Derrick Harris.