Homotopy Type Theory by Robert Harper. (Course with video lectures, notes, etc.)

Synopsis:

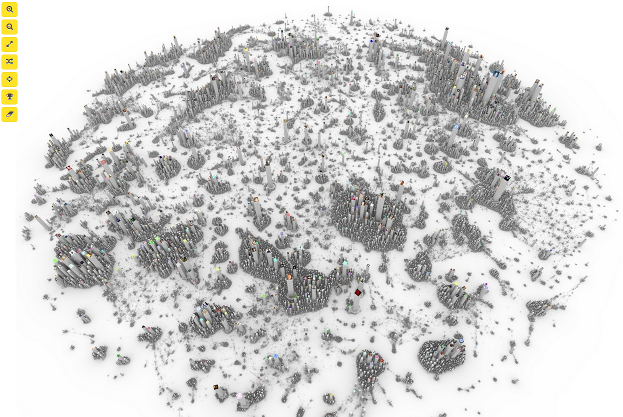

This is a graduate research seminar on Homotopy Type Theory (HoTT), a recent enrichment of Intuitionistic Type Theory (ITT) to include "higher-dimensional" types. The dimensionality of a type refers to the structure of its paths, the constructive witnesses to the equality of pairs of elements of a type, which themselves form a type, the identity type. In general a type is infinite dimensional in the sense that it exhibits non-trivial structure at all dimensions: it has elements, paths between elements, paths between paths, and so on to all finite levels. Moreover, the paths at each level exhibit the algebraic structure of a (higher) groupoid, meaning that there is always the "null path" witnessing reflexivity, the "inverse" path witnessing symmetry, and the "concatenation" of paths witnessing transitivity such that group-like laws hold "up to higher homotopy". Specifically, there are higher-dimensional paths witnessing the associative, unital, and inverse laws for these operations. Altogether this means that a type is a weak ∞-groupoid.

The significance of the higher-dimensional structure of types lies in the concept of a type-indexed family of types. Such families exhibit the structure of a fibration, which means that a path between two indices "lifts" to a transport mapping between the corresponding instances of the family that is, in fact, an equivalence. Thinking of paths as constructive witnesses for equality, this amounts to saying that equal indices give rise to equivalent types, and hence, by univalence, equal elements of the universe in which the family is valued. Thus, for example, if we think of the interval I as a type with two endpoints connected by a path, then an I-indexed family of types must assign equivalent types to the endpoints. In contrast the type B of booleans consists of two disconnected points, so that a B-indexed family of types may assign unrelated types to the two points of B. Similarly, mappings from I into another type A must assign connected points in A to the endpoints of the interval, whereas mappings from B into A are free to assign arbitrary points of A to the two booleans. These preservation principles are central to the structure of HoTT.

In many cases the path structure of a type becomes trivial beyond a certain dimension, called the level of the type. By convention the levels start at -2 and continue through -1, 0, 1, 2, and so on indefinitely. At the lowest, -2, level, the path structure of a type is degenerate in that there is an element to which all other elements are equal; such a type is said to be contractible, and is essentially a singleton. At the next higher level, -1, the type of paths between any two elements is contractible (level -2), which means that any two elements are equal, if there are any elements at all; such as type is a sub-singleton or h-proposition. At the next level, 0, the type of paths between paths between elements is contractible, so that any two elements are equal "in at most one way"; such a type is a set whose types of paths (equality relations) are all h-prop’s. Continuing in this way, types of level 1 are groupoids, those of level 2 are 2-groupoids, and so on for all finite levels.

ITT is capable of expressing only sets, which are types of level 0. Such types may have elements, and two elements may be considered equal in at most one way. A large swath of (constructive) mathematics may be formulated using only sets, and hence is amenable to representation in ITT. Computing applications, among others, require more than just sets. For example, it is often necessary to suppress distinctions among elements of a type so as to avoid over-specification; this is called proof irrelevance. Traditionally ITT has been enriched with an ad hoc treatment of proof irrelevance by introducing a universe of "propositions" with no computational content. In HoTT such propositions are types of level -1, requiring no special treatment or distinction. Such types arise by propositional truncation of a type to render degenerate the path structure of a type above level -1, ensuring that any two elements are equal in the sense of having a path between them.

Propositional truncation is just one example of a higher inductive type, one that is defined by specifying generators not only for its elements, but also for its higher-dimensional paths. The propositional truncation of a type is one that includes all of the elements of the type, and, in addition, a path between any two elements, rendering them equal. It is a limiting case of a quotient type in which only certain paths between elements are introduced, according to whether they are deemed to be related. Higher inductive types also permit the representation of higher-dimensional objects, such as the spheres of arbitrary dimension, as types, simply by specifying their "connectivity" properties. For example, the topological circle consists of a base point and a path starting and ending at that point, and the topological disk may be thought of as two half circles that are connected by a higher path that "fills in" the interior of the circle. Because of their higher path structure, such types are not sets, and neither are constructions such as the product of two circles.

The univalence axiom implies that an equivalence between types (an "isomorphism up to isomorphism") determines a path in a universe containing such types. Since two types can be equivalent in many ways (for example, there can be distinct bijections between two sets), univalence gives rise to types that are not sets, but rather are of a higher level, or dimension. The univalence axiom is mathematically efficient because it allows us to treat equivalent types as equal, and hence interchangeable in all contexts. In informal settings such identifications are often made by convention; in formal homotopy type theory such identifications are true equations.

If you think data types are semantic primitives with universal meaning/understanding, feel free to ignore this posting.

Data types can be usefully treated “as though” they are semantic primitives, but mistaking convenience for truth can be expensive.

The never ending cycle of enterprise level ETL for example. Even when it ends well it is expensive.

And there are all the cases where ETL or data integration don’t end well.

Homotopy Type Theory may not be the answer to those problems, but our current practices are known to not work.

Why not bet on an uncertain success versus the certainty of expense and near-certainty of failure?