Amazon S3 storage buckets set to ‘public’ are ripe for data-plundering, by Ted Samson.

From the post:

Using a combination of relatively low-tech techniques and tools, security researchers have discovered that they can access the contents of one in six Amazon Simple Storage Service (S3) buckets. Those contents range from sales records and personal employee information to source code and unprotected database backups. Much of the data could be used to stage a network attack, to compromise user accounts, or to sell on the black market.

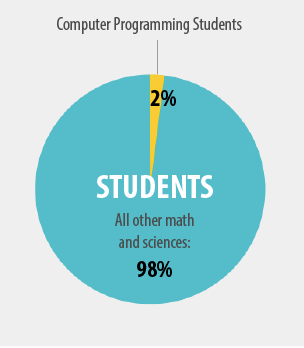

All told, researchers managed to discover and explore nearly 2,000 buckets from which they gathered a list of more than 126 billion files. They reviewed over 40,000 publicly visible files, many of which contained sensitive information, according to Rapid7 Senior Security Consultant Will Vandevanter.

….

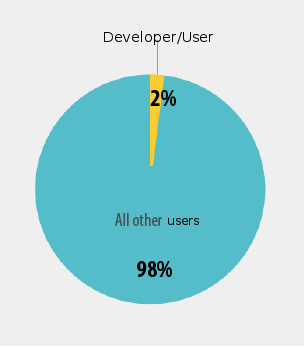

The root of the problem isn’t a security hole in Amazon’s storage cloud, according to Vandevanter. Rather, he credited Amazon S3 account holders who have failed to set their buckets to private, or, to put it more bluntly, organizations that have embraced the cloud without fully understanding it. The fact that all S3 buckets have predictable, publicly accessible URLs doesn’t help, though.

That was close!

From the headline I thought Chinese government hackers had carelessly left Amazon S3 storage buckets open after downloading. 😉
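Since the quote points at predictable, publicly accessible URLs, here is a rough sketch of how you might check one of your own buckets (with permission, of course). It assumes the virtual-hosted-style address `https://<bucket>.s3.amazonaws.com/` and the usual responses to an anonymous request: 200 for a listable bucket, 403 for one that exists but denies listing, 404 for no such bucket. The function names and the bucket name are made up for illustration; this is not the researchers' tooling.

```python
import urllib.error
import urllib.request


def bucket_url(bucket_name: str) -> str:
    """Build the virtual-hosted-style URL for a bucket's root (assumed format)."""
    return f"https://{bucket_name}.s3.amazonaws.com/"


def classify_status(status: int) -> str:
    """Map the HTTP status of an anonymous GET to a rough verdict."""
    if status == 200:
        return "publicly listable"
    if status == 403:
        return "exists, listing denied"
    if status == 404:
        return "no such bucket"
    return f"inconclusive (HTTP {status})"


def check_bucket(bucket_name: str, timeout: float = 10.0) -> str:
    """Send one unauthenticated GET to the bucket root and classify the reply."""
    try:
        with urllib.request.urlopen(bucket_url(bucket_name), timeout=timeout) as resp:
            return classify_status(resp.status)
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; the status code is in err.code.
        return classify_status(err.code)
    except OSError as err:
        # DNS failures, TLS errors, timeouts and similar network problems.
        return f"inconclusive ({err})"


if __name__ == "__main__":
    # Hypothetical bucket name: substitute one you own or are authorized to test.
    print(check_bucket("example-bucket-you-own"))
```

A "listing denied" result only says the bucket listing is closed. Individual objects can still be public, so treat this as a first pass rather than an audit.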

If you want an even lower tech technique for hacking into your network, try the following (with permission):

Call users from your internal phone system and say system passwords have been stolen and IT will monitor all logins for 72 hours. To monitor access, IT needs users’ logins and passwords to put tracers on accounts. Could make the difference between next quarter’s earnings being up or being non-existent.

After testing, are you in more danger from your internal staff than external hackers?

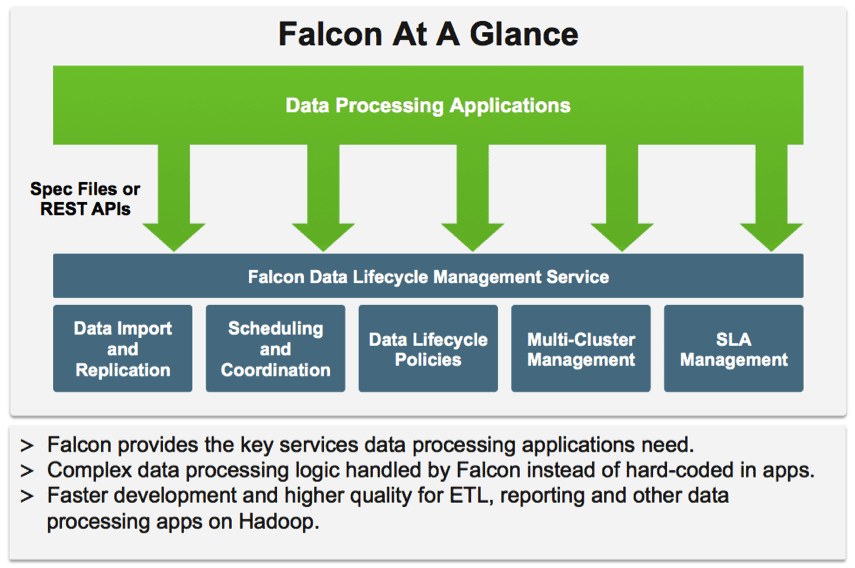

As you might suspect, I would be using a topic map to provide security accountability across both IT and users.

With the goal of helping security risks become someone else’s security risks.