New Jersey-based Fraud Ring Charged this Week: The Perfect Case for Social Network Analysis by Mike Betron.

When I first saw the headline, I thought the New Jersey legislature had gotten busted. 😉

No such luck, although with real transparency on contributions, relationships and state contracts, prison building would become a growth industry in New Jersey and elsewhere.

From the post:

As reported by MSN Money this week, eighteen members of a fraud ring have just been charged in what may be one of the largest international credit card scams in history. The New Jersey-based fraud ring is reported to have stolen at least $200 million, fooling credit card agencies by creating thousands of fake identities to create accounts.

What They Did

The FBI claims the members of the ring began their activity as early as 2007, and over time, used more than 7,000 fake identities to get more than 25,000 credit cards, using more than 1,800 addresses. Once they obtained credit cards, ring members started out by making small purchases and paying them off quickly to build up good credit scores. The next step was to send false reports to credit agencies to show that the account holders had paid off debts – and soon, their fake account holders had glowing credit ratings and high spending limits. Once the limits were raised, the fraudsters would “bust out,” taking out cash loans or maxing out the cards with no intention of paying them back.

But here’s the catch: The criminals in this case created synthetic identities with fake identity information (social security numbers, names, addresses, phone numbers, etc.). Addresses for the account holders were used multiple times on multiple accounts, and the members created at least 80 fake businesses which accepted credit card payments from the ring members.

This is exactly the kind of situation that would be caught by Social Network Analysis (SNA) software. Unfortunately, the credit card companies in this case didn’t have it.

Well, yes and no.

Yes, if Social Network Analysis (SNA) software were looking for the right relationships, then it could catch the fraud in question.

No, if Social Network Analysis (SNA) software were looking at the wrong relationships, then it would not catch the fraud in question.
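
To make the yes/no concrete, here is a minimal sketch in Python. The account records, field names, and the `clusters_by` helper are all invented for illustration; nothing here comes from the actual case data or from any real SNA product. It only shows that the same grouping code finds clusters when pointed at a reused attribute (addresses, which the post says were used multiple times) and finds nothing when pointed at an attribute the fraudsters kept unique.

```python
from collections import defaultdict

# Hypothetical account records; every value is made up for this example.
ACCOUNTS = [
    {"id": "A1", "name": "Ann Lee", "ssn": "000-00-0001", "address": "12 Oak St"},
    {"id": "A2", "name": "Bob Cruz", "ssn": "000-00-0002", "address": "12 Oak St"},
    {"id": "A3", "name": "Cara Diaz", "ssn": "000-00-0003", "address": "12 Oak St"},
    {"id": "A4", "name": "Dan Egan", "ssn": "000-00-0004", "address": "9 Elm Ave"},
    {"id": "A5", "name": "Eve Ford", "ssn": "000-00-0005", "address": "9 Elm Ave"},
    {"id": "A6", "name": "Finn Gray", "ssn": "000-00-0006", "address": "77 Pine Rd"},
]


def clusters_by(accounts, field, min_size=2):
    """Return {shared value: [account ids]} for values of `field`
    that appear on at least `min_size` accounts."""
    groups = defaultdict(list)
    for account in accounts:
        groups[account[field]].append(account["id"])
    return {value: ids for value, ids in groups.items() if len(ids) >= min_size}


# The "right" relationship: accounts linked by a shared address.
by_address = clusters_by(ACCOUNTS, "address")

# A "wrong" relationship: each synthetic identity has its own SSN,
# so linking on SSN finds no clusters at all.
by_ssn = clusters_by(ACCOUNTS, "ssn")

print(by_address)
print(by_ssn)
```

The code is identical in both calls. Only the choice of relationship differs, and that choice is made by an analyst, not by the software.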

Analysis isn’t a question of technology.

For example, what one policy change would do more to prevent future 9/11-type incidents than all the $billions spent since 9/11/2001?

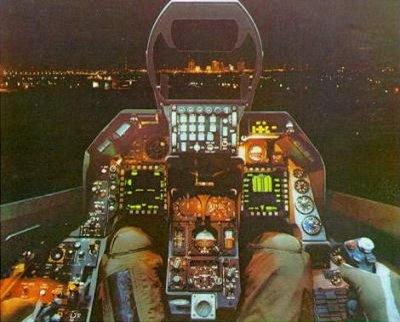

Would you believe: Don’t open the cockpit door for hijackers. (full stop)

The 9/11 hijackers took advantage of the “Common Strategy” flaw in U.S. hijacking protocols.

One of the FAA officials most involved with the Common Strategy in the period leading up to 9/11 described it as an approach dating back to the early 1980s, developed in consultation with the industry and the FBI, and based on the historical record of hijackings. The point of the strategy was to “optimize actions taken by a flight crew to resolve hijackings peacefully” through systematic delay and, if necessary, accommodation of the hijackers. The record had shown that the longer a hijacking persisted, the more likely it was to have a peaceful resolution. The strategy operated on the fundamental assumptions that hijackers issue negotiable demands, most often for asylum or the release of prisoners, and that “suicide wasn’t in the game plan” of hijackers.

Hijackers may still blow up a plane or kill or torture passengers, but keeping the cockpit door closed prevents a future 9/11-type event.

But on 9/11, there was no historical experience with hijacking a plane to use as a weapon.

Historical experience is just as important for detecting fraud.

Once a fraud pattern has been identified, SNA, topic maps, or several other technologies can spot it.

But it has to be identified that first time.