Making the meaning of contracts visible – Automating contract visualization by Stefania Passera, Helena Haapio, Michael Curtotti.

Abstract:

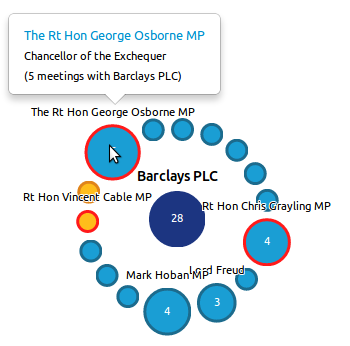

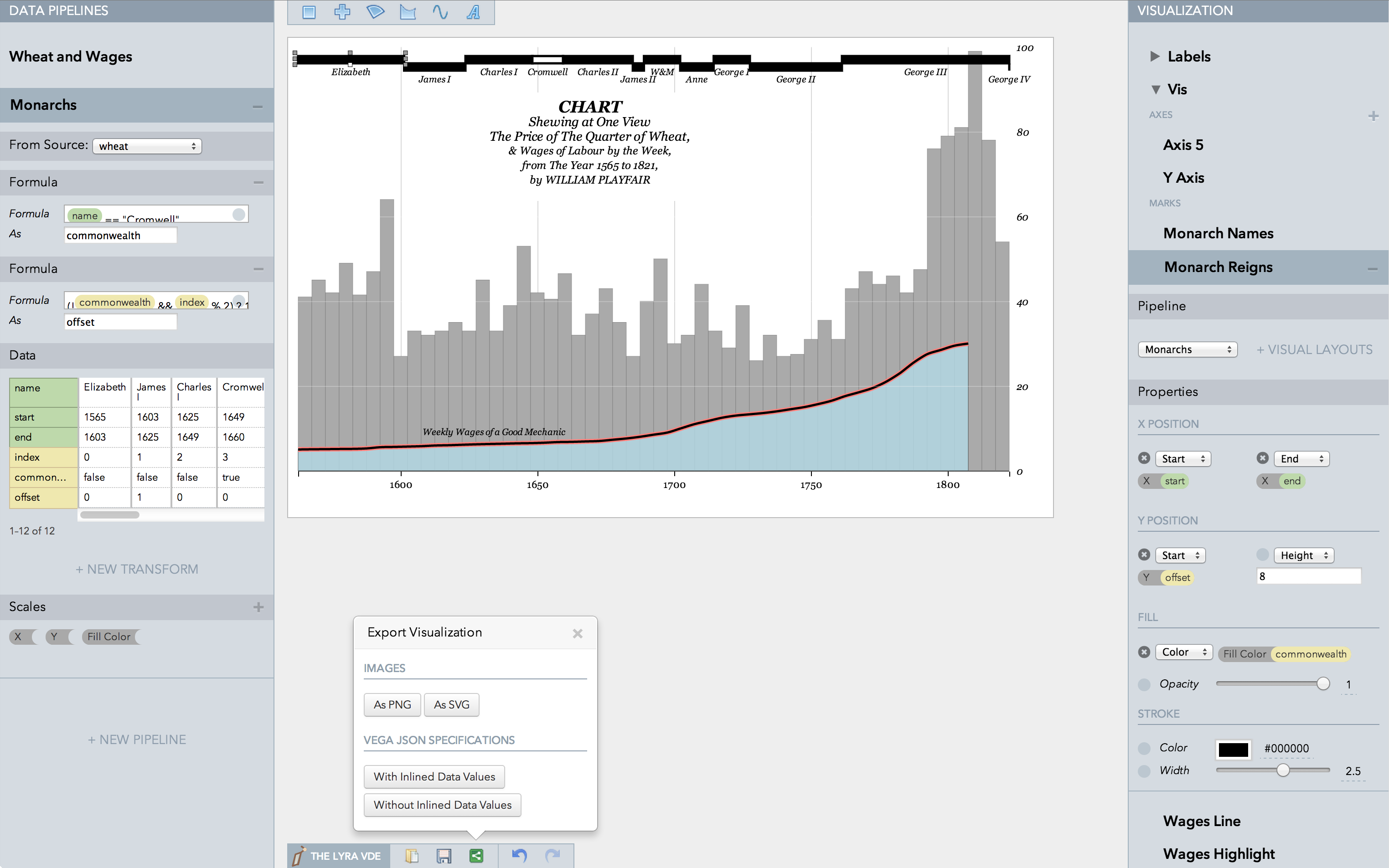

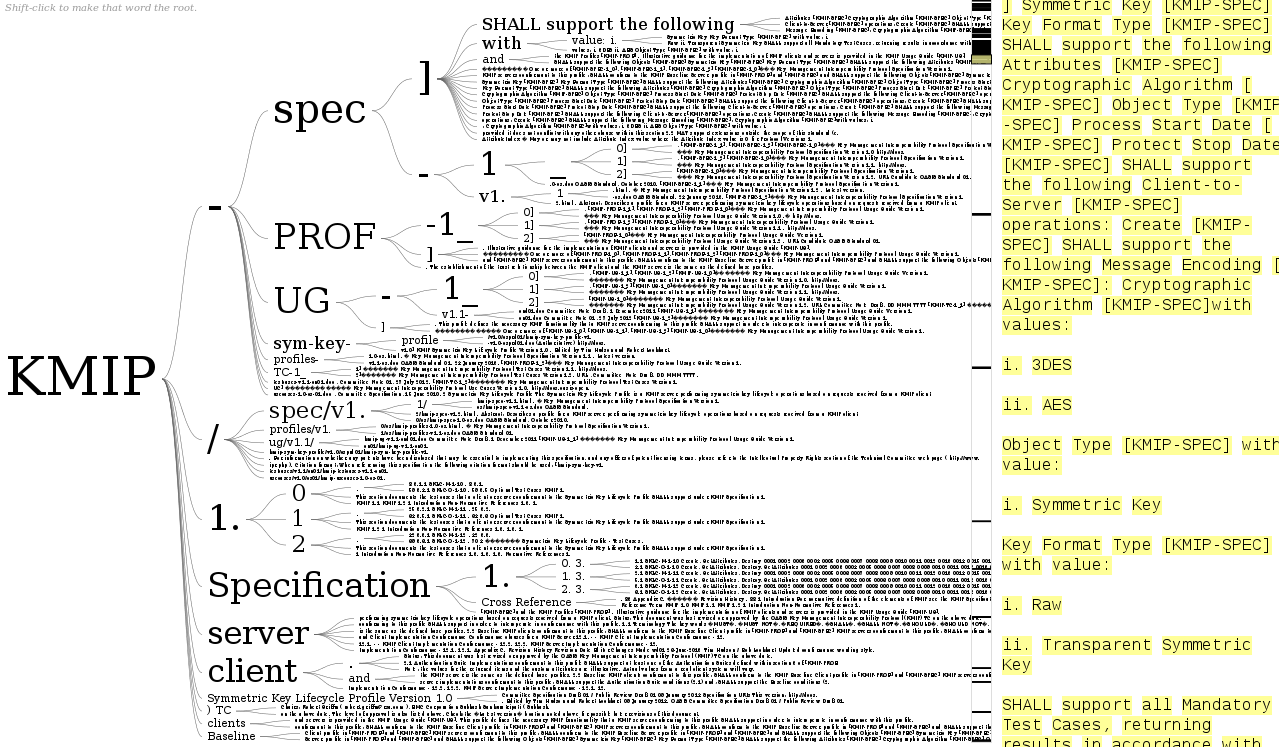

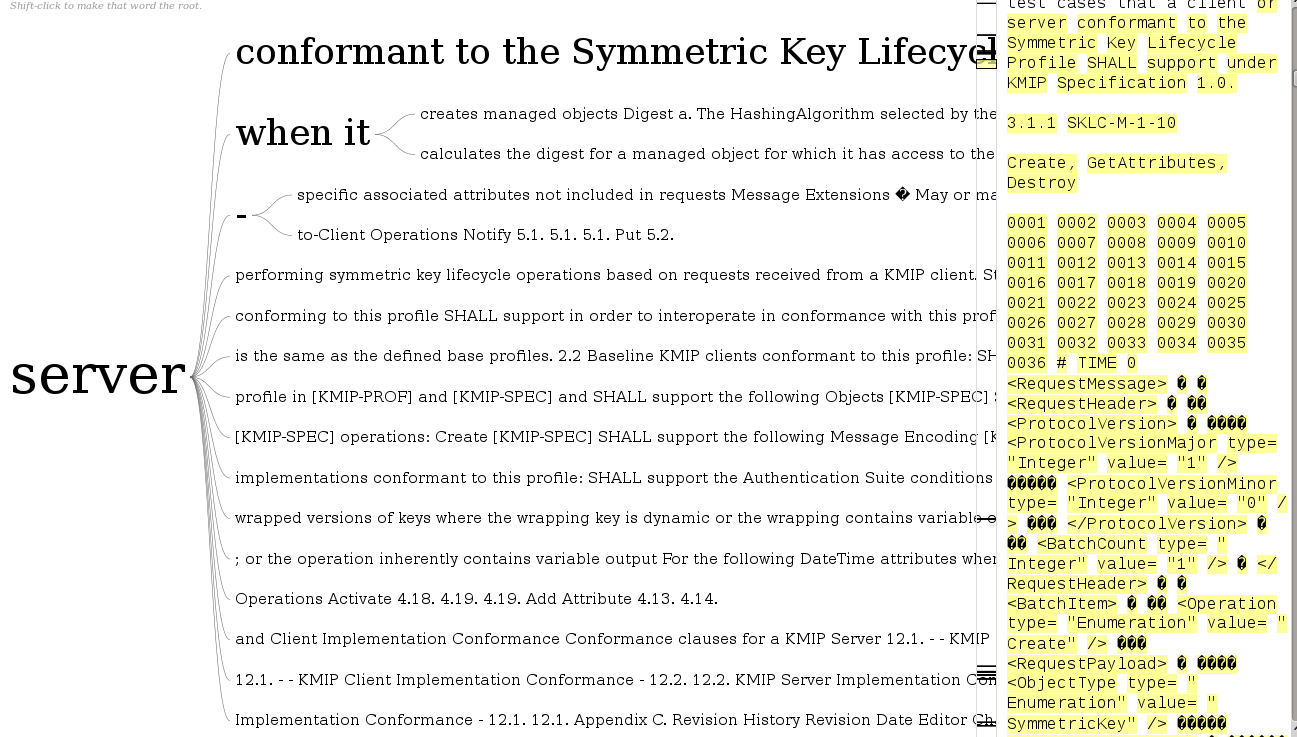

The paper, co-authored by Passera, Haapio and Curtotti, presents three demos of tools to automatically generate visualizations of selected contract clauses. Our early prototypes include common types of term and termination, payment and liquidated damages clauses. These examples provide proof-of-concept demonstration tools that help contract writers present content in a way readers pay attention to and understand. These results point to the possibility of document assembly engines compiling an entirely new genre of contracts, more user-friendly and transparent for readers and not too challenging to produce for lawyers.

Demo.

Slides.

From slides 2 and 3:

Need for information to be accessible, transparent, clear and easy to understand

Contracts are no exception.

Benefits of visualization

- Information encoded explicitly is easier to grasp & share

- Integrating pictures & text prevents cognitive overload by distributing effort on 2 different processing systems

- Visual structures and cues act as paralanguage, reducing the possibility of misinterpretation

Sounds like the output from a topic map, doesn’t it?

A contract is “explicit and transparent” to a lawyer, but that doesn’t mean everyone reading it sees the contract as “explicit and transparent.”

Making what the lawyer “sees” explicit, in other words, is another identification of the same subject, just a different way to describe it.

What’s refreshing is the recognition that not everyone understands the same description, hence the need for alternative descriptions.
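To make "alternative descriptions of the same subject" a little more concrete, here is a minimal sketch in Python. It is not the authors' tooling and not any topic map engine's API: the `Subject` class, the `timeline` helper, the identifier string and the sample clause wording are all hypothetical, invented purely for illustration. The idea is only that one clause can carry a lawyer's wording and a visual rendering side by side, both tied to the same subject.

```python
from dataclasses import dataclass, field


@dataclass
class Subject:
    """One subject (here, a contract clause) with several descriptions of it."""
    identifier: str  # hypothetical identifier, not taken from the paper
    descriptions: dict[str, str] = field(default_factory=dict)

    def describe(self, audience: str, text: str) -> None:
        # Add a description for an audience without discarding the others.
        self.descriptions[audience] = text

    def for_audience(self, audience: str) -> str:
        # Fall back to the lawyer's wording when no tailored description exists.
        return self.descriptions.get(audience, self.descriptions["lawyer"])


def timeline(initial_years: int, notice_months: int) -> str:
    """Render a term-and-termination clause as a crude text timeline."""
    bar = "=" * (initial_years * 4)  # four characters per year
    return (
        f"start |{bar}| end of year {initial_years} "
        f"(give notice {notice_months} months before)"
    )


# Invented sample clause: same subject, two descriptions.
clause = Subject("clause-term-and-termination")
clause.describe(
    "lawyer",
    "This Agreement remains in force for an initial term of three (3) years "
    "and may be terminated by six (6) months' written notice.",
)
clause.describe("manager", timeline(3, 6))

print(clause.for_audience("manager"))
print(clause.for_audience("auditor"))  # no tailored view, so the lawyer's text
```

Both descriptions hang off one identifier, so a later mapping can be re-used rather than re-argued.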

Some additional leads to explore on these authors:

Stefania Passera – Homepage with pointers to her work.

Helena Haapio – Profile at Lexpert, pointers to her work.

Michael Curtotti – Computational Tools for Reading and Writing Law.

There is a growing interest in making the law transparent to non-lawyers, which is going to require a lot more than “this is the equivalent of that, because I say so,” particularly for re-use of prior mappings.

Looks like a rapid growth area for topic maps to me.

You?

I first saw this at: Passera, Haapio and Curtotti: Making the meaning of contracts visible – Automating contract visualization.