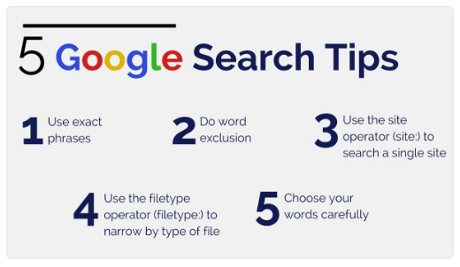

I saw the case on the ACLU website but had to dig through three posts before finding the text: Sandvig v. Lynch, Case 1:16-cv-01368, US District Court for the District of Columbia.

When referencing litigation, don’t point to yet another post; link directly to the document in question.

You may be interested in the following posts at the ACLU site:

ACLU Challenges Computer Crimes Law That is Thwarting Research on Discrimination Online

Your Favorite Website Might Be Discriminating Against You

SANDVIG V. LYNCH — CHALLENGE TO CFAA PROHIBITION ON UNCOVERING RACIAL DISCRIMINATION ONLINE

SANDVIG V. LYNCH – COMPLAINT (bingo!)

The ACLU argument is that potential discrimination by websites cannot be researched without posing as users with different characteristics, which violates the sites’ “terms of use,” and hence the CFAA.

I truly despise the CFAA, but given the nature of machine-learning-driven marketing, I think the ACLU argument has a problem.

You see it already but for the uninitiated:

The complaint uses the term “sock puppet” seventeen (17) times, for example:

…

91. Plaintiffs Sandvig and Karahalios will then instruct the bot to perform the exhibiting behaviors associated with a particular race, so that, for instance, one sock puppet would browse like a Black user, while another would browse like a white user. All the sock puppets will browse the Web for several weeks, periodically revisiting the initial real estate site to search for properties.

[One hopes discovery will include plaintiffs’ definition of “browse like a Black user, while another would browse like a white user.”]

92. At each visit to the real estate site, Plaintiffs Sandvig and Karahalios will record the properties that were advertised to that sock puppet by scraping that data from the real estate site. They will scrape the organic listings and the Uniform Resource Locator (“URL”) of any advertisements. They will also record images of the advertisements shown to the sock puppets.

93. Finally, Plaintiffs Sandvig and Karahalios will compare the number and location of properties offered to different sock puppets, as well as the properties offered to the same sock puppet at different times. They seek to identify cases where the sock puppet behaved as though it were a person of a particular race and that behavior caused it to see a significantly different set of properties, whether in number or location.

…
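For the uninitiated, the comparison described in paragraph 93 might look something like the sketch below. This is my illustration, not anything taken from the complaint: the `(listing_id, neighborhood)` record format, the function names, the made-up data, and the choice of Jaccard overlap as the measure are all assumptions.

```python
from collections import Counter


def jaccard(a, b):
    """Overlap between two collections of listing IDs (1.0 identical, 0.0 disjoint)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # two empty result sets are treated as identical
    return len(a & b) / len(a | b)


def compare(listings_a, listings_b):
    """Compare two lists of hypothetical (listing_id, neighborhood) records."""
    ids_a = [lid for lid, _ in listings_a]
    ids_b = [lid for lid, _ in listings_b]
    return {
        "count_a": len(set(ids_a)),   # number of distinct properties shown
        "count_b": len(set(ids_b)),
        "overlap": jaccard(ids_a, ids_b),
        "locations_a": Counter(hood for _, hood in listings_a),
        "locations_b": Counter(hood for _, hood in listings_b),
    }


# Made-up data for two sock puppets visiting the same site.
puppet_1 = [("p1", "north"), ("p2", "north"), ("p3", "east")]
puppet_2 = [("p3", "east"), ("p4", "south")]

result = compare(puppet_1, puppet_2)
print(result["count_a"], result["count_b"], result["overlap"])
```

The same `compare` call works for one sock puppet on two different days, which is the other comparison the complaint describes. Note what the numbers cannot tell you: why the two sets differ.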

As we all know, it took less than a day for a Microsoft chat-bot to become “a racist asshole.”

From all accounts it didn’t start off as “a racist asshole” so inputs must account for its ending state.

Not that the researchers will be inputting such content, but their content will be interacting in unforeseen and unpredictable ways with other content from other users, users who are unknown to the sites being tested.

It’s all well and good to test a website for discrimination, but holding it responsible for the input of unknown others seems like a bit of a stretch.

We won’t know, can’t know, whose input is responsible for whatever results the researchers obtain. The results could well be that no discrimination is found, by happenstance, when in fact the site, left to its own devices, does discriminate in some illegal way.

Another aspect of the same problem is that machine learning algorithms give discrete results; they do not provide an explanation for how they arrived at a result. That is, a site could be displaying high-paying job ads to one job seeker but not another because one “fake” user has been confused with a real user in an outside database, and additional information has been used but not exposed in the result.

Not to mention that, given the dynamic nature of machine learning, the site you complain about today as practicing discrimination may not be doing so tomorrow. Without an opportunity to capture the entire data set for each alleged claim of discrimination, websites can reply, “ok, you say that was your result on day X. Have you tried today?”

The testing-for-discrimination claim is a clever attack on the CFAA, but given the complexities and realities of machine learning, I don’t see it being a successful one.