Knoema Launches the World’s First Knowledge Platform Leveraging Data

From the post:

DEMO Spring 2012 conference — Today at DEMO Spring 2012, Knoema launched publicly the world’s first knowledge platform that leverages data and offers tools to its users to harness the knowledge hidden within the data. Search and exploration of public data, its visualization and analysis have never been easier. With more than 500 datasets on various topics, gallery of interactive, ready to use dashboards and its user friendly analysis and visualization tools, Knoema does for data what YouTube did to videos.

Millions of users interested in data, like analysts, students, researchers and journalists, struggle to satisfy their data needs. At the same time there are many organizations, companies and government agencies around the world collecting and publishing data on various topics. But still getting access to relevant data for analysis or research can take hours with final outcomes in many formats and standards that can take even longer to get it to a shape where it can be used. This is one of the issues that the search engines like Google or Bing face even after indexing the entire Internet due to the nature of statistical data and diversity and complexity of sources.

One-stop shop for data. Knoema, with its state of the art search engine, makes it a matter of minutes if not seconds to find statistical data on almost any topic in easy to ingest formats. Knoema’s search instantly provides highly relevant results with chart previews and actual numbers. Search results can be further explored with Dataset Browser tool. In Dataset Browser tool, users can get full access to the entire public data collection, explore it, visualize data on tables/charts and download it as Excel/CSV files.

Numbers made easier to understand and use. Knoema enables end-to-end experience for data users, allowing creation of highly visual, interactive dashboards with a combination of text, tables, charts and maps. Dashboards built by users can be shared to other people or on social media, exported to Excel or PowerPoint and embedded to blogs or any other web site. All public dashboards made by users are available in dashboard gallery on home page. People can collaborate on data related issues participating in discussions, exchanging data and content.

Excellent!!!

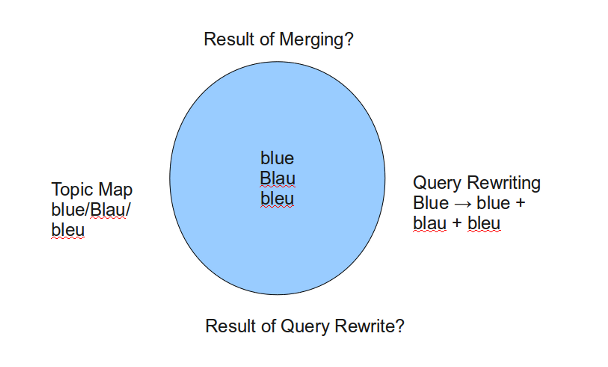

When “other” data becomes available, users will want to integrate it with their data.

But “other” data will have different or incompatible semantics.

So much for attempts to wrestle semantics to the ground (W3C) or build semantic prisons (unnamed vendors).

What semantics are useful to you today? (patrick@durusau.net)