Graph Encryption: Going Beyond Encrypted Keyword Search by Xianrui Meng.

From the post:

Encrypted search has attracted a lot of attention from practitioners and researchers in academia and industry. In previous posts, Seny already described different ways one can search on encrypted data. Here, I would like to discuss search on encrypted graph databases which are gaining a lot of popularity.

1. Graph Databases and Graph Privacy

As today’s data is getting bigger and bigger, traditional relational database management systems (RDBMS) cannot scale to the massive amounts of data generated by end users and organizations. In addition, RDBMSs cannot effectively capture certain data relationships, for example in object-oriented data structures, which are used in many applications. Today, NoSQL (Not Only SQL) has emerged as a good alternative to RDBMSs. One of the many advantages of NoSQL systems is that they are capable of storing, processing, and managing large volumes of structured, semi-structured, and even unstructured data. NoSQL databases (e.g., document stores, wide-column stores, key-value (tuple) stores, object databases, and graph databases) can provide the scale and availability needed in cloud environments.

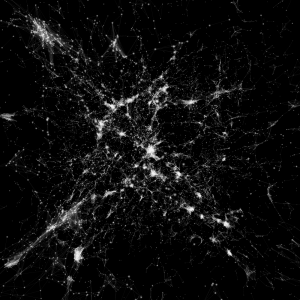

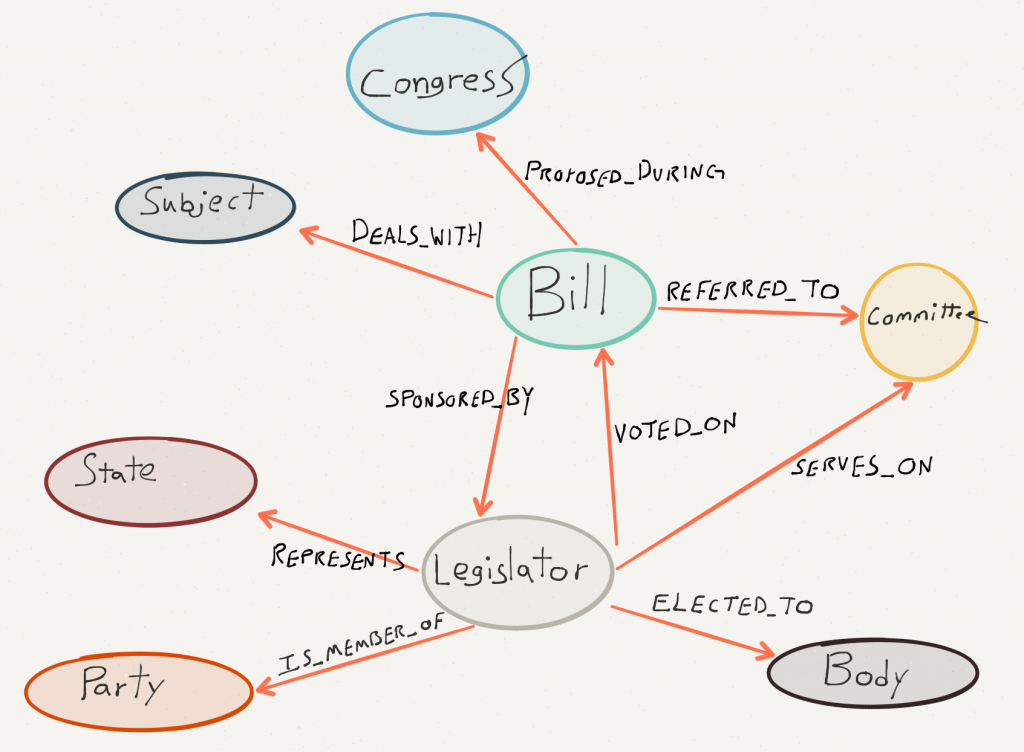

In an Internet-connected world, graph databases have become an increasingly significant data model among NoSQL technologies. Social networks (e.g., Facebook, Twitter, Snapchat), protein networks, the electrical grid, the Web, XML documents, and networked systems can all be modeled as graphs. One nice thing about graph databases is that they store the relations between entities (objects) in addition to the entities themselves and their properties. This allows the search engine to navigate both the data and their relationships extremely efficiently. Graph databases rely on the node-link-node relationship, where a node can be a profile or an object and the edge can be any relation defined by the application. Usually, we are interested in the structural characteristics of such graph databases.

What do we mean by the confidentiality of a graph? And how do we protect it? The problem has been studied by both the security and database communities. For example, in the database and data mining community, many solutions have been proposed based on graph anonymization. The core idea here is to anonymize the nodes and edges in the graph so that re-identification is hard. Although this approach may be efficient, from a security point of view it is hard to tell what is achieved. Also, by leveraging auxiliary information, researchers have studied how to attack this kind of approach. On the other hand, cryptographers have some really compelling and provably-secure tools such as ORAM and FHE (mentioned in Seny’s previous posts) that can protect all the information in a graph database. The problem, however, is their performance, which is crucial for databases. In today’s world, efficiency is more than running in polynomial time; we need solutions that run and scale to massive volumes of data. Many real world graph datasets, such as biological networks and social networks, have millions of nodes, and some even have billions of nodes and edges. Therefore, besides security, scalability is one of the main aspects we have to consider.

2. Graph Encryption

Previous work in encrypted search has focused on how to search encrypted documents, e.g., doing keyword search, conjunctive queries, etc. Graph encryption, on the other hand, focuses on performing graph queries on encrypted graphs rather than keyword search on encrypted documents. In some cases, this makes the problem harder since some graph queries can be extremely complex. Another technical challenge is that not only the privacy of nodes and edges but also the structure of the graph needs to be protected, which can lead to many interesting research directions.

Graph encryption was introduced by Melissa Chase and Seny in [CK10]. That paper shows how to encrypt graphs so that certain graph queries (e.g., neighborhood, adjacency and focused subgraphs) can be performed (though the paper is more general as it describes structured encryption). Seny and I, together with Kobbi Nissim and George Kollios, followed this up with a paper last year [MKNK15] that showed how to handle more complex graph queries.

…

Apologies for the long quote but I thought this topic might be new to some readers. Xianrui goes on to describe a solution for efficient queries over encrypted graphs.

Chase and Kamara remark in Structured Encryption and Controlled Disclosure, CK10:

…

To address this problem we introduce the notion of structured encryption. A structured encryption scheme encrypts structured data in such a way that it can be queried through the use of a query-specific token that can only be generated with knowledge of the secret key. In addition, the query process reveals no useful information about either the query or the data. An important consideration in this context is the efficiency of the query operation on the server side. In fact, in the context of cloud storage, where one often works with massive datasets, even linear time operations can be infeasible. (emphasis in original)

…
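For readers who think better in code, the token idea in that passage can be sketched for a single query type, the neighbors of a node. This is a toy illustration under stated assumptions, not the construction from CK10 or MKNK15: the function names (`encrypt_graph`, `make_token`, `server_lookup`, `decrypt_neighbors`) are hypothetical, HMAC-SHA256 stands in for the pseudorandom function, and the XOR keystream cipher is for demonstration only and should not be used to protect real data.

```python
import hashlib
import hmac
import json
import secrets
from typing import Dict, List, Optional


def prf(key: bytes, msg: bytes) -> bytes:
    """HMAC-SHA256 used as a pseudorandom function (an assumption of this sketch)."""
    return hmac.new(key, msg, hashlib.sha256).digest()


def xor_stream(key: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with an HMAC-derived keystream. Demonstration only."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = prf(key, offset.to_bytes(8, "big"))
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


def encrypt_graph(secret_key: bytes, adjacency: Dict[str, List[str]]) -> Dict[bytes, bytes]:
    """Build an index whose labels and values are opaque without the key."""
    index = {}
    for node, neighbors in adjacency.items():
        label = prf(secret_key, b"label|" + node.encode())
        mask_key = prf(secret_key, b"mask|" + node.encode())
        index[label] = xor_stream(mask_key, json.dumps(neighbors).encode())
    return index


def make_token(secret_key: bytes, node: str) -> bytes:
    """Query-specific token: only the key holder can compute it."""
    return prf(secret_key, b"label|" + node.encode())


def server_lookup(index: Dict[bytes, bytes], token: bytes) -> Optional[bytes]:
    """Server side: one dictionary lookup, no scan over the whole index."""
    return index.get(token)


def decrypt_neighbors(secret_key: bytes, node: str, ciphertext: bytes) -> List[str]:
    """Client side: unmask the neighbor list returned by the server."""
    mask_key = prf(secret_key, b"mask|" + node.encode())
    return json.loads(xor_stream(mask_key, ciphertext).decode())
```

The server-side work is a single lookup rather than a pass over the data, which is the efficiency point the quote stresses. In this toy version the server still learns which index entry was touched and when a query repeats, so it is a sketch of the mechanism and nothing more.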

With just a little nudging, their:

A structured encryption scheme encrypts structured data in such a way that it can be queried through the use of a query-specific token that can only be generated with knowledge of the secret key.

could be re-stated as:

A subject identity encryption scheme leaves out merging data in such a way that the resulting topic map can only be queried with knowledge of the subject identity merging key.

You may have topics that represent diagnoses such as cancer, AIDS, sexual contacts, but if none of those can be associated with individuals who are also topics in the map, there is no more disclosure than census results for a metropolitan area and a list of the citizens therein.

That is, you are missing the critical merging data that would link up (associate) any diagnosis with a given individual.

Multi-property subject identities would make the problem even harder, to say nothing of conferring properties on the basis of supplied properties as part of the merging process.

One major benefit of a subject identity based approach is that without the merging key, any data set, however sensitive the information, is just a data set, until you have the basis for solving its subject identity riddle.
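As a rough sketch of how a merging key might work, here is one possible approach in Python. Everything in it is an assumption made for illustration: the names `merge_id` and `merge`, the identity properties `name` and `clinic_no`, and the choice of HMAC-SHA256 over a canonical JSON form of a multi-property subject identity. Diagnosis topics carry only a keyed identifier, so they can be merged with person topics only by someone holding the key.

```python
import hashlib
import hmac
import json
import secrets
from typing import Dict, List


def merge_id(merging_key: bytes, identity: Dict[str, str]) -> str:
    """Keyed identifier for a multi-property subject identity (hypothetical scheme)."""
    canonical = json.dumps(identity, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(merging_key, canonical, hashlib.sha256).hexdigest()


def merge(merging_key: bytes,
          people: List[Dict[str, str]],
          diagnosis_topics: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Associate diagnoses with people; only works with the right merging key."""
    by_id = {merge_id(merging_key, person): person for person in people}
    merged = []
    for topic in diagnosis_topics:
        person = by_id.get(topic["subject"])
        if person is not None:
            merged.append({**person, "diagnosis": topic["diagnosis"]})
    return merged


# Example data (invented for illustration).
merging_key = secrets.token_bytes(32)
people = [
    {"name": "A. Example", "clinic_no": "0017"},
    {"name": "B. Sample", "clinic_no": "0042"},
]
diagnosis_topics = [
    {"subject": merge_id(merging_key, people[0]), "diagnosis": "diagnosis-1"},
    {"subject": merge_id(merging_key, people[1]), "diagnosis": "diagnosis-2"},
]

linked = merge(merging_key, people, diagnosis_topics)
unlinked = merge(secrets.token_bytes(32), people, diagnosis_topics)
```

With the right key, `linked` pairs each person with a diagnosis. With any other key, `unlinked` comes back empty, and the map is just a list of people and a list of diagnoses. Because the identifier is keyed with 256 random bits, guessing identity properties does not help an attacker who lacks the key.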

PS: With the usual caveats of not using social security numbers, birth dates and the like as your subject identity properties. At least not in the map proper. I can think of several ways to generate keys for merging that would be resistant to even brute force attacks.

Ping me if you are interested in pursuing that on a data set.