Can Big Data From Cellphones Help Prevent Conflict? by Emmanuel Letouzé.

From the post:

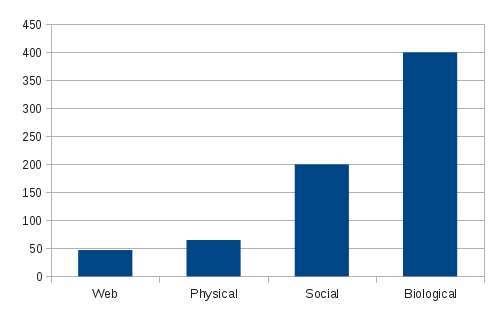

Data from social media and Ushahidi-style crowdsourcing platforms have emerged as possible ways to leverage cellphones to prevent conflict. But in the world of Big Data, the amount of information generated from these is too small to use in advanced data-mining techniques and “machine-learning” techniques (where algorithms adjust themselves based on the data they receive).

But there is another way cellphones could be leveraged in conflict settings: through the various types of data passively generated every time a device is used. “Phones can know,” said Professor Alex “Sandy” Pentland, head of the Human Dynamics Laboratory and a prominent computational social scientist at MIT, in a Wall Street Journal article. He says data trails left behind by cellphone and credit card users—“digital breadcrumbs”—reflect actual behavior and can tell objective life stories, as opposed to what is found in social media data, where intents or feelings are obscured because they are “edited according to the standards of the day.”

The findings and implications of this, documented in several studies and press articles, are nothing short of mind-blowing. Take a few examples. It has been shown that it was possible to infer whether two people were talking about politics using cellphone data, with no knowledge of the actual content of their conversation. Changes in movement and communication patterns revealed in cellphone data were also found to be good predictors of getting the flu days before it was actually diagnosed, according to MIT research featured in the Wall Street Journal. Cellphone data were also used to reproduce census data, study human dynamics in slums, and for community-wide financial coping strategies in the aftermath of an earthquake or crisis.

Very interesting post on the potential uses for cellphone data.

You can imagine what I think could be correlated with cellphone data using a topic map, so I won’t bother to enumerate those possibilities.

I did want to comment on the concern Emmanuel raises in his post about privacy with cellphone data, or “re-identification” as he calls it.

Governments, who have declared they can execute any of us without notice or a hearing, are the guardians of that privacy.

That causes me to lack confidence in their guarantees.

Discussions of privacy should assume governments already have unfettered access to all data.

The useful questions become: How do we detect their misuse of such data? And how do we make them heartily sorry for that misuse?

For cellphone data, open access will give government officials more reason to pause than it gives the ordinary citizen.

Less privacy for individuals, but also less privacy for access, bribery, contract padding, influence peddling, and other normal functions of government.

In the U.S.A., we have given up our rights to a public trial, probable cause, habeas corpus, protection against unreasonable search and seizure, and freedom from being touched by strangers, among several others.

What’s the loss of the right to privacy for cellphone data compared to catching government officials abusing their offices?