British intelligence spies on lawyer-client communications, government admits by David Meyer.

From the post:

After the Snowden leaks, British lawyers expressed fears that the government’s mass surveillance efforts could undermine the confidentiality of their conversations with clients, particularly when those clients were engaged in legal battles with the state. Those fears were well-founded.

On Thursday the legal charity Reprieve, which provides assistance to people accused of terrorism, U.S. death row prisoners and so on, said it had succeeded in getting the U.K. government to admit that spy agencies tell their staff they may target and use lawyer-client communications “just like any other item of intelligence.” This is despite the fact that both English common law and the European Court of Human Rights protect legal professional privilege as a fundamental principle of justice.

…

See David’s post for the full details.

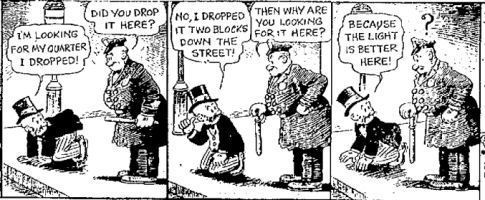

The dividends from 9/11 continue. After one substantial terrorist attack, the United States, the United Kingdom and a number of other countries are in headlong flight from their constitutions and from their traditions of individual liberty and freedom from government intrusion.

Given the lack of terrorist attacks in the United States following 9/11, either the United States isn’t on maps used by terrorists or they can’t afford a plane ticket to the US. I don’t consider the underwear bomber so much a terrorist as a sad follower who wanted to be a terrorist. If that’s the best they have, we are in no real danger.

What the terrorism debate needs is a public airing of credible risks and strategies for addressing those risks. The secret abandonment of centuries of legal tradition because government functionaries lack the imagination to combat common criminals is inexcusable.

Citizens are in far more danger from their governments than any known terrorist organization. Perhaps that was the goal of 9/11. If so, it was the most successful attack in human history.